In today’s digital age, it is easy to forget the extraordinary journey that computing has taken to reach its current state. While contemporary tech figures like Steve Jobs, Bill Gates, and Mark Zuckerberg grab the spotlight, many of the true pioneers of computing remain largely unsung. In this blog post, we look back through history to celebrate those visionary minds who laid the foundation for the digital revolution we enjoy today.

Who is considered the father of computing?

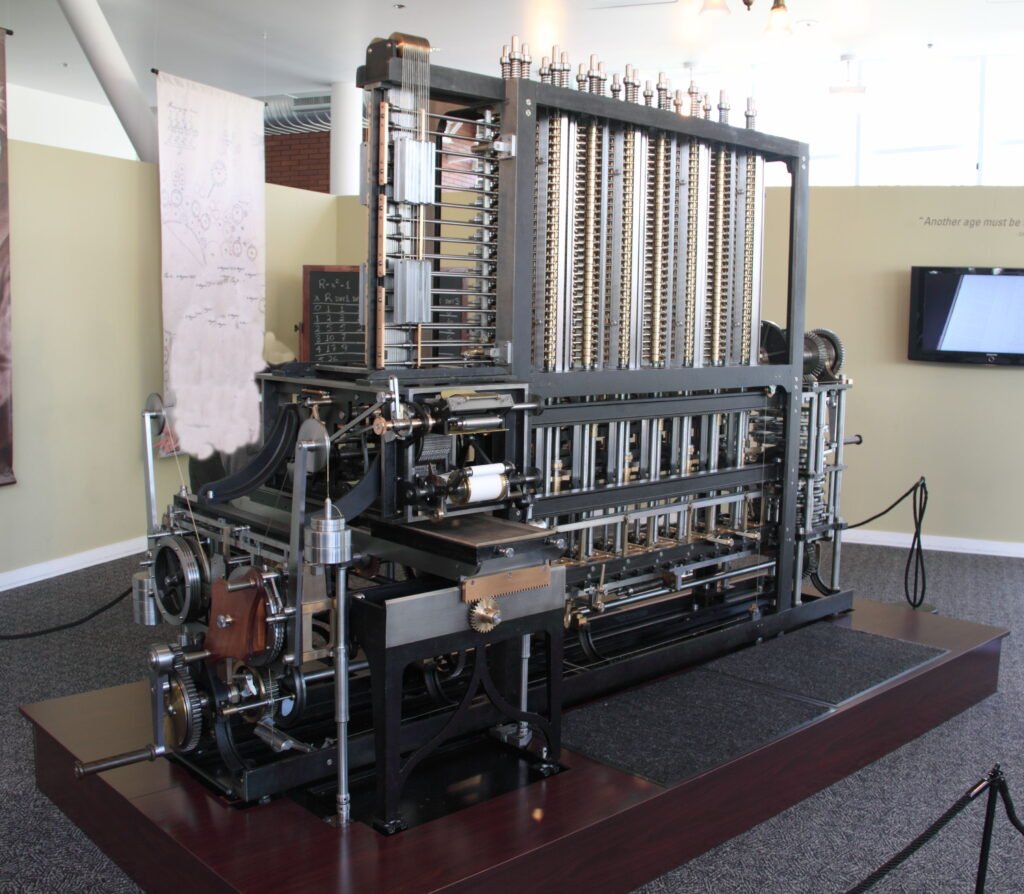

The title “father of computing” is often attributed to Charles Babbage, an English mathematician and inventor. He is renowned for designing the Analytical Engine, conceived in the mid-1830s, a mechanical, general-purpose computing machine whose design anticipated the structure of modern computers. Although the Analytical Engine was never completed during Babbage’s lifetime, owing to a lack of funding and the limits of precision engineering at the time, his ideas and pioneering work in computation earned him the honorific “The Father of the Computer.” Babbage’s contributions significantly influenced the development of computer science and computing technology as we know it today.

Who are the three fathers of AI?

The field of artificial intelligence (AI) has seen contributions from numerous pioneers over the years. Still, three individuals are often referred to as the “fathers of AI” due to their significant early work in the field:

Alan Turing

Alan Turing, a British mathematician and computer scientist, is widely recognized for his pioneering work in theoretical computer science and AI. His concept of the Turing machine, introduced in a 1936 paper, laid the foundation for the academic study of computation. In his 1950 paper “Computing Machinery and Intelligence,” he asked whether machines can think and proposed the imitation game, now known as the Turing test, which shaped the field’s early development.

John McCarthy

John McCarthy is often referred to as the “father of AI” because of his pivotal role in the field’s development. He coined the term “artificial intelligence” in the 1955 proposal for the Dartmouth Workshop, which he co-organized in the summer of 1956 to encourage researchers to explore the creation of intelligent machines. McCarthy also developed the Lisp programming language in the late 1950s, and it became a mainstay of AI research.

Marvin Minsky

Marvin Minsky was another influential figure in the early days of AI research. He co-founded the MIT Artificial Intelligence Laboratory and made significant contributions to computer vision and robotics. His work laid the groundwork for the development of AI systems capable of perception and learning.

These three individuals played crucial roles in shaping the field of AI and setting the stage for the subsequent evolution of artificial intelligence research. It’s important to note that many other researchers and contributors have made significant advancements in AI since its inception.

Who invented the first computer?

Charles Babbage, an English mathematician and inventor, designed pioneering mechanical computing machines in the 19th century. His “Difference Engine” and “Analytical Engine” laid the foundation for modern computing. The Difference Engine was designed to tabulate polynomial functions using the method of finite differences, while the Analytical Engine was a general-purpose mechanical computer with features resembling today’s computers, including a “mill” that performed arithmetic (comparable to an arithmetic logic unit) and a “store” that held numbers (comparable to memory). Ada Lovelace, Babbage’s collaborator, published what is widely regarded as the first computer program for the Analytical Engine. Babbage’s visionary designs and concepts set the stage for the development of modern computers, making him a foundational figure in computing history.
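To make the Difference Engine’s idea of calculating polynomial functions concrete, here is a minimal Python sketch of the method of finite differences, the technique the engine mechanized. The function names and the example polynomial are illustrative assumptions, not anything taken from Babbage’s designs. The point is that once a few starting values are known, every further value comes from additions alone.

```python
def initial_differences(values):
    """Return the leading entry of each difference row for the seed values."""
    column = []
    row = list(values)
    while row:
        column.append(row[0])
        # Next row holds the differences between neighbouring entries.
        row = [b - a for a, b in zip(row, row[1:])]
    return column


def tabulate(column, count):
    """Produce `count` successive polynomial values using additions only."""
    state = list(column)
    results = []
    for _ in range(count):
        results.append(state[0])
        # Each register absorbs the (not yet updated) register to its right.
        for i in range(len(state) - 1):
            state[i] += state[i + 1]
    return results


# Hypothetical example polynomial: p(x) = x^2 + x + 41.
# A degree-2 polynomial needs three seed values to fix its differences.
seed = [x * x + x + 41 for x in range(3)]
table = tabulate(initial_differences(seed), 6)
print(table)  # [41, 43, 47, 53, 61, 71]
```

No multiplication happens inside `tabulate`, which is why a machine built from adding wheels could, in principle, print whole mathematical tables.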

Ancient Abaci and the Birth of Calculating Machines

Our journey begins in the distant past, where early civilizations sought ingenious ways to tackle complex calculations. The abacus, an unassuming yet revolutionary counting device whose earliest forms are thought to date back to Mesopotamia in the third millennium BCE, played a pivotal role.

The Abacus’s Significance

The abacus represented a monumental leap in human history, enabling the rapid processing of numbers and democratizing complex mathematics. Its universal appeal across cultures underscores the timeless need for computational tools. This simple yet ingenious device allowed ancient mathematicians to perform arithmetic operations with remarkable efficiency. In a world where every calculation was done by hand, the abacus transformed mathematics, commerce, engineering, and science, leaving an enduring legacy in computing.

Charles Babbage – The Unsung Father of Computing

Fast-forward to the 19th century, and we encounter Charles Babbage, an unsung hero often overshadowed by more modern tech luminaries.

The Analytical Engine

Charles Babbage’s Analytical Engine, conceived in the mid-1830s, stands as a mechanical marvel that laid the groundwork for today’s computers. Its design featured a “mill” for arithmetic and a “store” for holding numbers, anticipating the arithmetic logic unit (ALU) and memory that are now fundamental to computing.

Ada Lovelace – The World’s First Computer Programmer

While Charles Babbage’s work was groundbreaking, it was Ada Lovelace, a mathematician and writer, who infused it with visionary potential. Lovelace’s notes on the Analytical Engine, published in 1843, included what is widely regarded as the first computer program, a method for computing Bernoulli numbers, earning her the title of the first computer programmer.

Charles Babbage’s vision, embodied in the Analytical Engine, represented a pioneering leap towards modern computing. His innovative ideas sowed the seeds of the digital revolution we now enjoy. Through his mechanical marvel, Babbage unwittingly paved the way for a future where machines would compute, process, and change the world as we know it.

Alan Turing – The Codebreaker and Pioneer of Theoretical Computing

As we enter the 20th century, we encounter Alan Turing, an unsung hero whose contributions to computing transcend generations.

Turing Machines

Turing’s legacy is anchored in the Turing machine, a theoretical model he introduced in 1936: a device that reads and writes symbols on an unbounded tape, one cell at a time, according to a finite table of rules. This construct laid the foundation for studying algorithms and computability, and it continues to shape modern computer science, influencing everything from software development to artificial intelligence.
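For readers who want to see how little machinery a Turing machine needs, here is a small Python sketch of a simulator. It is an illustration under stated assumptions rather than Turing’s own formulation: the rule-table format, the state names, and the example machine (which adds one to a binary number) are all hypothetical choices made for this post.

```python
from collections import defaultdict

BLANK = "_"


def run_turing_machine(rules, tape, state="start", halt="halt", max_steps=1000):
    """Run a rule table on a tape and return the non-blank tape contents."""
    # The tape is unbounded in both directions; unseen cells read as blank.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        # Look up what to write, where to move, and which state comes next.
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    used = [i for i, symbol in cells.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


# Hypothetical example machine: add one to a binary number.
# It scans right to the end of the input, then carries back to the left.
INCREMENT = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", BLANK): ("1", "L", "halt"),
}

print(run_turing_machine(INCREMENT, "1011"))  # 1100
```

The whole “program” is the six-line rule table; the simulator itself never changes, which is the essence of the model.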

Codebreaking During World War II

Turing’s critical role in deciphering the German Enigma code during World War II stands as a testament to the practical applications of computing. His efforts played a vital role in the Allied victory and underscored the transformative potential of computational thinking on the world stage.

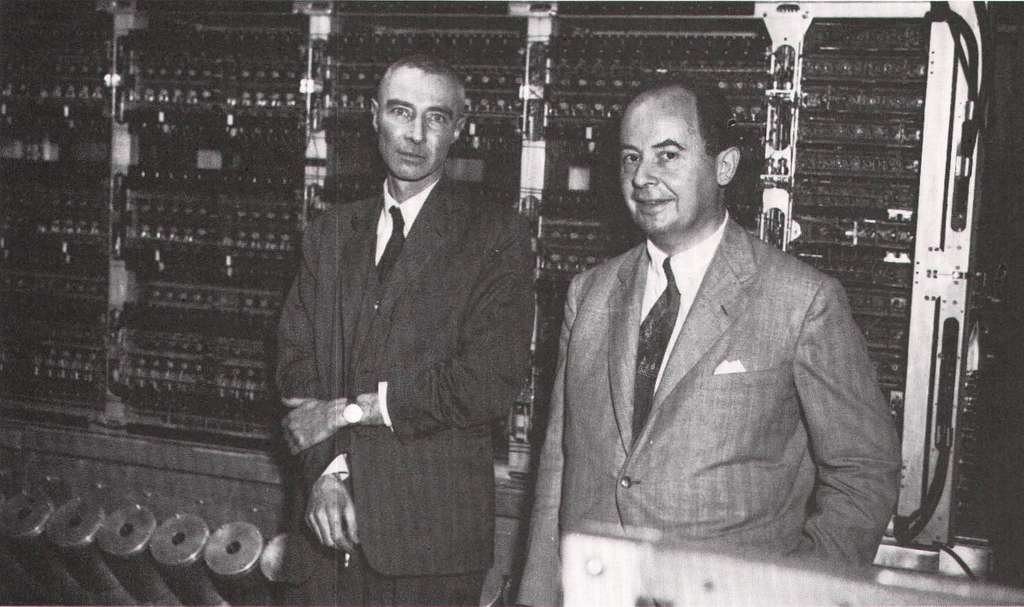

John von Neumann – Architect of the Modern Computer

The mid-20th century introduces us to John von Neumann, an unsung luminary whose work has left an indelible mark on modern computing.

Von Neumann Architecture

The von Neumann architecture, described by John von Neumann in his 1945 “First Draft of a Report on the EDVAC,” transformed the computing landscape. Its central idea is the stored program: instructions and data are held together in memory, and a separate processing unit fetches each instruction from that memory and executes it. Because a program is itself just data in memory, a computer can take on a new task by loading a new program rather than by being rewired, which made machines far more versatile. Today, this design continues to serve as the basis of most contemporary computers, underpinning their ability to execute complex tasks and store vast amounts of data with remarkable speed and precision.
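The idea of keeping data and instructions in memory is easier to appreciate with a toy example. The Python sketch below simulates a tiny machine with a made-up four-instruction set; the opcodes, the memory layout, and the `run` function are assumptions invented for illustration and do not correspond to any real computer. What it does show faithfully is the fetch, decode, execute cycle working on a single memory that holds both the program and its data.

```python
# Hypothetical opcodes for a toy machine (not a real instruction set).
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory):
    """Execute the program stored in `memory` and return the final memory."""
    memory = list(memory)  # work on a copy so the caller's list is untouched
    pc = 0                 # program counter: address of the next instruction
    acc = 0                # accumulator: the processor's single register
    while True:
        # Fetch: each instruction occupies two cells, opcode then operand.
        opcode, operand = memory[pc], memory[pc + 1]
        pc += 2
        # Decode and execute.
        if opcode == HALT:
            return memory
        elif opcode == LOAD:
            acc = memory[operand]
        elif opcode == ADD:
            acc += memory[operand]
        elif opcode == STORE:
            memory[operand] = acc
        else:
            raise ValueError(f"unknown opcode {opcode}")


# One memory holds both the program (addresses 0-7) and its data (8-10).
program = [
    LOAD, 8,    # acc = memory[8]
    ADD, 9,     # acc += memory[9]
    STORE, 10,  # memory[10] = acc
    HALT, 0,
    2, 3, 0,    # data: two inputs and a slot for the result
]

print(run(program)[10])  # 5
```

Swapping in a different list of numbers gives the same machine a different job, with no change to `run`.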

The Birth of the Microchip

As we navigate through history, we reach a pivotal moment with the invention of the microchip, a tiny yet monumental leap in computing technology.

Jack Kilby and Robert Noyce

In the late 1950s, two brilliant minds, Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor, independently pioneered the integrated circuit, more commonly known as the microchip. This transformative innovation forever altered the computing landscape by allowing many electronic components to be fabricated together on a single piece of semiconductor. The advent of the microchip was a watershed moment, paving the way for smaller, more powerful computers that would become ubiquitous in our daily lives and marking the dawn of a new era in computing.

The Personal Computer Revolution

The 1970s ushered in the personal computer revolution, led by a cadre of engineers and entrepreneurs.

Steve Jobs and Steve Wozniak

Apple’s co-founders, Steve Jobs and Steve Wozniak, introduced the Apple I and Apple II, igniting the personal computing revolution and forever changing the way we interact with technology.

Bill Gates and Microsoft

Bill Gates, alongside Paul Allen, co-founded Microsoft in 1975. The company supplied the MS-DOS operating system for the IBM PC in 1981, adapting 86-DOS, which it had acquired from Seattle Computer Products. MS-DOS later served as the base for early versions of Windows and played a pivotal role in democratizing personal computing. Gates recognized personal computers’ potential to transform how we work and communicate, and his commitment to making computing accessible to all set the stage for Microsoft’s iconic success story.

The Microsoft Empire Expands

Throughout the 1980s and 1990s, Bill Gates continued to drive Microsoft’s expansion. Windows, which began as a graphical user interface built on top of MS-DOS, became the dominant operating system, allowing millions of users to navigate their computers easily. Microsoft Office, a productivity software suite, further solidified the company’s influence in home and business computing. Gates’ strategic vision and keen business acumen positioned Microsoft as a global tech powerhouse.

Championing Philanthropy

In the early 21st century, Bill Gates shifted his focus from Microsoft to philanthropy. He established the Bill & Melinda Gates Foundation, one of the world’s largest charitable organizations, along with his then-wife Melinda. Their mission extended beyond computing, aiming to improve global healthcare, education, and access to information. Gates’ commitment to using technology for the betterment of humanity demonstrates that his influence in computing extends far beyond business success.

Legacy and Influence

Bill Gates’ impact on the personal computer revolution is immeasurable. His vision and dedication democratized computing, making it an integral part of our daily lives. From the early days of Microsoft to his philanthropic endeavors, Gates’ legacy continues to shape technology and its role in addressing some of society’s most pressing challenges.

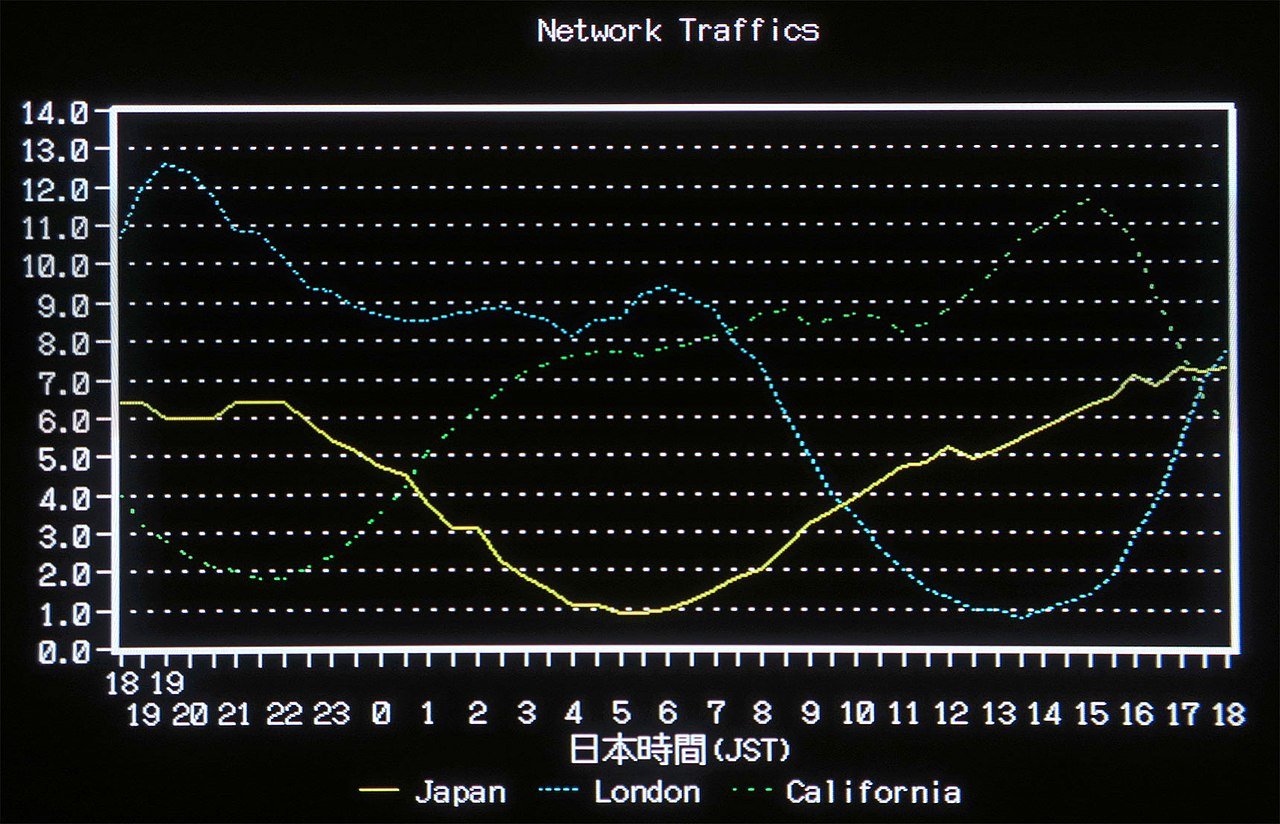

The Internet Age

Our journey through computing history lands us in the Internet age, where information and communication technologies reign supreme.

Tim Berners-Lee and the World Wide Web

Tim Berners-Lee’s groundbreaking invention, the World Wide Web, transformed the Internet into a dynamic global platform for communication and commerce and revolutionized how information is accessed and shared. His visionary contribution opened up new avenues for collaboration, knowledge dissemination, and business opportunities, shaping the digital landscape we rely on today.

The Digital Age and Beyond

Today, we inhabit the digital age, surrounded by many computing devices, from smartphones to supercomputers. Charles Babbage, the visionary who conceptualized the Analytical Engine, plays a crucial role in this narrative. The unsung heroes of computing, like Babbage, have sculpted this remarkable journey.

The Impact of Artificial Intelligence

Artificial intelligence, pioneered by Alan Turing and John McCarthy, continues to push the boundaries of computing, from natural language processing to self-driving cars. The relentless pursuit of intelligent machines echoes the spirit of innovation embodied by figures like Babbage, who laid the groundwork for this digital evolution.

Quantum Computing on the Horizon

Quantum computing, first proposed in the 1980s by visionaries like Richard Feynman and David Deutsch, promises to reshape computing, offering approaches to problems that are intractable for classical computers. Just as Babbage’s vision of the Analytical Engine heralded a new era, quantum computing holds the potential to usher in yet another transformative chapter in the ever-advancing world of computation.

Our journey through computing history reveals that the true fathers of computing were not just individuals but a collective of brilliant minds spanning centuries. From the humble abacus to the Internet age, each milestone was built upon the innovations of those who came before.

To fully appreciate the astounding computing power at our fingertips today, we must acknowledge the visionary pioneers like Babbage, Turing, and von Neumann, who laid the foundation for the digital revolution. Their indomitable spirit and unparalleled contributions will continue to inspire generations of innovators in the ever-evolving realm of computing.