In the ever-evolving landscape of Artificial Intelligence (AI), one of the most captivating and transformative fields is Natural Language Processing (NLP). As AI continues to reshape industries and redefine human-computer interactions, NLP stands as a cornerstone technology, enabling machines to comprehend, interpret, and generate human language.

NLP sits at the intersection of computer science, linguistics, and AI. Its primary objective is to equip machines to understand and work with human language as people actually speak and write it, rather than only with the rigid, formally defined syntax of programming languages. NLP seeks to bridge the gap between human communication and computational capabilities, making it possible for AI systems to engage with users in a more human-like and meaningful manner.

The history of NLP is a testament to the relentless pursuit of unlocking the secrets of human language through technology. From its nascent beginnings in the 1950s, characterized by early attempts at language translation and rule-based systems, NLP has undergone a remarkable journey. It transitioned from rule-based approaches in the 1960s and 1970s to statistical methods in the 1980s and 1990s, laying the groundwork for machine learning techniques that gained prominence in the 2000s.

In recent years, NLP has witnessed an unprecedented revolution driven by the advent of deep learning and neural networks. Models like BERT and GPT-3 have achieved astounding feats in language understanding, pushing the boundaries of what AI can accomplish in areas such as sentiment analysis, question answering, and language translation.

This journey through the history of NLP and its convergence with AI showcases human ingenuity and our drive to improve how we work with technology. It is a journey that continues to unfold, promising a future where human-computer interactions are more seamless and natural than ever before. In this exploration of NLP’s evolution, we delve into its historical milestones, technological breakthroughs, and the transformative impact it has had on various aspects of our lives.


Who is the father of NLP in AI?

The title of “father of NLP in AI” is often attributed to several individuals who made significant contributions to the field. While it’s challenging to pinpoint a single person as the sole father of NLP, there are two prominent figures who are often recognized for their pioneering work in the early days of NLP:

Alan Turing

Alan Turing, a British mathematician, computer scientist, and logician, is a foundational figure in the development of AI and NLP. In his 1950 paper “Computing Machinery and Intelligence,” Turing proposed the “imitation game,” now widely known as the “Turing Test,” a concept that laid the groundwork for thinking about machine intelligence and the ability of machines to understand and generate human language. Although Turing’s work was largely theoretical, his ideas about learning machines and language processing were groundbreaking.

Noam Chomsky

Noam Chomsky, an American linguist, cognitive scientist, and philosopher, contributed significantly to the understanding of language and its formal structure. His work on transformational grammar and the Chomsky hierarchy of formal grammars provided a theoretical framework that influenced the development of early NLP systems. Chomsky’s ideas on the generative grammar of languages played a crucial role in shaping the field of NLP.

It’s important to note that NLP is a multidisciplinary field that evolved over time with contributions from numerous researchers and scientists. While Turing and Chomsky are often acknowledged for their foundational ideas, NLP’s development has been a collaborative effort involving many individuals and teams who have collectively advanced our understanding of human language and its interaction with AI systems.

What is the theory behind NLP?

The theory behind Natural Language Processing (NLP) is grounded in the understanding of human language and the development of computational models and algorithms to enable machines to interact with and manipulate natural language effectively. At its core, NLP draws from linguistics, computer science, and artificial intelligence (AI) to bridge the gap between human communication and machine comprehension.

Linguistic Theories

NLP builds upon linguistic theories that describe the structure, syntax, semantics, and pragmatics of human language. These theories help NLP systems parse sentences, identify parts of speech, and extract meaning from text, allowing machines to understand the nuances of language.

Computational Linguistics

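Computational linguistics, the closely related discipline from which NLP largely grew and with which it overlaps heavily, focuses on creating algorithms and models to process and analyze language. Techniques such as parsing (recovering a sentence’s grammatical structure), stemming (reducing related word forms such as “parsed” and “parsing” to a common stem), and named entity recognition (finding the names of people, places, and organizations) are employed to break down and analyze text.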
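As a rough illustration of tokenization and stemming, the Python sketch below lowercases text, splits it into word tokens, and strips one common English suffix per word. The suffix list, the minimum-stem-length rule, and the function names are assumptions made for this example; production stemmers such as the Porter stemmer apply many more ordered, condition-checked rules.

```python
import re

# Illustrative suffix list (an assumption for this sketch), longest first so
# that longer suffixes are tried before shorter ones like "s".
SUFFIXES = ("ational", "ness", "ing", "ers", "ed", "ly", "es", "s")

def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def naive_stem(word, min_stem=3):
    """Strip the first matching suffix, keeping at least `min_stem` letters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word

if __name__ == "__main__":
    words = tokenize("The parsers parsed sentences quickly.")
    print([naive_stem(w) for w in words])  # ['the', 'pars', 'pars', 'sentenc', 'quick']
```

Stems like “sentenc” are not dictionary words; a stemmer only needs related forms (here “parsers” and “parsed”) to collapse to the same string. A rule list this crude also over-strips some words, which is why real stemmers add conditions to each rule.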

Machine Learning

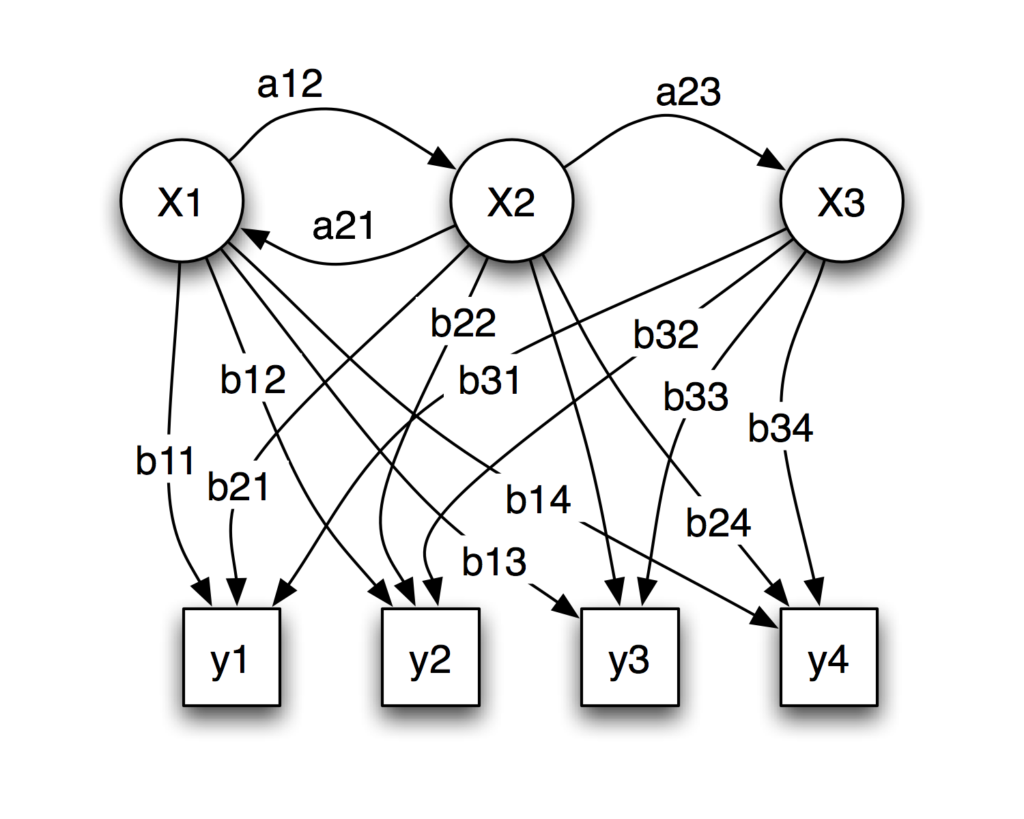

Machine learning plays a crucial role in NLP by enabling computers to learn patterns and associations in language data. Supervised and unsupervised learning techniques are used for tasks like text classification, sentiment analysis, and machine translation.
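As a small illustration of supervised text classification, the sketch below trains a multinomial Naive Bayes classifier with add-one (Laplace) smoothing. The training sentences and the “pos”/“neg” labels are invented toy examples, and Naive Bayes is chosen only because it fits in a few lines; it is one of many classifiers used for this kind of task.

```python
import math
from collections import Counter, defaultdict

def train_nb(docs):
    """docs: list of (tokens, label). Returns label priors, per-label word counts, vocab."""
    label_counts = Counter(label for _, label in docs)
    word_counts = defaultdict(Counter)
    vocab = set()
    for tokens, label in docs:
        word_counts[label].update(tokens)
        vocab.update(tokens)
    priors = {label: count / len(docs) for label, count in label_counts.items()}
    return priors, word_counts, vocab

def predict_nb(tokens, priors, word_counts, vocab):
    """Return the label maximizing log P(label) + sum of log P(word | label)."""
    best_label, best_score = None, -math.inf
    for label, prior in priors.items():
        counts = word_counts[label]
        denom = sum(counts.values()) + len(vocab)  # add-one smoothing denominator
        score = math.log(prior)
        for tok in tokens:
            if tok in vocab:  # ignore words never seen in training
                score += math.log((counts[tok] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

if __name__ == "__main__":
    train = [
        ("great movie loved it".split(), "pos"),
        ("wonderful acting great story".split(), "pos"),
        ("terrible plot hated it".split(), "neg"),
        ("boring and terrible acting".split(), "neg"),
    ]
    model = train_nb(train)
    print(predict_nb("loved the great story".split(), *model))  # pos
```

The add-one smoothing matters: without it, a single word that never appeared with a label would drive that label’s probability to zero regardless of the other evidence.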

Deep Learning

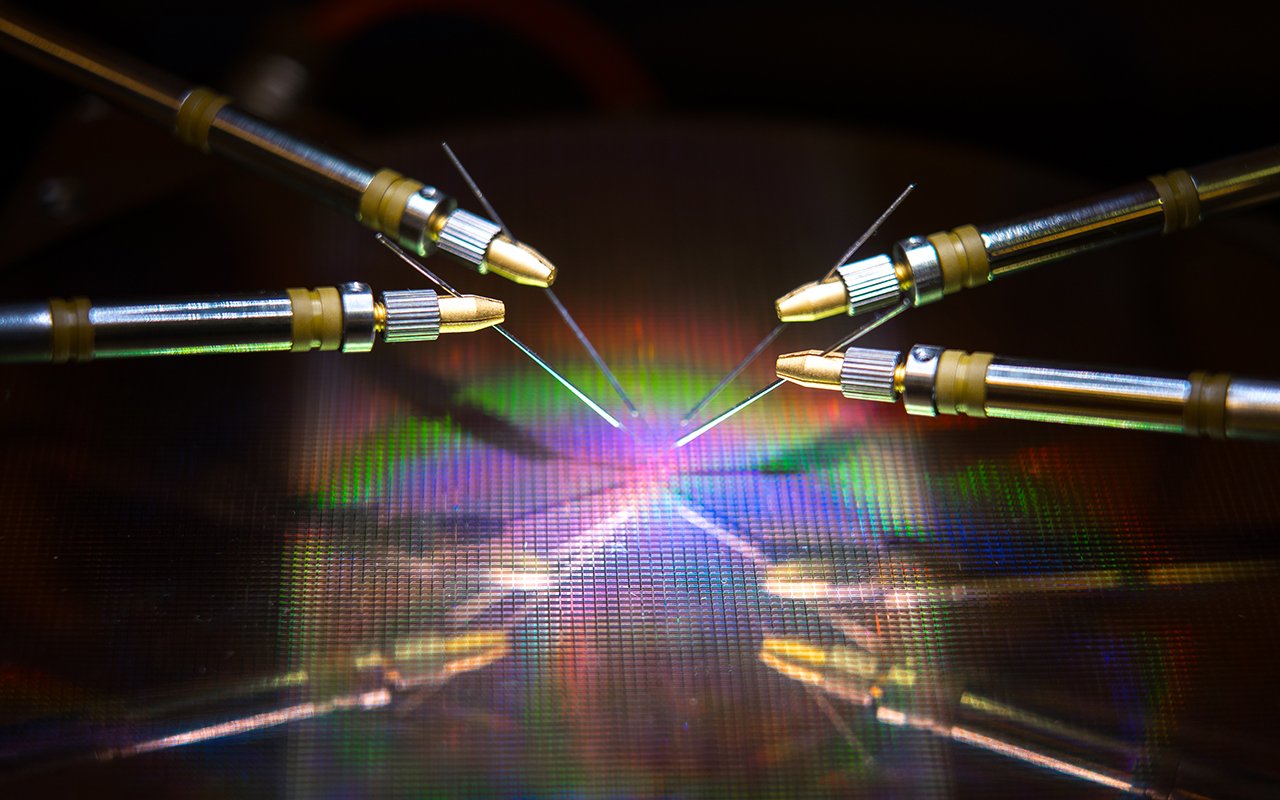

Deep learning, which uses neural networks with many layers, has revolutionized NLP. Architectures such as the Transformer, built around a mechanism called self-attention, have proven highly effective in capturing contextual information and relationships between words in large text corpora, enabling tasks such as language generation and understanding to reach unprecedented levels of performance.
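The core operation inside a Transformer is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V: each query is compared with every key, the scores become weights, and the output is a weighted average of the values. The pure-Python sketch below computes it for a single head, without masking or the learned projection matrices a real model uses; the tiny Q, K, and V matrices are arbitrary numbers chosen for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Single-head scaled dot-product attention on lists of row vectors.

    Q is n_q x d_k, K is n_k x d_k, V is n_k x d_v; the result is n_q x d_v.
    """
    d_k = len(K[0])
    d_v = len(V[0])
    output = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # attention weights sum to 1
        # Output row: attention-weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(d_v)])
    return output

if __name__ == "__main__":
    Q = [[1.0, 0.0]]
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[10.0, 0.0], [0.0, 10.0]]
    print(attention(Q, K, V))
```

Because the query points in the same direction as the first key, the first value vector receives the larger weight (about 0.67), so the output is roughly [6.7, 3.3].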

Probability and Statistics

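Probabilistic models such as Hidden Markov Models (HMMs) and statistical language models are used for various NLP tasks, including speech recognition and machine translation. An HMM estimates the most likely sequence of hidden states (for example, part-of-speech tags) behind an observed sequence of words or sounds, while a statistical language model estimates how likely a given word sequence is, helping a system prefer fluent, plausible outputs over unlikely ones.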
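To show how a statistical language model scores a word sequence, here is a bigram model with add-one smoothing. Under the bigram assumption, P(w_1 ... w_n) is approximated by the product of P(w_i | w_{i-1}); the toy corpus, the `<s>`/`</s>` sentence-boundary markers, and the function names are assumptions of this sketch.

```python
import math
from collections import Counter

def train_bigram(sentences):
    """Count histories and bigrams over sentences padded with <s> and </s>."""
    history, bigrams = Counter(), Counter()
    for sent in sentences:
        tokens = ["<s>"] + sent.split() + ["</s>"]
        history.update(tokens[:-1])               # every token that precedes another
        bigrams.update(zip(tokens, tokens[1:]))   # adjacent (previous, next) pairs
    # Words that can be predicted: the training vocabulary plus the end marker.
    vocab = {w for s in sentences for w in s.split()} | {"</s>"}
    return history, bigrams, vocab

def log_prob(sentence, history, bigrams, vocab):
    """Add-one smoothed log P(sentence) = sum of log P(w_i | w_{i-1})."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    V = len(vocab)
    return sum(
        math.log((bigrams[(prev, word)] + 1) / (history[prev] + V))
        for prev, word in zip(tokens, tokens[1:])
    )

if __name__ == "__main__":
    model = train_bigram(["the cat sat", "the dog sat", "a cat ran"])
    # A fluent word order should score higher than a scrambled one.
    print(log_prob("the cat sat", *model) > log_prob("sat cat the", *model))  # True
```

Smoothing gives unseen pairs such as (“sat”, “cat”) a small nonzero probability, so a scrambled sentence is judged unlikely rather than impossible.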

Semantics and Pragmatics

NLP models aim to understand not only the surface structure of language but also its underlying meaning. Semantic and pragmatic analysis allows NLP systems to infer intent, resolve ambiguities, and perform context-aware language processing.

In summary, the theory behind NLP combines linguistic insights, computational techniques, machine learning, and deep learning to create algorithms and models that empower machines to process, understand, and generate human language. It’s an interdisciplinary field that continues to evolve, bringing us closer to natural and effective human-computer communication.

What is the main goal of NLP?

The main goal of Natural Language Processing (NLP) is to bridge the gap between human communication and computer understanding. NLP is a multidisciplinary field at the intersection of computer science, artificial intelligence, and linguistics, with a primary objective to enable machines to comprehend, interpret, and generate human language in a manner that is both meaningful and contextually accurate.

At its core, NLP seeks to empower machines with the ability to understand the vast nuances of human language, encompassing diverse dialects, idiomatic expressions, and linguistic intricacies. This understanding goes beyond mere syntax and grammar; it includes grasping the semantics, pragmatics, and intent behind the words and sentences spoken or written by humans.

The ultimate aim of NLP is to create AI systems that can effectively communicate with humans in natural language, enhancing human-computer interactions across a wide spectrum of applications. This includes chatbots and virtual assistants that can provide instant customer support, language translation tools that break down language barriers, sentiment analysis to gauge public opinion, and even summarization algorithms that distill vast amounts of text into concise information.

Moreover, NLP plays a pivotal role in data analysis, information retrieval, and knowledge extraction from unstructured text data, making it an invaluable tool for businesses, researchers, and organizations seeking to harness the vast amounts of textual information available today.

The main goal of NLP is to empower machines with the capacity to understand and work with human language effectively, facilitating more natural and meaningful interactions between humans and computers, and unlocking new frontiers in AI-driven applications and data-driven decision-making processes.

History of NLP

The history of Natural Language Processing (NLP) is a fascinating journey that spans several decades and has witnessed significant advancements in technology and linguistic understanding. NLP is a field at the intersection of computer science, artificial intelligence, and linguistics, with the goal of enabling computers to understand, interpret, and generate human language. Here’s a detailed overview of the history of NLP:

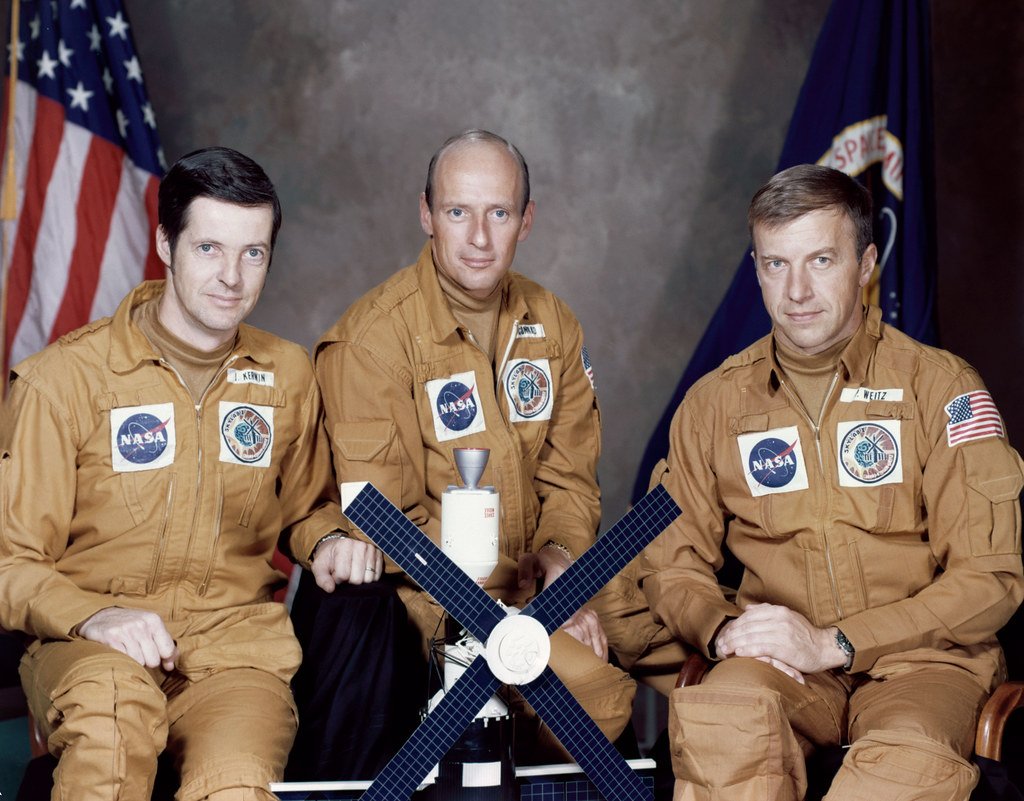

Early Beginnings (1950s-1960s)

The origins of Natural Language Processing (NLP) can be traced back to a pivotal moment in the mid-20th century when computer scientists and linguists embarked on an ambitious journey to make computers understand and interact with human language. This epochal exploration marked the birth of a field that would later revolutionize the way we communicate with machines.

In 1950, the British mathematician and computer scientist Alan Turing proposed what has since become a cornerstone concept in AI and NLP: the “Turing Test.” This visionary idea posited that a machine could be considered intelligent if it could hold a conversation so convincingly that a human evaluator could not distinguish it from another human participant. Turing’s test ignited a spark of curiosity and set the stage for decades of research and innovation in NLP.

Another significant milestone came in 1954 with the Georgetown-IBM experiment, a public demonstration in which a computer automatically translated more than sixty Russian sentences into English using a small vocabulary and a handful of hand-written grammar rules. This early demonstration of automated language translation, together with the research it inspired in the late 1950s and 1960s, laid the foundation for the subsequent evolution of NLP, propelling it from rudimentary translation tasks to the sophisticated language models and applications we see today.

NLP’s roots are firmly planted in the mid-20th century when visionaries like Alan Turing and dedicated researchers began to explore the untapped potential of computers to process and understand human language, ultimately setting the stage for the remarkable advancements and transformative impact of NLP in the decades that followed.

Rule-Based Approaches (1960s-1970s)

During the early days of Natural Language Processing (NLP), the field heavily depended on rule-based approaches. Linguistic experts painstakingly crafted grammatical rules and dictionaries tailored to specific languages. These rules governed how words and phrases could be structured and combined in sentences, forming the foundation of early NLP systems. While these rule-based systems had their limitations in handling the complexity and variability of natural language, they represented the first attempts to imbue computers with linguistic understanding.
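The flavor of these hand-crafted systems can be sketched with a tiny grammar and dictionary written directly as Python data, plus a recursive-descent recognizer that checks whether a sentence obeys the rules. The grammar, lexicon, and function names are hypothetical and far smaller than any historical system; the point is that every rule is written by hand rather than learned from data.

```python
# Hand-written lexicon: each word maps to its part of speech.
LEXICON = {
    "the": "Det", "a": "Det",
    "dog": "N", "cat": "N", "ball": "N",
    "chased": "V", "saw": "V", "slept": "V",
}

# Hand-written grammar rules: each category expands to one of several sequences.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V", "NP"], ["V"]],
}

def parse(symbol, words, pos):
    """Yield every position where `symbol` can end if it starts at `pos`."""
    if symbol not in GRAMMAR:  # a part-of-speech category: match exactly one word
        if pos < len(words) and LEXICON.get(words[pos]) == symbol:
            yield pos + 1
        return
    for expansion in GRAMMAR[symbol]:
        yield from parse_sequence(expansion, words, pos)

def parse_sequence(symbols, words, pos):
    """Yield end positions for matching a sequence of symbols in order."""
    if not symbols:
        yield pos
        return
    for mid in parse(symbols[0], words, pos):
        yield from parse_sequence(symbols[1:], words, mid)

def is_grammatical(sentence):
    """True if the whole sentence can be derived from the start symbol S."""
    words = sentence.lower().split()
    return any(end == len(words) for end in parse("S", words, 0))

if __name__ == "__main__":
    for s in ["The dog chased a cat", "The cat slept", "Dog the chased"]:
        print(s, "->", is_grammatical(s))
```

This toy grammar also accepts “the cat slept the ball,” which hints at why rule-based systems struggled: covering real language requires ever more rules, restrictions, and exceptions.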

A significant milestone in the history of early AI and symbolic reasoning was the “General Problem Solver” (GPS), developed between 1957 and 1959 by Allen Newell, J. C. Shaw, and Herbert A. Simon. GPS was a computer program designed to solve problems stated in a formal, symbolic representation, working toward a goal through means-ends analysis. Although it predates this period and was not an NLP system, it marked a critical step toward AI systems that could reason and solve problems using symbolic representations, a concept that would later influence NLP research.

In the 1970s, Roger Schank’s pioneering work on natural language understanding, notably the “Script Theory” he developed with Robert Abelson, helped lay the groundwork for modeling the contextual meaning of language. Script Theory holds that human understanding of language goes beyond syntax and grammar: it draws on knowledge of stereotyped situations and the ability to infer what is left unsaid. A “restaurant script,” for example, lets a reader infer that someone who ordered a meal and then left probably ate and paid, even if the story never says so. This idea had a profound impact on NLP, paving the way for systems that could grasp the deeper, situational meaning of words and phrases.

These early developments in NLP, although rudimentary by today’s standards, were essential in shaping the field’s trajectory. They demonstrated the early fascination with enabling computers to process and interpret human language, setting the stage for the more sophisticated and data-driven approaches that would emerge in subsequent decades.

Statistical NLP (1980s-1990s)

The late 1980s marked a significant turning point in the field of Natural Language Processing (NLP) as it witnessed a paradigm shift toward statistical methods. This shift represented a departure from the rule-based approaches that dominated the earlier decades. Statistical NLP brought a data-driven perspective to language understanding and paved the way for more accurate and context-aware language processing.

During this period, researchers began to explore probabilistic models, which utilized statistical probabilities to make sense of language. Prominent among these models were Hidden Markov Models (HMMs) and n-gram language models. HMMs were particularly influential in speech recognition and part-of-speech tagging, allowing machines to recognize patterns and transitions in speech signals and text.
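HMM part-of-speech tagging treats tags as hidden states and words as observations, and the most likely tag sequence is found with the Viterbi algorithm. In the sketch below, the three-tag set and all start, transition, and emission probabilities are made-up toy numbers rather than estimates from a real corpus; the word “walks” is deliberately ambiguous between noun and verb so that context has to decide.

```python
import math

# Hypothetical toy HMM: these probabilities are invented for illustration only.
TAGS = ["DET", "NOUN", "VERB"]
START = {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1}
TRANS = {
    "DET": {"DET": 0.05, "NOUN": 0.9, "VERB": 0.05},
    "NOUN": {"DET": 0.1, "NOUN": 0.2, "VERB": 0.7},
    "VERB": {"DET": 0.6, "NOUN": 0.3, "VERB": 0.1},
}
EMIT = {
    "DET": {"the": 0.7, "a": 0.3},
    "NOUN": {"dog": 0.5, "walks": 0.1, "park": 0.4},
    "VERB": {"walks": 0.5, "runs": 0.5},
}

def viterbi(words, tags=TAGS, start=START, trans=TRANS, emit=EMIT, floor=1e-12):
    """Return the most probable tag sequence for a non-empty list of words.

    Works in log space; unseen words or transitions get probability `floor`.
    """
    def lp(table, key):
        return math.log(table.get(key, floor))

    # best[i][t] = (best log-probability of a path ending in tag t at word i, previous tag)
    best = [{t: (lp(start, t) + lp(emit[t], words[0]), None) for t in tags}]
    for i in range(1, len(words)):
        column = {}
        for t in tags:
            prev, score = max(
                ((p, best[i - 1][p][0] + lp(trans[p], t)) for p in tags),
                key=lambda pair: pair[1],
            )
            column[t] = (score + lp(emit[t], words[i]), prev)
        best.append(column)

    # Follow back-pointers from the highest-scoring final tag.
    tag = max(tags, key=lambda t: best[-1][t][0])
    path = [tag]
    for i in range(len(words) - 1, 0, -1):
        tag = best[i][tag][1]
        path.append(tag)
    return path[::-1]

if __name__ == "__main__":
    print(viterbi("the dog walks".split()))  # ['DET', 'NOUN', 'VERB']
    print(viterbi("the walks".split()))      # ['DET', 'NOUN']
```

Dynamic programming keeps only the best path into each tag at each position, so the cost grows linearly with sentence length instead of exponentially with the number of possible tag sequences.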

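One landmark development in the late 1980s was IBM’s pioneering work on statistical machine translation (SMT). Instead of relying on hand-written translation rules, IBM’s system learned from large collections of parallel text, combining a translation model, which estimated how words in one language correspond to words in the other, with a language model, which estimated how likely a word sequence is in the target language. This approach was instrumental in improving the quality of automated language translation, producing more accurate and contextually relevant output.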
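IBM’s statistical approach is usually summarized by the noisy-channel formulation. To translate a foreign sentence f into English, the system searches for the English sentence ê that is most probable given f. Applying Bayes’ rule and dropping P(f), which is the same for every candidate, leaves the product of a language model P(e), which scores how fluent a candidate English sentence is, and a translation model P(f | e), which scores how well that candidate accounts for the foreign sentence:

```latex
\hat{e} = \arg\max_{e} P(e \mid f)
        = \arg\max_{e} \frac{P(e)\, P(f \mid e)}{P(f)}
        = \arg\max_{e} \underbrace{P(e)}_{\text{language model}} \; \underbrace{P(f \mid e)}_{\text{translation model}}
```

Splitting the problem this way lets each model be trained separately: the language model needs only monolingual English text, while the translation model is learned from parallel sentence pairs.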

The 1990s continued to build on the statistical foundation laid in the previous decade. Part-of-speech tagging gained prominence, enabling machines to assign grammatical categories to words in a sentence, and facilitating syntactic analysis. Additionally, statistical methods were increasingly applied to syntactic and semantic parsing, enabling computers to understand the structure and meaning of sentences.

Overall, the 1980s and 1990s were pivotal decades for NLP, ushering in an era of statistical approaches that significantly improved the field’s ability to handle the complexity and variability of natural language, setting the stage for further advancements in the years to come.

Machine Learning and Neural Networks (2000s-2010s)

The 2000s ushered in a new era for Natural Language Processing (NLP), marked by remarkable advancements in machine learning techniques that revitalized the field. Among these techniques were Support Vector Machines (SVMs) and Conditional Random Fields (CRFs), discriminative models that learned to predict labels directly from large sets of hand-engineered features. Researchers and practitioners began to realize the potential of these algorithms in various language-related applications, including information retrieval, sentiment analysis, and part-of-speech tagging.

However, it was in the early 2010s that NLP experienced a seismic shift with the ascendance of deep learning techniques, particularly neural networks. This period saw the emergence of word embeddings such as Word2Vec (2013) and GloVe (2014), which played a pivotal role in improving language modeling. These embeddings allowed words to be represented as dense vectors in continuous vector spaces, capturing semantic relationships between words based on their co-occurrence patterns in large corpora. This breakthrough in word representation fundamentally transformed how NLP systems understood and processed language.

In 2013, Google’s release of the Word2Vec model marked a watershed moment in NLP history. This model’s ability to capture intricate semantic associations between words without manual feature engineering opened new horizons for NLP researchers and developers. It laid the foundation for more sophisticated language models and paved the way for further innovation.
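Similarity between word embeddings is commonly measured with cosine similarity, and Word2Vec became well known for vector analogies such as king - man + woman landing near queen. The sketch below shows both operations in Python. The four-dimensional vectors are hypothetical, hand-picked so the analogy works; real Word2Vec or GloVe vectors are learned from large corpora and typically have a few hundred dimensions.

```python
import math

def cosine(u, v):
    """Cosine of the angle between vectors u and v (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy embeddings, hand-picked for illustration only.
EMBEDDINGS = {
    "king":  [0.8, 0.7, 0.1, 0.2],
    "queen": [0.8, 0.1, 0.7, 0.2],
    "man":   [0.2, 0.8, 0.1, 0.1],
    "woman": [0.2, 0.1, 0.8, 0.1],
    "apple": [0.1, 0.1, 0.1, 0.9],
}

def nearest(vec, exclude=()):
    """Return the vocabulary word whose embedding is most similar to `vec`."""
    return max((w for w in EMBEDDINGS if w not in exclude),
               key=lambda w: cosine(vec, EMBEDDINGS[w]))

if __name__ == "__main__":
    k, m, w = EMBEDDINGS["king"], EMBEDDINGS["man"], EMBEDDINGS["woman"]
    target = [a - b + c for a, b, c in zip(k, m, w)]  # king - man + woman
    print(nearest(target, exclude={"king", "man", "woman"}))  # queen
```

Cosine similarity ignores vector length and compares only direction, which is why it is the usual choice for comparing embeddings whose magnitudes vary with word frequency.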

Meanwhile, recurrent architectures such as Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks (the LSTM dates back to 1997) became the workhorses of neural NLP in the mid-2010s, and the Transformer architecture, introduced in 2017, gave rise to models such as BERT and GPT. These architectures represented major leaps in NLP capabilities, harnessing deep learning and attention mechanisms to tackle complex tasks, including machine translation, sentiment analysis, and question answering, with unprecedented accuracy and fluency.

The 2000s and beyond witnessed an NLP renaissance driven by machine learning and, later, deep learning techniques, which revolutionized how computers understand and generate human language, making NLP a dynamic and rapidly advancing field with far-reaching implications for technology, communication, and human-computer interaction.

Modern Era (2020s-Present)

The 2020s have marked a period of remarkable evolution and progress in the field of Natural Language Processing (NLP). One of the defining developments of this era has been the rise of large pre-trained language models. BERT (Bidirectional Encoder Representations from Transformers), released by Google in 2018, showed the power of pre-training on large text corpora and then fine-tuning for specific tasks, and GPT-3 (Generative Pre-trained Transformer 3), released by OpenAI in 2020, showed how far scaling such models could go. These models represent a paradigm shift in NLP, showcasing the power of deep learning and large-scale neural networks. They have outperformed previous NLP systems across many benchmarks and, on some of them, matched or exceeded reported human baselines.

GPT-3, with its 175 billion parameters, has demonstrated the ability to generate coherent and contextually relevant text across a wide range of domains. BERT, on the other hand, with its bidirectional contextual understanding, has improved the accuracy of tasks like sentiment analysis and question answering.

These advancements have unlocked a plethora of possibilities for practical applications. Chatbots and virtual assistants, powered by these models, are more conversational and context-aware than ever before. They can answer questions, provide recommendations, and engage in meaningful dialogue with users.

Moreover, the 2020s have witnessed the rise of Multimodal NLP, which combines text and image understanding. This development allows machines to process and generate content based on both textual and visual information. Applications of Multimodal NLP range from automatic captioning of images to more sophisticated tasks like visual question answering, where systems can answer questions about the content of images.

In essence, the continued evolution of NLP in the 2020s has not only pushed the boundaries of AI but has also brought us closer to the realization of highly capable, conversational AI systems that can seamlessly integrate with our daily lives, enhance user experiences, and contribute to a wide array of industries, from healthcare to entertainment.

Throughout its history, Natural Language Processing (NLP) has demonstrated an exceptional capacity for evolution and adaptation. Initially grounded in rule-based systems, where linguistic experts manually crafted intricate grammatical rules and dictionaries, NLP embarked on a journey of continuous innovation.

The shift to statistical models in the latter part of the 20th century represented a significant breakthrough, as it allowed NLP systems to learn patterns from vast amounts of data. Hidden Markov Models, n-gram language models, and probabilistic approaches began to shape the landscape of NLP.

However, the most transformative phase arrived with the advent of deep learning and neural network-based techniques in the 21st century. This marked a paradigm shift, as models like Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Transformers, including prominent ones like BERT and GPT, demonstrated unprecedented capabilities in language understanding and generation.

As NLP accelerates into the future, it holds the promise of even more sophisticated language capabilities. Ongoing research and development efforts are pushing the boundaries, addressing challenges like multilingualism, ambiguity, and context comprehension. NLP’s rapid evolution ensures it remains at the forefront of AI and language technology, poised to revolutionize communication, information retrieval, and data analysis in ways we can only begin to imagine.