Hard drives have come a long way since their humble beginnings. The history of hard drives spans nearly seven decades, evolving from room-sized spinning disks to tiny chips of flash memory. In that time, data storage technology has transformed dramatically – not just in capacity and speed, but in form and function. This journey from the first clunky disk drives to today’s lightning-fast solid-state storage is a fascinating story of innovation. In this comprehensive overview, we’ll explore the origin of the hard disk drive, the evolution of data storage over the years, major milestones on the data storage timeline, the shift from HDD to SSD, and what the future holds for storage technology.

Despite the technical nature of storage devices, this history is deeply tied to everyday life – after all, hard drives (both old and new) hold our documents, photos, and the digital world we rely on. Let’s dive into the timeline of how data storage got from there to here.


The Origin of Hard Disk Drives: IBM 305 RAMAC and the Birth of Storage

Every technology has to start somewhere. For hard disk drives (HDDs), the story begins in the 1950s. The very first hard disk drive was introduced by IBM in 1956 as part of the IBM 305 RAMAC computer system. RAMAC stood for Random Access Method of Accounting and Control, and it pioneered the idea of magnetic disk storage with random access (meaning data could be retrieved in any order, rather than sequentially as on tape).

The IBM 350 disk storage unit (circa 1956) being loaded onto an airplane via forklift. This first hard drive cabinet weighed over a ton and held only a few megabytes of data.

The IBM 305 RAMAC’s storage unit, known as the IBM 350 Disk File, is almost comical by today’s standards. It was the size of multiple refrigerators placed side by side, weighed over a ton, and stored a whopping 5 million characters. Because those were 6-bit characters, that works out to about 3.75 MB in today’s 8-bit bytes, though the capacity is commonly rounded to 5 MB. Inside this massive cabinet were fifty 24-inch spinning disks stacked on a spindle. These metal platters, coated with magnetic material, spun at 1,200 rotations per minute. A mechanical arm with read/write heads moved between the platters to read or write data on the disk surfaces.

This first hard drive was a marvel of its time. It introduced the world to the concept of random access storage – the ability to go directly to the piece of data you want, rather than winding through reels of tape. Businesses could suddenly retrieve records in seconds instead of hours. Despite storing only about as much data as a single MP3 song, the IBM RAMAC’s impact was huge. It revolutionized data storage and set the stage for everything that followed, proving that disks could be used for fast, direct-access storage.

It’s interesting to note that IBM didn’t sell the 305 RAMAC’s disk system outright – it was so expensive and novel that it was leased to companies (at roughly $3,200 per month in 1950s dollars!). The early hard drive technology was delicate and costly, but it solved a critical problem of the era: the need for real-time data access for accounting and business. This breakthrough marked the birth of hard disk drives and opened the door to continuous improvements in hard disk technology.

Evolution of Data Storage: Hard Disk Drives Through the Decades

From that first IBM disk system in 1956, hard disk drives evolved rapidly over the following decades. Each era saw improvements in how much data could be stored, how physically large or small drives were, and how fast they worked. Let’s take a tour through the decades to see the evolution of data storage and how HDDs grew from megabytes to terabytes:

1960s: Early Improvements and New Ideas

The 1960s saw hard drives remain primarily large, mainframe-bound devices, but with steady improvements. In 1961, IBM’s 1301 Disk Storage Unit introduced aerodynamically designed heads that “flew” on a thin cushion of air generated by the spinning platter itself, holding each read/write head a precise distance above the surface. This innovation reduced wear and remains a principle in modern HDDs (today’s heads “fly” just nanometers above the platter). Drive capacities were inching up: some drives by the mid-60s could store tens of megabytes, which was enormous at the time.

A major innovation came in 1962 with IBM’s introduction of the IBM 1311, the first removable hard drive. It used disk packs – a stack of platters that could be removed and swapped. Each pack held about 2 million characters (~2 MB). This concept allowed users to have multiple disk packs for a drive, offering something like an early version of removable storage (imagine swapping large, pizza-box-sized “cartridges” of disks to load different data).

By the late 1960s, other companies entered the HDD market. Hitachi and Toshiba produced their first drives in 1967, and Memorex introduced drives compatible with IBM systems in 1968. Competition was heating up, which would accelerate innovation in the years ahead. Drives were still huge and used in corporate or government computing centers, but they were slowly getting more efficient.

1970s: Smaller, Faster, and the “Winchester” Drive

In the 1970s, hard drives started to shrink in physical size and grow in capacity, setting the stage for use beyond just big iron mainframes. In 1970, a company called General Digital (soon renamed Western Digital in 1971) was founded – destined to become one of the top hard drive makers in later years.

The big breakthrough of the 1970s was IBM’s Winchester drive, introduced in 1973. Officially called the IBM 3340, this drive was code-named “Winchester” (after the famous rifle) because its designers initially targeted 30 MB of fixed storage plus 30 MB removable – a “30-30” design. The IBM 3340 Winchester ended up with a slightly different spec, but the name stuck. More importantly, it introduced key design features that became standard: low-mass lubricated heads and sealed drive assemblies. In other words, the platters and heads were sealed in a cleaner environment, and the heads would “land” on the platters safely when the disk stopped (instead of potentially crashing). This dramatically improved reliability. Winchester technology was so influential that for years people referred to hard drives as “Winchester disks,” and its sealed head-and-disk design is still the basis of hard drives today.

By the late 1970s, hard drives were still mostly found in big computers, but they were getting smaller in physical form. Around 1979, a pivotal shift occurred: a new company named Seagate Technology was co-founded by Alan Shugart (a former IBM engineer). Seagate and others were looking to bring hard drives to the burgeoning market of microcomputers (early personal computers). The stage was set for hard drives to leave the clean rooms of mainframes and enter the office, and eventually the home.

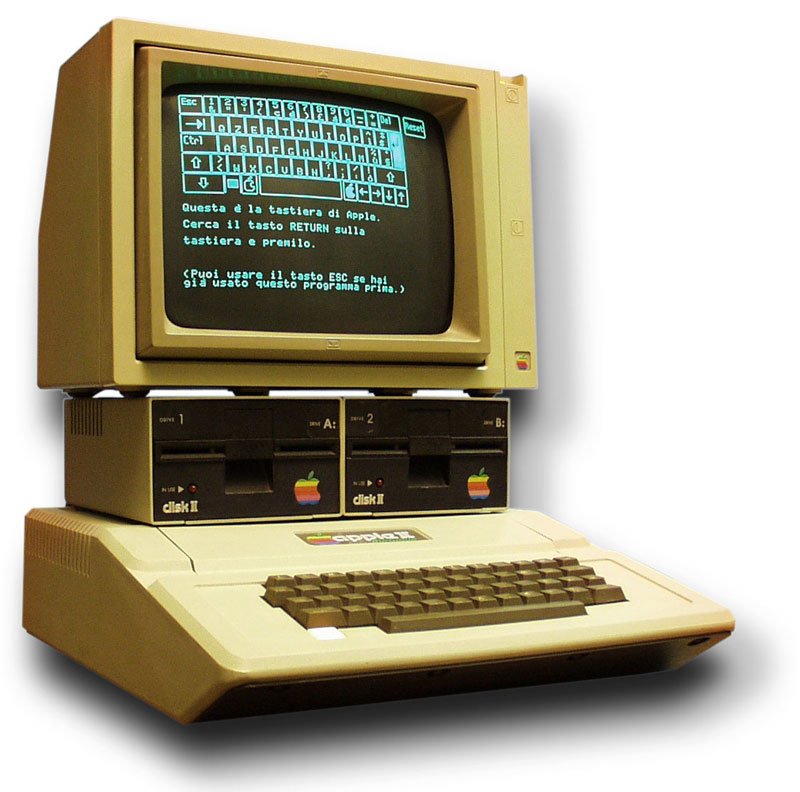

1980s: Hard Drives Enter the PC Era

The 1980s can be seen as the decade when hard drives truly entered the mainstream computing world. Early in the decade, a major milestone was reached: in 1980, IBM introduced the first hard drive to break the 1 GB barrier. The IBM 3380 disk system could store a then-astonishing 2.5 GB of data. However, like its predecessors, this was a refrigerator-sized machine weighing hundreds of kilograms, and its cost and size confined it to corporate datacenters.

Meanwhile, the personal computer revolution had started, and hard drives needed to adapt. In 1980, Seagate released the ST-506, the world’s first 5.25-inch hard disk drive, designed for microcomputers. This drive held 5 MB and was about the size of a thick book – tiny compared to IBM’s room-sized units, yet still large by today’s standards. The ST-506 and its successor the ST-412 (10 MB) became popular in early PCs and established the 5.25-inch form factor as a standard for a while. The interface these drives used became a de facto standard for early PC disk controllers, known as the ST-506 (or ST-412) interface.

Another form factor improvement came soon after. In 1983, the first 3.5-inch hard drive was introduced by a company called Rodime. This smaller drive (about the size of a modern internal hard drive you might find in a desktop, though a bit thicker) had a 10 MB capacity. The 3.5-inch form factor eventually became the standard for desktop HDDs, while a 2.5-inch form factor emerged later for laptops (the first 2.5-inch drives appeared by the late 1980s). By making drives physically smaller, manufacturers enabled them to fit into compact personal computers and later portable computers.

During the 1980s, hard disk technology also improved in performance and standardization. New interfaces and standards were developed to connect drives to computers. In 1986, the industry standardized SCSI (Small Computer System Interface), which allowed multiple devices (including hard drives) to connect to a system using a common bus – popular in servers and higher-end workstations. Around the same time, the simpler and cheaper IDE (Integrated Drive Electronics) interface was created (the term “IDE” originally stood for Intelligent Drive Electronics), which integrated the drive controller onto the drive itself. IDE, later evolving into ATA/ATAPI, became the dominant interface for consumer PCs in the 1990s.

By the end of the 1980s, hard drives were a common (if somewhat expensive) component in personal computers. Capacities for PC drives grew from just a few megabytes at the start of the decade to around 100 MB or more by the decade’s close. Owning a hard drive was a luxury in early PCs (many PCs in the early 80s still ran from floppy disks due to HDD cost), but by 1990, having a hard disk was becoming essential for serious computer users.

1990s: Gigabytes for Everyone

If the 1980s introduced hard drives to the PC, the 1990s made them ubiquitous. This decade saw capacities soar into the gigabytes, and prices dropped enough that just about every new computer came with a hard drive. In 1991, hard drives reached the 1 GB mark in a 3.5-inch form factor suitable for PCs (a notable example was IBM’s 0663 “Corsair,” which was also the first drive to use magnetoresistive read heads). Storing hundreds of megabytes (and later several gigabytes) of data on a single disk drive revolutionized software and data usage – applications grew in features, and users could keep more files on hand.

Several key innovations and trends defined the 1990s for HDDs:

- Improved Disk Density: Drive makers introduced new techniques to pack data more densely on platters. For example, IBM pioneered the use of GMR (Giant Magnetoresistance) heads in 1997, which significantly improved how sensitively drives could read tiny magnetic bits. This helped enable drives like the IBM “Deskstar” series to reach tens of gigabytes by the end of the 90s.

- Faster Spindle Speeds: Typical desktop drives spun at 3,600 to 4,500 RPM in the early 90s. Seagate’s Barracuda (1992) was the first 7,200 RPM drive, and desktop drives moved to 5,400 and then 7,200 RPM over the rest of the decade. High-performance SCSI drives reached 10,000 RPM (first introduced around 1996) and later 15,000 RPM (by 2000) for server applications. Faster spindles shorten rotational latency, the time spent waiting for the right sector to pass under the head, and raise sustained transfer rates.

- Interface Evolution: The old IDE interface was upgraded to Enhanced IDE (EIDE) in the mid-90s and then to Ultra ATA standards, steadily increasing the bandwidth from 16 MB/s up to 33, 66, and then 100 MB/s by the end of the decade. SCSI interfaces likewise improved (Ultra SCSI, etc.), remaining the choice for servers. These interface improvements ensured that as drives got faster and stored more, the connection to the computer wasn’t a bottleneck.

- Birth of Portable Storage: We also saw interesting experiments like the IBM Microdrive (1999), a tiny 1-inch hard drive with 170 MB capacity designed for portable devices (like early digital cameras). While novel, such tiny HDDs were soon outpaced by another technology on the horizon – flash memory – but it showed how HDD tech tried to diversify.
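Spindle speed matters mostly because of rotational latency: after the head reaches the right track, it still waits, on average, half a revolution for the target sector to come around. The short sketch below is a simplified model (it ignores seek time and transfer time) that computes that average wait for the spindle speeds mentioned above.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for the target sector to rotate under the head, in ms.

    One revolution takes 60 / rpm seconds; on average the head waits
    half a revolution, so latency = (60 / rpm) / 2 seconds.
    """
    seconds_per_revolution = 60.0 / rpm
    return seconds_per_revolution / 2 * 1000.0


# Spindle speeds mentioned in the text.
for rpm in (3_600, 5_400, 7_200, 10_000, 15_000):
    print(f"{rpm:>6} RPM -> {avg_rotational_latency_ms(rpm):.2f} ms")
```

It prints about 8.33 ms at 3,600 RPM, 4.17 ms at 7,200 RPM, and 2.00 ms at 15,000 RPM, which helps explain why server drives chased ever-higher spindle speeds.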

By 1999, typical desktop hard drives might hold on the order of 10–20 GB, and the largest drives available reached around 40 GB. The hard drive had become an essential, ever-growing component of computing, enabling everything from robust databases to multimedia files like MP3s and digital videos to be stored at home.

2000s: From Terabytes to the Rise of SSDs

The 2000s continued the trend of rapid growth in capacity and saw the terabyte barrier broken. Early in the decade, drives passed 100 GB. Hitachi released a 500 GB drive in 2005, 750 GB drives followed in 2006, and in 2007 the first 1 terabyte hard drive hit the market (also from Hitachi). This was a landmark – 1,000 GB on a single drive – which seemed enormous at the time. By the end of the decade, multi-terabyte drives were becoming available, and the growth didn’t stop there.

Several important developments in this decade include:

- Serial ATA (SATA): In 2003, the interface used by most PC hard drives shifted from the old parallel ATA (IDE) ribbon cables to Serial ATA (SATA). SATA cables were thinner and easier to manage, and the interface initially supported up to 150 MB/s (SATA 1.0), later 300 MB/s (SATA 2.0), and 600 MB/s (SATA 3.0 by late 2000s). SATA became the universal standard for consumer hard drives (and even early SSDs) due to its speed and convenience.

- Reliability and New Recording Tech: Drive makers introduced technologies to keep improving capacity. One breakthrough was perpendicular magnetic recording (PMR), introduced around 2005. Instead of storing magnetic bits longitudinally along the platter, PMR stores them vertically, which allowed bits to be placed closer together without interference. This change dramatically increased platter density, enabling the big jumps in GB capacity mid-decade. Other enhancements included better error correction and more buffer memory on drives.

- Enterprise Features: In the enterprise realm, features like RAID (Redundant Array of Independent Disks) became common to combine multiple HDDs for better performance or redundancy, and hot-swappable drive bays in servers allowed drives to be replaced without downtime. While not a drive technology per se, these trends showed how crucial HDDs were to data centers powering the internet age.

- The First Flash SSDs: Perhaps the biggest shift brewing in the 2000s was the emergence of Solid-State Drives (SSDs) as an alternative to HDDs. Early SSDs began to appear in the mid-2000s, mostly for military or industrial use; they used flash memory (with no moving parts) to emulate a hard drive. In 2007, for example, the OLPC XO-1 educational laptop used a small amount of flash storage instead of a hard disk, and some premium ultrathin laptops offered expensive SSD options of 32 GB or 64 GB. These early SSDs were very costly and low in capacity, but they hinted at a future where storage could be solid-state (much faster and more shock-resistant) rather than spinning magnetic disks. By the end of the 2000s, a few consumer SSD models were on the market, but HDDs still reigned for bulk storage due to the huge price difference.
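The SATA throughput figures in the list above follow from SATA’s 8b/10b line encoding, which sends every 8-bit byte as a 10-bit symbol on the wire. Here is a minimal sketch of that conversion from the nominal line rates; it ignores framing and command overhead, which is why real SATA 3.0 drives top out somewhat below 600 MB/s.

```python
# Raw serial line rates of the three SATA revisions, in gigabits per second.
SATA_LINE_RATES_GBIT = {"SATA 1.0": 1.5, "SATA 2.0": 3.0, "SATA 3.0": 6.0}


def sata_payload_mb_per_s(line_rate_gbit: float) -> float:
    """Usable bandwidth (MB/s, decimal) after 8b/10b line encoding.

    8b/10b transmits each 8-bit byte as a 10-bit symbol, so one byte
    costs 10 bits on the wire: MB/s = bits per second / 10 / 1e6.
    """
    return line_rate_gbit * 1e9 / 10 / 1e6


for name, rate in SATA_LINE_RATES_GBIT.items():
    print(f"{name}: {rate} Gbit/s -> {sata_payload_mb_per_s(rate):.0f} MB/s")
```

This yields 150, 300, and 600 MB/s for the three revisions, matching the figures quoted for SATA 1.0, 2.0, and 3.0.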

By 2010, hard drives had reached capacities around 2 TB for consumers, and the familiar 3.5-inch and 2.5-inch form factors were everywhere (desktop PCs, laptops, external drives, etc.). The stage was set for a dramatic shift in the next decade, as HDD vs SSD became an important consideration for consumers and businesses alike.

Key Innovations in Hard Disk Technology and Design

Over the years, numerous hard disk technology innovations made hard drives more capacious, faster, and more reliable. Some of the key breakthroughs include:

- Areal Density Increases: The ability to pack more data onto the same size platter (areal density) has been a driving force. The IBM 350 stored roughly 2,000 bits per square inch; modern drives store on the order of a trillion bits per square inch. Innovations like GMR heads (1990s) and Perpendicular Magnetic Recording (2000s) were critical in squeezing more data into less space. More recently, SMR (Shingled Magnetic Recording) writes overlapping data tracks to cram even more data onto a platter (used in some high-capacity drives since the 2010s), and HAMR (Heat-Assisted Magnetic Recording) uses a laser to briefly heat a tiny spot of the disk surface while writing, enabling even greater density.

- Form Factor and Size Reduction: The physical size of drives shrank dramatically. From 24-inch platters in the 1950s, common platter sizes went to 14-inch, then 8-inch in the 1970s, 5.25-inch in the 80s, 3.5-inch, and 2.5-inch (and even smaller 1.8-inch or 1-inch microdrives briefly). Smaller platters mean shorter travel for the heads and often faster access times. The 3.5-inch drive became the desktop standard and 2.5-inch for laptops. Smaller form factors also paved the way for portable external drives and various device integrations.

- Interface and Controller Advances: As mentioned, we moved from primitive custom interfaces to standard ones like IDE/ATA, SCSI, and then SATA. Each new interface brought higher data transfer rates and features (like hot swapping in SATA or command queuing for efficiency). Additionally, moving the drive controller logic onto the drive (as in IDE) and later adding onboard cache memory (modern drives often have 32 MB, 64 MB or more cache) improved performance by handling more tasks internally.

- Reliability Features: Early drives were very sensitive – a tiny speck of dust could crash the heads. Modern drives are sealed and often filled with filtered air or helium. In 2013, for example, HGST (a Western Digital subsidiary) introduced helium-filled drives. Helium inside the drive (instead of regular air) reduces drag on spinning platters and turbulence, allowing more platters to be stacked and running cooler. This enabled drives with 7 or 8 platters instead of the usual 5, increasing capacity (the first helium drive was 6 TB, larger than any air-filled drive at the time). Drives also got better at parking heads safely when powered off, resisting shocks, and monitoring their own health (with SMART diagnostics).

- Noise and Power Improvements: Innovations like fluid dynamic bearings (introduced in the late 90s) replaced noisy ball bearings in spindle motors, making drives quieter and more reliable. Power consumption was reduced, especially for laptop drives that implemented spin-down features when idle and other optimizations.
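The areal-density gains described in the list above compound over time. The sketch below estimates the overall growth factor and the average yearly rate it implies, using two rounded assumptions: about 2,000 bits per square inch for the 1956 IBM 350, and about 1 terabit per square inch for a recent high-capacity drive (taken here as 2023). Treat the result as an order-of-magnitude illustration, not a measured trend line.

```python
# Approximate figures (assumptions for illustration, not precise specs):
IBM_350_BITS_PER_SQ_IN = 2_000   # 1956, IBM 350 Disk File
MODERN_BITS_PER_SQ_IN = 1.0e12   # ~1 Tbit/in^2, assumed for a recent HDD
START_YEAR, END_YEAR = 1956, 2023


def growth_factor(start: float, end: float) -> float:
    """How many times denser the end value is than the start value."""
    return end / start


def compound_annual_growth(factor: float, years: int) -> float:
    """Average yearly growth rate r such that (1 + r) ** years == factor."""
    return factor ** (1 / years) - 1


factor = growth_factor(IBM_350_BITS_PER_SQ_IN, MODERN_BITS_PER_SQ_IN)
years = END_YEAR - START_YEAR
rate = compound_annual_growth(factor, years)
print(f"{factor:.1e}x denser over {years} years, about {rate:.0%} per year")
```

With these inputs it reports a growth factor of 5 × 10^8, or roughly 35% per year on average; in reality the gains arrived unevenly, with big jumps around new head and recording technologies.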

Each of these innovations in hard disk drive technology built upon the last, making HDDs a workhorse technology that has managed to continually improve. By the late 2010s, however, even as HDDs reached multi-terabyte capacities, another technology was rapidly taking over certain roles: solid-state storage. That brings us to one of the biggest shifts in the history of data storage.

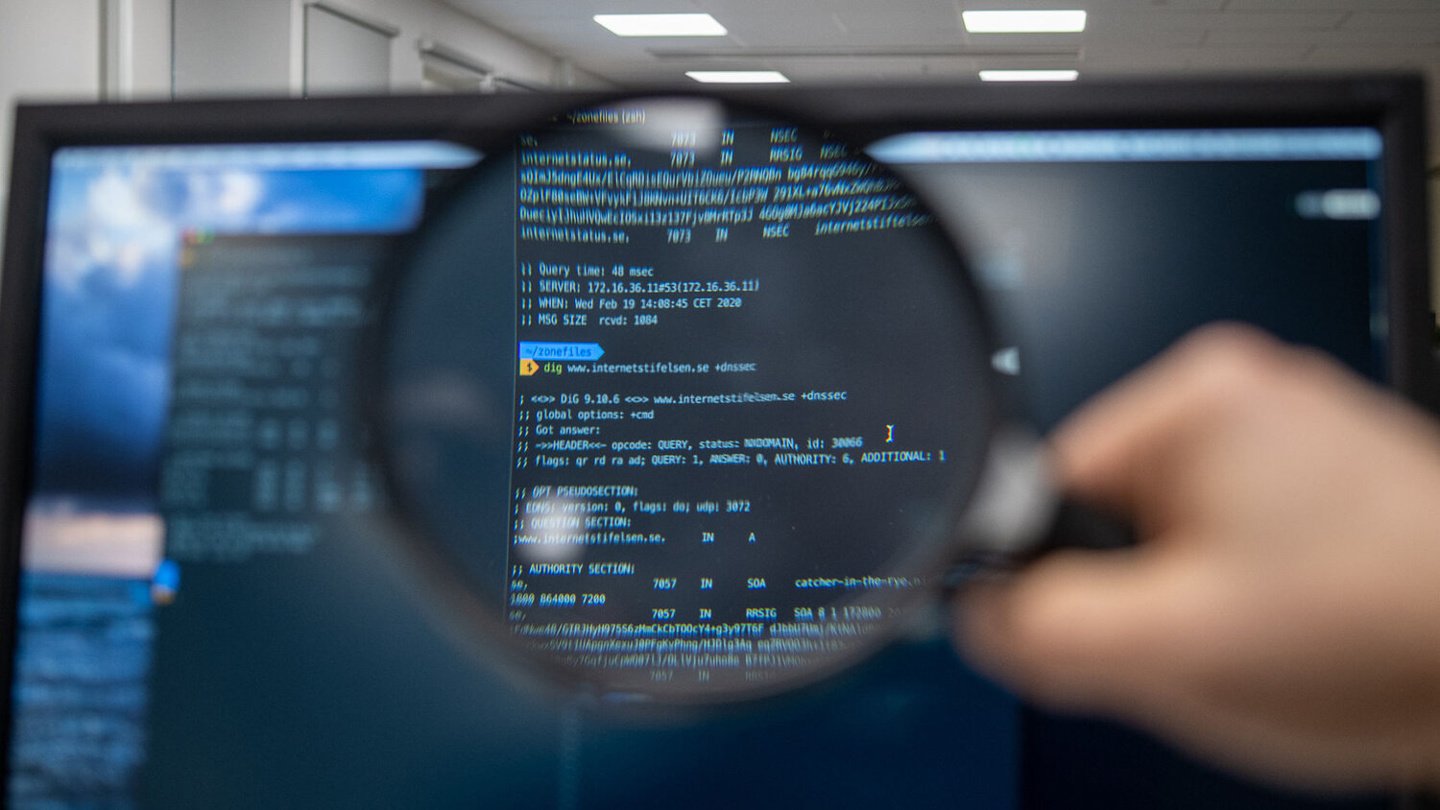

HDD vs SSD: The Shift to Solid-State Drives

One of the most significant turning points in storage history has been the transition from traditional magnetic hard disks (HDDs) to solid-state drives (SSDs). This shift has been driven by the very different strengths and weaknesses of the two types of drives. Let’s compare HDD vs SSD and see how the change unfolded:

- Technology: An HDD stores data on spinning magnetic platters with moving heads. It’s a mechanical device – sort of like a tiny record player inside your computer, with all the limitations of moving parts. An SSD, by contrast, has no moving parts. It stores data on flash memory chips (electronic transistors). In essence, an SSD is just a bunch of memory chips and a controller managing them, functioning more like a USB thumb drive (but much faster and more sophisticated).

- Speed: SSDs are blazingly fast compared to HDDs. Because there is no physical seeking or spinning needed, data on an SSD can be accessed almost instantly. An HDD typically takes several milliseconds to fetch a random block (the head must seek to the right track and then wait for the sector to rotate beneath it), whereas an SSD can do it in microseconds. In practical terms, switching from an HDD to an SSD means a computer can boot in seconds rather than minutes, and programs open near-instantly. Early SSDs (circa late 2000s) were only moderately faster than the fastest HDDs, but modern SSDs (especially those using NVMe, which we’ll discuss shortly) are orders of magnitude faster at random access and many times faster in sequential throughput.

- Reliability and Durability: Without moving parts, an SSD is generally more shock-resistant – drop your laptop, and an SSD is less likely to fail than an HDD with delicate spinning disks. HDDs, however, have been refined over decades and can last a very long time if not mishandled. SSDs have a different limitation: flash memory can only be written a certain number of times (each cell wears out eventually). Modern SSD controllers mitigate this with wear leveling and spare cells, so for typical use an SSD’s lifespan is excellent – often outlasting the device it’s in. But for extremely write-intensive tasks, SSD lifespan must be considered.

- Capacity and Cost: Historically, HDDs have had the advantage in capacity and cost per GB. You could get multi-terabyte HDDs for a fraction of the cost of a much smaller SSD. This is why, during the 2010s, many desktops and laptops used a combination (or offered options): a smaller SSD for the operating system and programs (for speed) and a large HDD for storing files (for capacity). However, the gap is closing. SSD capacities have skyrocketed, and prices have fallen. By the early 2020s, consumer SSDs exceeding 1 TB became common and reasonably affordable, and even 4 TB or 8 TB SSDs are available (though HDDs still offer larger sizes like 10+ TB at a lower cost per TB, mostly used in servers and backups).

- Noise and Power: HDDs make noise – the whir of platters and click of heads – and they consume more power when spinning. SSDs are silent and usually use less power (which means better battery life for laptops and less heat).
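Wear leveling, mentioned in the reliability bullet above, spreads erase cycles across all flash blocks so that no single block wears out early. The sketch below is a hypothetical, heavily simplified model (the class and method names are invented for illustration, not taken from any real controller): each new write goes to whichever free block has been erased the fewest times, tracked with a min-heap.

```python
import heapq


class WearLevelingAllocator:
    """Toy dynamic wear leveling: each write goes to the least-worn free block.

    Hypothetical model for illustration. Real SSD controllers implement this
    inside a flash translation layer together with garbage collection,
    static wear leveling, spare blocks, and bad-block management.
    """

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks
        # Min-heap of (erase_count, block_id) for blocks holding no live data.
        self.free = [(0, block) for block in range(num_blocks)]
        heapq.heapify(self.free)

    def write(self) -> int:
        """Take the least-erased free block, count one erase cycle, return its id."""
        erases, block = heapq.heappop(self.free)
        self.erase_counts[block] = erases + 1
        return block

    def release(self, block: int) -> None:
        """Return a block to the free pool once the data it holds is stale."""
        heapq.heappush(self.free, (self.erase_counts[block], block))


# Rewrite the same piece of data 100 times on a 4-block device.
alloc = WearLevelingAllocator(num_blocks=4)
for _ in range(100):
    block = alloc.write()
    alloc.release(block)

print(alloc.erase_counts)  # [25, 25, 25, 25]
```

Rewriting the same data 100 times leaves each of the four blocks with 25 erase cycles; a naive scheme that always reused block 0 would put all 100 cycles on a single block.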

The HDD vs SSD transition in the market really took off in the 2010s. Initially, enterprise data centers adopted SSDs for performance-critical tasks (like databases) despite cost, because of the huge speed gains. On the consumer side, SSDs started appearing in premium laptops (e.g., the MacBook Air in 2008 offered an SSD option) and enthusiast desktops. As prices dropped, they rapidly became mainstream. By the late 2010s, most new laptops were shipping with SSDs by default, and by 2020 even gaming consoles (the PlayStation 5 and Xbox Series X|S) had moved to solid-state storage.

Impact on Consumers: For everyday users, moving to an SSD was one of the most noticeable upgrades. A PC with a regular HDD might take a couple of minutes to fully start up; the same PC with an SSD might do it in 20 seconds. Programs feel snappier, and there’s no frustrating grinding noise when the computer is under load. SSDs also enabled thinner and lighter devices: without a bulky disk and shock-mounting, laptops could be slimmer. Smartphones and tablets, which have always used flash memory, set an expectation for instant response that SSD-equipped PCs could finally meet.

Impact on Enterprise: In servers and data centers, SSDs changed the game in terms of input/output operations per second (IOPS). A single SSD could often match the random-I/O performance of dozens of hard drives. This meant faster databases, quicker analytics, and the ability to virtualize more services on fewer physical drives. However, HDDs remained (and still remain) important for bulk storage. Cloud providers and backup services rely on the fact that HDDs offer lots of storage space cheaply. Many data centers use a tiered approach: fast SSDs for hot data and HDDs for colder, archival data.
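The random-I/O gap between the two can be estimated with a back-of-the-envelope model: a hard drive completes roughly one random read per average seek plus half a rotation. The inputs below are assumptions chosen for illustration (an 8.5 ms average seek, a 7,200 RPM spindle, and a deliberately conservative 10,000 random-read IOPS for the SSD), not figures for any particular product.

```python
import math


def hdd_random_iops(avg_seek_ms: float, rpm: float) -> float:
    """Rough random-read IOPS for one HDD with no queueing.

    Each random read costs one average seek plus, on average, half a
    revolution of rotational latency; transfer time is ignored.
    """
    rotational_latency_ms = (60_000.0 / rpm) / 2
    return 1000.0 / (avg_seek_ms + rotational_latency_ms)


# Assumed, illustrative inputs (not measurements of any specific product):
hdd_iops = hdd_random_iops(avg_seek_ms=8.5, rpm=7_200)  # roughly 79 IOPS
ssd_iops = 10_000  # conservative low-queue-depth SSD random-read figure

# Assumes HDD IOPS add up perfectly across drives (real arrays fall short).
drives_to_match = math.ceil(ssd_iops / hdd_iops)
print(f"One HDD: {hdd_iops:.0f} IOPS; HDDs needed to match one SSD: {drives_to_match}")
```

Under these assumptions one HDD manages about 79 IOPS, so matching even a conservatively rated SSD would take 127 drives; at deeper queue depths, where SSDs scale much further, the gap widens.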

It’s not that HDDs disappeared – far from it. Instead, the role of hard drives is shifting. HDDs continue to be used where capacity and cost matter more than speed (think storing large video archives, backup systems, etc.), while SSDs take over where performance is critical (operating system drives, active databases, etc.). We even saw hybrid solutions for a time: SSHDs or hybrid drives that combined a small SSD cache with a large HDD in one unit, though these became less common as pure SSD costs fell.

In summary, the last 10–15 years have seen HDDs and SSDs coexist, with SSDs steadily encroaching on territory once dominated by spinning disks. It’s a bit like electric cars versus gasoline cars: SSDs are the newer technology with clear advantages, but HDDs have a long history, ingrained use, and still hold an edge in a couple of metrics like cost per capacity (and thus they won’t vanish overnight).

Timeline of Hard Drive History: Major Milestones

To put everything in perspective, let’s step through a data storage timeline highlighting major milestones in the history of hard drives and storage technology. This timeline will give you a snapshot view of how things evolved:

- 1956 – The First Hard Drive: IBM 350 Disk File (part of the IBM 305 RAMAC) is introduced. It’s the world’s first hard disk drive, with 5 MB capacity on fifty 24-inch platters. Weighs over a ton and requires a small room to operate.

- 1961 – Airborne Heads: Development of air-bearing heads (heads that float on air) improves reliability. This year also sees drives like Bryant’s 4000 series reaching ~200 MB – huge for the time, albeit on very large disks.

- 1962 – Removable Disk Packs: IBM 1311 drive introduces removable disk packs, each about 2 MB. Users can swap packs to load different data, an early form of removable storage.

- 1970 – Birth of Western Digital: Western Digital is founded (as General Digital). It starts out making semiconductors and enters the hard drive business only in the late 1980s, but it eventually becomes one of the largest HDD manufacturers.

- 1973 – Winchester Drive: IBM introduces the 3340 “Winchester” hard disk, which seals the platters and low-mass lubricated heads inside a removable data module, a design that becomes the standard for decades. The modules hold 35 or 70 MB each (the “30-30” nickname came from an early plan for two 30 MB units), and the sealed design greatly improves reliability.

- 1979 – Seagate Founded: Seagate Technology, destined to be a major HDD manufacturer, is founded. This marks the rise of drives for the personal computer market.

- 1980 – First 5.25″ HDD & 1 GB Barrier: Seagate releases the ST-506, the first 5.25-inch hard drive (5 MB capacity), making HDDs viable for microcomputers. In the same year, IBM announces the 3380 disk system, the first to surpass 1 gigabyte (it stores about 2.5 GB), though it’s a massive unit for mainframes.

- 1983 – First 3.5″ Hard Drive: Rodime introduces a 3.5-inch form factor HDD (about 10 MB), which will later become the standard drive size for desktop PCs.

- 1986 – SCSI Standardized: The SCSI interface (fast, multi-device interface mostly for servers/workstations) is standardized, while the IDE/ATA interface (simpler, for PCs) is also coming into use in this period (with the first IDE drives around 1985–86).

- 1991 – 1 GB Drives for PCs: Hard drives break the 1 gigabyte mark in a single 3.5″ unit suitable for personal computers. Drives in the early ’90s quickly go from hundreds of MB to a few GB in capacity.

- 1994 – Enhanced IDE: Western Digital introduces Enhanced IDE, improving drive interface throughput and allowing more drives to connect, which helps pave the way for larger and faster drives in PCs.

- 1996–1997 – 10,000 RPM & GMR Heads: Seagate’s Cheetah becomes the first 10,000 RPM hard drive (1996, for servers), boosting performance. In 1997, IBM releases the Deskstar 16GP with GMR head technology, greatly increasing areal density (16.8 GB capacity, huge at the time).

- 2000 – 15,000 RPM Drives: The fastest-spinning HDDs hit 15k RPM (again in the server market, for ultra-fast access). Also around this time, industry consolidation: Maxtor acquires Quantum’s HDD division (making Maxtor the largest at the time), and by mid-2000s Seagate will acquire Maxtor.

- 2003 – SATA Arrives: Serial ATA (SATA) interface launches, replacing parallel IDE in consumer PCs. SATA is faster and more convenient (thin cables, hot-plug capability). Initial drives use SATA 1.5 Gbit/s, soon moving to 3 Gbit/s (SATA II) and 6 Gbit/s (SATA III by 2009).

- 2005 – Perpendicular Recording: First drives using perpendicular magnetic recording ship (Toshiba introduces a 1.8″ drive with PMR, followed by others). This technology jump allows a big boost in capacity. This year also sees the first 500 GB HDD (Hitachi).

- 2006–2007 – 1 TB Barrier & SSD Emergence: In 2006, Seagate buys Maxtor (further consolidation). By 2007, Hitachi releases the first 1 TB hard disk drive, marking terabyte storage for consumers. Meanwhile, the first consumer SSDs start appearing around this time (though in small capacities like 32 GB and very expensive), and hybrid HDD/flash drives are experimented with.

- 2008 – Affordable SSDs & 1.5 TB HDD: The SSD market gets a boost as companies like Intel release somewhat affordable SSDs for enthusiasts. In HDD land, Seagate launches a 1.5 TB drive, continuing the capacity race.

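- 2010 – 3 TB Drives: The first 3 terabyte hard drives come out (around 2010). Drives this large expose a limit elsewhere in the stack: the legacy MBR partition scheme records partition sizes as 32-bit sector counts, which with 512-byte sectors caps a partition at about 2.2 TB, so larger disks need GPT partitioning (and UEFI firmware to boot from them). The same period also sees the industry move to 4K-sector “Advanced Format” drives.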

- 2011 – Thai Floods & 4 TB Drives: A significant event – flooding in Thailand (a major hub of HDD manufacturing) causes global hard drive shortages, spiking prices. Some consumers, facing expensive HDDs, consider SSDs instead, boosting SSD adoption. Seagate also debuts a 4 TB hard drive in 2011.

- 2012 – Helium Drives: HGST announces the first helium-filled drives (ultimately a 6 TB drive by 2013). Helium technology opens the door to 8 TB, 10 TB, and beyond in subsequent years. By now, HDD makers are using tricks like helium and shingled recording to keep increasing capacity.

- 2013–2014 – Shingled Magnetic Recording (SMR): Drives with SMR technology appear, allowing higher density by overlapping tracks (Seagate’s 5 TB drive in 2013 used this). Meanwhile, standard drives hit 6 TB, 8 TB capacities by 2014 without SMR (using multiple platters and helium in enterprise models).

- 2015 – 10 TB HDD: Western Digital (via HGST) ships the first 10 TB hard drive, showing that double-digit terabytes are now achievable.

- 2010s – Rise of NVMe and Fast SSDs: While HDDs are growing in capacity, the mid-to-late 2010s see NVMe SSDs entering the scene. NVMe (Non-Volatile Memory Express) is a new interface/protocol for SSDs that connects via PCI Express, unlocking far higher speeds than SATA. By 2015–2016, the first consumer NVMe drives (like the Samsung 950 Pro) offer several times the speed of SATA SSDs, and by 2018 NVMe SSDs are common in performance PCs. SSD capacities also increase: for instance, Samsung released a 15 TB enterprise SSD in 2016, and by 2018/2019, 2TB consumer SSDs are relatively affordable.

- 2020 – 20 TB Drives & Beyond: HDD technology marches on in capacity. Around 2020, 18 TB and 20 TB hard drives (using technologies like energy-assisted recording) become available from Seagate and Western Digital for enterprise NAS and data center use. These often use SMR or new write methods to achieve such high density.

- 2021–2023 – 22+ TB and HAMR: Seagate and Western Digital introduce 22 TB hard drives and even 24 TB models (with help from techniques like ePMR and OptiNAND, which uses a bit of flash for metadata). Seagate also begins rolling out the first drives with HAMR (Heat-Assisted Magnetic Recording) technology, aiming for even larger capacities. By 2023, lab demos and limited releases of 30 TB+ drives are in sight. On the SSD front, consumer NVMe SSDs reach up to 4 TB or 8 TB, and enterprise SSDs surpass 30 TB in 2.5-inch form factor.

- 2025 and Beyond – New Frontiers: Looking ahead, HDD manufacturers plan for 30, 40, even 50 TB drives within a few years using HAMR/MAMR tech. SSDs might commonly hit 8–16 TB for consumers as 3D NAND flash improves. And new storage concepts (like storing data in DNA or holographic storage) are being researched for the more distant future.
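The 2.2 TB ceiling mentioned in the 2010 entry comes from the legacy MBR partition table, whose entries record partition sizes as 32-bit counts of logical sectors. Multiplying the largest such count by the sector size gives the limit. The sketch below does that arithmetic for 512-byte sectors and, for comparison, for drives that expose 4,096-byte logical sectors.

```python
# A legacy MBR partition entry stores the partition's size as a 32-bit
# count of logical sectors, so no partition can exceed 2**32 sectors.
MBR_MAX_SECTORS = 2**32


def mbr_limit_bytes(logical_sector_size: int) -> int:
    """Approximate ceiling on an MBR partition's size, in bytes."""
    return MBR_MAX_SECTORS * logical_sector_size


for sector_size in (512, 4096):
    limit = mbr_limit_bytes(sector_size)
    print(f"{sector_size}-byte sectors: {limit:,} bytes = {limit / 1e12:.1f} TB")
```

It prints 2,199,023,255,552 bytes (2.2 TB) for 512-byte sectors and about 17.6 TB for 4,096-byte sectors. Many Advanced Format drives still emulate 512-byte logical sectors, so in practice the usual fix is GPT partitioning rather than a larger sector size.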

This timeline shows how quickly storage has progressed from a 5 MB fragile disk to multi-terabyte robust devices – an incredible evolution of data storage in a relatively short time.

The Future of Storage: NVMe, Cloud Storage, and Beyond

Today, we stand at an interesting point in the history of data storage. Hard drives are still around and improving in certain ways, but the cutting-edge excitement is largely in solid-state and beyond. What does the future of storage look like as we move further into the 2020s and 2030s? Let’s explore a few key trends and emerging technologies:

NVMe and Modern SSDs: One of the most important current technologies is NVMe (Non-Volatile Memory Express). NVMe isn’t a type of storage media by itself, but rather a highly efficient interface/protocol designed specifically for flash memory. SATA drives typically use AHCI, a protocol from the era of slower mechanical drives that offers a single command queue 32 entries deep. NVMe, by contrast, supports up to 65,535 I/O queues of up to 65,536 commands each, which lets it take advantage of the parallelism of flash. NVMe SSDs, usually in the form of M.2 “gumstick” drives that plug directly into the motherboard, can reach astounding speeds. High-end PCIe 4.0 NVMe SSDs reach sequential read speeds of around 7,000 MB/s, and PCIe 5.0 models exceed 10,000 MB/s. That is more than an order of magnitude faster than SATA SSDs (capped at ~550 MB/s) and dozens of times faster than hard drives. This means large files transfer in seconds and heavy applications or games load far more quickly. As the tech matures, NVMe drives are becoming standard in new PCs, and capacities are growing (we’re seeing 4 TB and 8 TB M.2 SSDs, and even larger enterprise U.2 drives). The continued development of NVMe (with new versions of PCIe offering even more bandwidth) will likely make storage speed less of a bottleneck in the future. Essentially, storage is catching up to extremely fast CPUs and GPUs by removing older interface constraints.
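To put these figures in perspective, here is a back-of-the-envelope sketch of how long a hypothetical 50 GB file takes to copy at each interface’s typical sequential speed. The speeds are assumed round numbers (about 200 MB/s for a modern 7,200 RPM hard drive, ~550 MB/s for SATA, ~7,000 MB/s for a PCIe 4.0 NVMe drive), not benchmarks; real copies also depend on file sizes, caching, and the slower of the source and destination drives.

```python
# Rough transfer-time estimate at a sustained sequential throughput.
# The file size and speeds below are illustrative assumptions, not measurements.
FILE_SIZE_GB = 50  # hypothetical file size in (decimal) gigabytes

SPEEDS_MB_S = {
    "HDD (7,200 RPM)": 200,
    "SATA SSD": 550,
    "NVMe SSD (PCIe 4.0)": 7000,
}


def transfer_seconds(size_gb: float, speed_mb_s: float) -> float:
    """Seconds needed to move size_gb gigabytes at speed_mb_s megabytes per second."""
    return size_gb * 1000 / speed_mb_s  # 1 GB = 1000 MB in decimal units


for name, speed in SPEEDS_MB_S.items():
    print(f"{name:22s} {transfer_seconds(FILE_SIZE_GB, speed):7.1f} s")
```

At these assumed speeds, the copy takes about 250 seconds on the hard drive, about 91 seconds on a SATA SSD, and roughly 7 seconds on the NVMe drive.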

Cloud-Based Storage: The way we use storage has also been altered by the rise of the cloud. Cloud storage doesn’t replace local drives entirely, but it complements them by storing data on remote servers accessible via the internet. Services like Dropbox, Google Drive, iCloud, and OneDrive (which gained popularity in the 2010s) mean that a lot of our files aren’t only on our personal hard drive; they’re also in data centers. For consumers, cloud storage offers convenience (access your files from anywhere, share easily) and a form of backup. For enterprises, cloud storage and cloud computing offer scalability – instead of buying more hard drives for your server room, you can just pay a cloud provider to store and manage data.

The impact on hard drives is twofold. On one hand, cloud providers themselves buy a lot of storage (both HDDs and SSDs) to run these services, so the demand for drives is still there, just concentrated in data centers. On the other hand, individual consumers might care a bit less about having huge drives in their personal devices if a lot of their data lives in the cloud. For example, a Chromebook laptop might have a very small SSD, expecting the user to store most files on Google Drive; smartphones, too, rely on cloud backups and streaming (notice how we no longer need to carry around as much local music or video, because we stream from services that store data remotely). So the future of storage usage is a mix of local and cloud. We’ll likely continue to see this hybrid model, where critical and frequently used data is kept on fast local SSDs, and large archives or seldom-accessed content sits in the cloud (which itself might be on large HDD arrays or slower tiers).

Emerging Technologies (DNA Storage and more): Looking further ahead, entirely new ways to store data are under development. One of the most fascinating is DNA data storage. Researchers have been experimenting with encoding digital data into the sequences of synthetic DNA molecules. The appeal of DNA storage is its incredible density and longevity: in theory, you could store petabytes of data in a tiny pellet of DNA, and it could remain readable for thousands of years if kept in a cool, dry place. Imagine backing up an entire data center’s information in something you could hold on your fingertip! In practice, DNA storage is still very experimental. Writing data (synthesizing DNA) and reading data (sequencing DNA) are slow and expensive right now. But progress is being made; for instance, scientists have successfully stored images, text, and even a short film in DNA as proofs of concept. As of 2025, DNA drives are nowhere near our laptops, but companies and labs are actively working on automating and improving the process. It’s conceivable that in a decade or two, DNA storage could be used for archival purposes: think cold storage of huge amounts of data (like all of Wikipedia, or library archives) in a very tiny, stable form.
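To make “encoding digital data into DNA” concrete, here is a deliberately naive sketch that maps every two bits to one of the four nucleotide bases (A, C, G, T), so each byte becomes four bases. The specific bit-to-base table is an illustrative assumption, not any lab’s actual scheme; practical systems add error correction, split data into short addressed strands, and avoid long runs of the same base, which are hard to synthesize and sequence.

```python
# Naive 2-bits-per-base DNA encoding (illustrative only; no error correction
# and no constraints on repeated bases, unlike real DNA storage schemes).
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a strand of bases, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Reverse encode(): map each base back to two bits, then regroup into bytes."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"Hi")
print(strand)  # CAGACGGC
assert decode(strand) == b"Hi"
```

Because each base carries two bits, the two-byte input `b"Hi"` becomes an eight-base strand, and decoding simply reverses the lookup.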

Other futuristic storage techs include quantum storage and holographic storage, but those are even further from practical use. More immediately, we might see phase-change memory or related tech (like Intel’s 3D XPoint/Optane, which appeared in the late 2010s) bridging the gap between volatile memory and storage. Although Intel discontinued Optane in 2022, the idea of super-fast non-volatile memory persists in research.

For hard drives (HDDs) themselves, the roadmap to stay relevant is mainly through capacity. As noted, technologies like HAMR (using heat to assist writing bits) and MAMR (Microwave-Assisted Magnetic Recording) are the industry’s bets to push HDDs to 30 TB, 50 TB, and beyond in the coming years. These will likely find homes in data centers where massive capacity is king. HDDs might also evolve by adding small doses of solid-state tech onboard (for example, Western Digital’s OptiNAND drives include flash memory that stores metadata, helping with reliability and capacity). So we may see more hybrid approaches that squeeze the most out of spinning disks while leveraging a bit of flash where beneficial.

In summary, the current state-of-the-art storage for everyday computing is solid-state, particularly NVMe SSDs, providing tremendous speed. Cloud storage changes how we think about physical storage by abstracting it away to remote servers. And the future of storage likely holds even more radical shifts, from potentially storing data in molecules (DNA) to whatever breakthroughs come next. What’s certain is that our world’s data needs are only growing – and storage technology will continue to innovate to keep up, whether through evolutionary improvements or revolutionary new methods.

Conclusion: The Legacy and Future of Hard Drives in Computing

Reflecting on the entire history of hard drives, it’s clear that these storage devices have been a foundational technology in computing. From the moment IBM’s RAMAC first whirred to life in 1956, hard drives enabled something magical: quick access to vast (for the time) amounts of data. This capability underpinned the growth of modern computing – databases, operating systems, personal computing, the internet – all these things depended on the ability to store and retrieve data rapidly.

Through the decades, hard drives got smaller, cheaper, and hugely more capacious, which in turn allowed software and digital content to flourish. Think about it: early computers couldn’t even store a single high-quality image due to storage limitations. Today, we casually carry around thousands of photos and videos on our phones or have instant streaming access to media libraries, a direct result of advancements in storage. The role of hard drives in computing history is one of enabling progress: every time storage became less of a limiting factor, a new wave of digital innovation followed, whether it was the era of PC applications in the 1990s, the explosion of multimedia in the 2000s, or the big data revolution of the 2010s.

Now we’re in the midst of another transition – moving from the familiar spinning disks to silicon chips for our storage needs. It’s an exciting time, as solid-state storage and other technologies unlock new possibilities (faster computing, smaller devices, etc.). Yet, the traditional hard drive isn’t truly gone; it has found its place in a complementary role and continues to serve us wherever large volumes of affordable storage are needed.

In everyday technology, whether you realize it or not, hard drives (HDDs or SSDs) are working behind the scenes. They store all your important documents, your family photos, and your game libraries, and they keep the digital infrastructure of the world running, from bank records to cloud servers. As consumers, we’ve gone from worrying whether we have enough space for a few documents to barely thinking about space as we store entire HD movie collections and beyond. And yet, we keep needing more storage: new apps, higher-resolution videos, VR environments, and machine learning datasets all drive demand upward.

In conclusion, the story of hard drives – from spinning disks to solid-state storage – is a story of constant innovation. It illustrates how engineering ingenuity transformed a bulky 5 MB machine into a tiny card that can hold terabytes of data or an entire digital universe in the cloud. The legacy of the hard drive is one of enabling the information age, and its future, whether in the form of HDDs improving or newer technologies taking the torch, will continue to shape what’s possible in technology. As we look ahead, one thing is certain: our need to store data will keep growing, and storage solutions will keep evolving to meet those needs, in ever more remarkable ways. Here’s to the next chapter in the evolution of data storage – whatever it may be!