Artificial Intelligence (AI) stands as one of the most transformative and fascinating fields in technology. Over the decades, it has journeyed from mere conceptualization to astonishing accomplishments, forever altering the way we perceive and interact with machines. The evolution of AI is a captivating tale, marked by numerous milestones that have reshaped our understanding of intelligence and computational problem-solving.

In 1956, a group of pioneering minds convened at the Dartmouth Workshop, setting the stage for the birth of AI as a distinct field of study. This gathering of visionaries, including luminaries like John McCarthy and Marvin Minsky, laid the groundwork for a journey that would take us from the rudimentary beginnings of AI to the era of advanced machine intelligence we now witness.

One of the earliest breakthroughs in this journey was the creation of the Perceptron by Frank Rosenblatt in 1957. The Perceptron, a rudimentary neural network, offered a glimpse into the potential of machines to simulate human cognitive processes. Its development was a harbinger of the neural network revolution that would later reshape the AI landscape.

Fast forward to 1997, and we encounter Deep Blue, the IBM supercomputer that stunned the world by defeating world chess champion Garry Kasparov. This iconic moment demonstrated AI’s ability to excel in complex strategic games and underscored the rapid progress in the field, even though Deep Blue relied on large-scale search rather than the learning-based methods of the deep learning era that would follow.

The evolution of AI has been marked by an ever-accelerating pace of innovation, fueled by advancements in computing power, algorithms, and data availability. From expert systems in the 1970s to AlphaGo’s triumph over a Go world champion in 2016, AI has consistently pushed the boundaries of what was once thought possible.

In this exploration of the evolution of AI, we will delve deeper into these transformative milestones and unveil the remarkable journey of a field that has not only expanded the horizons of human-machine collaboration but also holds the promise of reshaping industries, solving complex problems, and shaping the future of our world. Join us on this remarkable odyssey through the annals of AI history, as we trace the path from the humble Perceptron to the awe-inspiring triumph of Deep Blue and beyond.

What are the key historical developments in the field of AI?

Key historical developments in the field of AI include:

Dartmouth Workshop (1956)

The birth of AI as a field when researchers convened to discuss and define its principles.

The Logic Theorist (1955)

The first computer program capable of proving mathematical theorems, showing AI’s problem-solving potential.

General Problem Solver (1957)

A program that expanded on theorem proving, tackling a broader range of problems using symbolic logic.

The Perceptron (1957)

One of the earliest trainable neural networks, paving the way for modern machine learning.

Lisp Programming Language (1958)

Developed for AI, Lisp became crucial for symbolic reasoning.

Shakey the Robot (1966)

A mobile robot with reasoning abilities, bridging AI and robotics.

Expert Systems (1960s-1970s)

Dendral and MYCIN exemplified AI’s ability to emulate human expertise in specialized domains.

Backpropagation Algorithm (1986)

A significant advancement in training artificial neural networks, facilitating deep learning.

IBM’s Deep Blue (1997)

Deep Blue’s victory over Garry Kasparov demonstrated AI’s prowess in strategic gaming.

AlphaGo (2016)

DeepMind’s AlphaGo’s win against Lee Sedol highlighted AI’s capabilities in complex, intuitive domains like Go.

These milestones collectively shaped AI’s evolution into the diverse and influential field it is today.

Who is the father of AI?

The title “father of AI” is often attributed to John McCarthy. He was a pioneering computer scientist and mathematician who played a central role in the development and popularization of artificial intelligence as a field of study. McCarthy is best known for organizing the Dartmouth Workshop in 1956, a seminal event that marked the birth of AI as a distinct field.

Throughout his career, McCarthy made numerous significant contributions to AI research, including the development of the Lisp programming language, which became a cornerstone of AI programming due to its flexibility and suitability for symbolic reasoning. Around the same time, his contemporaries Allen Newell and Herbert A. Simon built early AI programs like the Logic Theorist and the General Problem Solver, which demonstrated the feasibility of automated problem-solving and logical reasoning.

John McCarthy’s visionary work and leadership laid the foundation for the AI research community and set the stage for the continued advancement of artificial intelligence technology.

10 Milestones in the History of AI

The history of artificial intelligence (AI) is filled with significant milestones that have shaped the field into what it is today. Here are 10 key milestones in the history of AI:

Dartmouth Workshop (1956)

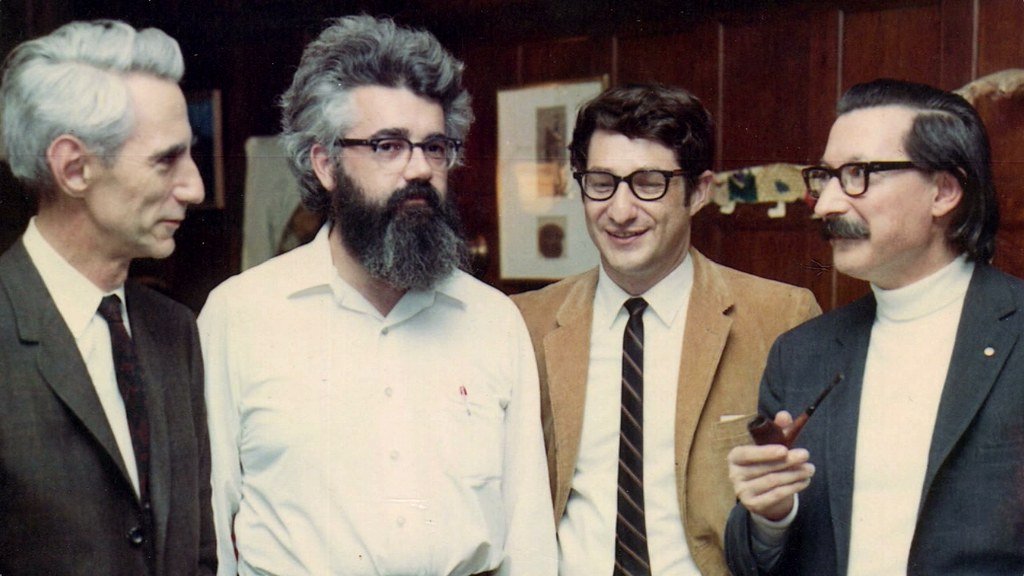

The Dartmouth Workshop of 1956 is widely recognized as the foundational moment in the birth of Artificial Intelligence (AI). Organized by a quartet of visionary pioneers in the field—John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon—this historic event convened a select group of researchers, each with a shared vision: to explore the uncharted territory of machine intelligence.

During the six-week-long workshop, these intellectual trailblazers engaged in discussions, debates, and collaborative endeavors that laid the cornerstone for AI as a formal discipline. They aimed to define AI, delineate its goals, and outline the potential avenues of research and development. The workshop’s attendees grappled with profound questions surrounding problem-solving, machine learning, and symbolic reasoning.

This pivotal event not only set the stage for decades of AI research and innovation but also fostered a sense of community among those who believed in the power of machines to replicate human-like cognitive abilities. The Dartmouth Workshop’s enduring legacy is its role in shaping AI as an academic and practical field, propelling humanity toward a future where machines would augment our intelligence and capabilities in ways previously unimaginable.

The Logic Theorist (1955)

Developed by Allen Newell and Herbert A. Simon, together with programmer Cliff Shaw, in 1955, the Logic Theorist marked a watershed moment in the history of artificial intelligence (AI). This computer program was the first of its kind to demonstrate the capability to prove mathematical theorems, a task that was previously considered exclusive to human intelligence.

The Logic Theorist operated by employing symbolic logic and heuristic search techniques to explore and manipulate logical expressions. It mimicked human problem-solving abilities by representing mathematical problems as logical statements, then systematically applied rules and deductions to arrive at solutions. This was a groundbreaking revelation, as it challenged the prevailing notion that only human cognition could tackle such abstract and complex tasks.

The Logic Theorist’s success not only showcased the potential of AI but also set a precedent for the development of subsequent AI systems. It sparked enthusiasm within the AI research community and inspired further exploration into simulating human thought processes through computational means. In essence, the Logic Theorist laid a critical foundation for the evolution of AI, illuminating the path towards creating intelligent machines capable of emulating human problem-solving abilities.

General Problem Solver (GPS) (1957)

General Problem Solver (GPS), a creation of AI pioneers Allen Newell and Herbert A. Simon, represented a significant leap forward in the development of artificial intelligence during the late 1950s. Building on the foundation laid by their earlier work, including the Logic Theorist, GPS was designed to be a versatile problem-solving program that could tackle a broader spectrum of problems.

What set GPS apart from its predecessors was its separation of a general problem-solving strategy from knowledge about any particular problem. Problems were represented symbolically, and GPS used means-ends analysis: it compared the current state with the goal, then chose operators that reduced the difference, breaking complex issues into smaller, more manageable subgoals. By applying a set of predefined rules and logical reasoning, GPS could in principle address a wide range of formalized problems, from mathematical theorems to puzzles and logical reasoning tasks.

GPS’s symbolic representation of problems and its problem-solving capabilities laid the groundwork for future AI systems, including expert systems and modern-day machine learning algorithms. Its innovative approach to problem-solving marked a pivotal moment in the history of AI, showcasing the potential for computers to mimic human-like reasoning and problem-solving abilities. This early work continues to influence and shape the field of AI to this day, driving progress in areas as diverse as natural language processing, robotics, and autonomous decision-making.

The Perceptron (1957)

Created by Frank Rosenblatt, the perceptron stands as a pioneering milestone in the history of artificial intelligence. Developed in 1957, it was among the earliest attempts to simulate human neural processes using machines. At its core, the perceptron is a simplified model of a biological neuron, designed to make binary decisions. Its significance lies not only in its conceptual simplicity but also in the profound implications it had for the future of AI.

The perceptron marked the initial foray into the realm of artificial neural networks, which would later evolve into the sophisticated deep-learning models we have today. This simple building block laid the foundation for modern machine learning and neural networks by introducing the concept of training a model on labeled data to improve its performance.

While the single-layer perceptron had limitations, such as its inability to solve problems that weren’t linearly separable (the XOR function is the classic example), it served as a catalyst for further research and innovation. Over the decades, its legacy has grown, and its influence on the field of AI remains undeniable: its descendants power applications ranging from image and speech recognition to autonomous vehicles and natural language processing.
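The learning rule and the linear-separability limit described above can be illustrated with a minimal sketch. The function names, the learning rate, and the AND and XOR toy datasets are assumptions chosen for illustration; they are not taken from Rosenblatt's original work.

```python
def predict(weights, bias, x):
    """Return 1 if the weighted sum of inputs exceeds the threshold, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Train on (inputs, label) pairs with the classic perceptron update rule."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            # Nudge the weights toward the correct answer only on mistakes.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def accuracy(samples, weights, bias):
    """Fraction of samples classified correctly."""
    hits = sum(predict(weights, bias, x) == t for x, t in samples)
    return hits / len(samples)


# Illustrative toy datasets (assumptions, not from the original text).
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]  # linearly separable
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]  # not separable

and_w, and_b = train_perceptron(AND_DATA)
xor_w, xor_b = train_perceptron(XOR_DATA)
print("AND accuracy:", accuracy(AND_DATA, and_w, and_b))
print("XOR accuracy:", accuracy(XOR_DATA, xor_w, xor_b))
```

On the AND data the rule settles on a separating line within a few passes. On XOR no single line can separate the two classes, so at least one of the four points is always misclassified however long training runs.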

Lisp Programming Language (1958)

Developed by John McCarthy in 1958, the programming language Lisp (short for “LISt Processing”) emerged as a groundbreaking innovation in artificial intelligence (AI). Its significance lies not only in its historical context but also in its enduring impact on AI research and computer science as a whole.

Lisp gained widespread adoption in AI research primarily because of its unique features and capabilities. Unlike many other programming languages of its time, Lisp was designed with a focus on symbolic processing. This made it exceptionally well-suited for tasks involving complex symbolic reasoning, a fundamental component of AI systems. Lisp’s ability to manipulate symbolic expressions effortlessly allowed AI researchers to represent knowledge and create sophisticated rule-based systems, known as expert systems, which were pioneering applications of AI in various domains.

Furthermore, Lisp’s flexibility and dynamic nature made it an ideal choice for experimenting with new AI concepts and rapidly prototyping AI algorithms. Its garbage collection and support for recursion were particularly advantageous for handling complex data structures and managing memory efficiently—a crucial aspect of AI programming.

As AI continued to evolve, Lisp remained a foundational language, playing a significant role in the development of early AI systems, natural language processing, robotics, and expert systems. Although newer programming languages and technologies have emerged over the years, Lisp’s legacy endures, serving as a testament to its enduring relevance in the history and ongoing evolution of artificial intelligence.

Shakey the Robot (1966)

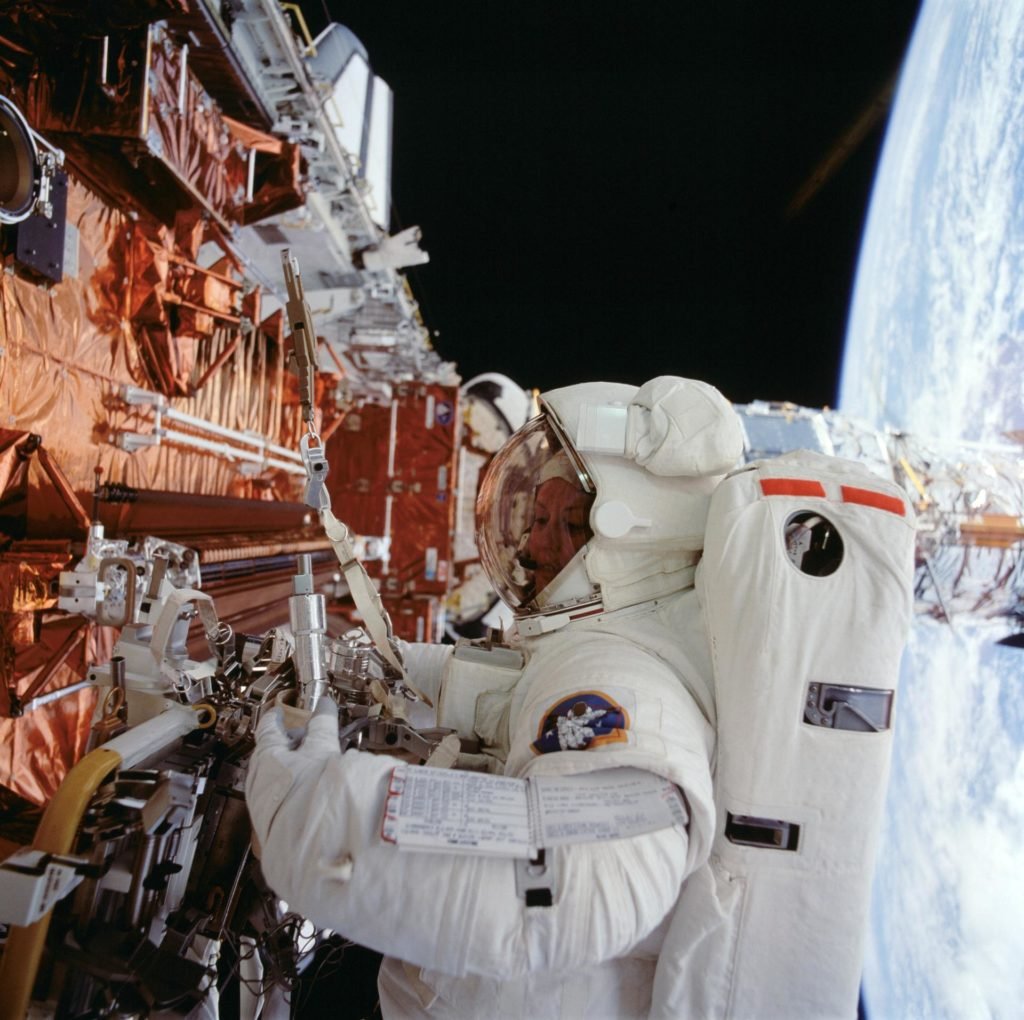

Developed at Stanford Research Institute in the late 1960s, Shakey the Robot was a groundbreaking achievement that heralded a new era in robotics and artificial intelligence integration. Shakey wasn’t just another mechanical automaton; it was a pioneering robot with a remarkable ability to reason about its actions and adapt to its environment.

Shakey’s significance lay in its capacity to perform what we now call “symbolic reasoning.” Equipped with a range of sensors, including cameras and bump sensors, Shakey could perceive its surroundings and interpret the visual information it collected. It used this information to build an internal representation of its environment, complete with objects and obstacles. This cognitive mapping allowed Shakey to plan and execute actions autonomously, navigating around obstacles to achieve its goals.

This groundbreaking approach marked a significant departure from earlier, more rigid robotic systems that relied on pre-programmed sequences of actions. Shakey’s ability to reason about its actions in real-time opened the door to more flexible and adaptive robotic systems, setting the stage for the development of modern autonomous robots capable of navigating complex, dynamic environments.

In essence, Shakey was a trailblazer that helped bridge the gap between AI and robotics, paving the way for the intelligent, problem-solving robots we see in various applications today, from manufacturing and healthcare to space exploration and autonomous vehicles. Its legacy continues to influence the development of AI-driven robotics, reminding us of the transformative power of interdisciplinary research and innovation.

Expert Systems (1960s-1970s)

The development of expert systems, exemplified by pioneering projects like Dendral and MYCIN in the 1960s and 1970s, marked a watershed moment in the evolution of artificial intelligence. These systems showcased the immense potential for AI to emulate and even surpass human expertise in specialized domains, effectively bridging the gap between human knowledge and machine intelligence.

Dendral, primarily focused on chemistry, was a trailblazing expert system that demonstrated the ability of AI to solve complex problems in a highly specialized field. It could analyze chemical mass spectrometry data and infer the possible molecular structures of organic compounds. This feat was a testament to AI’s capacity to mimic human reasoning and problem-solving processes.

MYCIN, on the other hand, was designed for medical diagnostics, specifically in the domain of infectious diseases. It leveraged a knowledge-based approach, emulating the decision-making processes of medical experts to diagnose bacterial infections and recommend suitable antibiotic treatments. MYCIN’s success not only showcased the potential of AI in healthcare but also paved the way for the development of medical expert systems that continue to aid healthcare professionals to this day.
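To make the knowledge-based approach more concrete, here is a minimal sketch of goal-driven (backward-chaining) rule evaluation, the general style of reasoning associated with systems like MYCIN. The rule names and facts are hypothetical placeholders, not real medical knowledge, and the sketch omits the certainty factors and explanation facilities that real expert systems had.

```python
# Hypothetical toy knowledge base: each conclusion maps to a list of
# alternative premise sets. None of these names come from MYCIN itself.
RULES = {
    "recommend_treatment_x": [["infection_type_1", "no_allergy_x"]],
    "infection_type_1": [["finding_a", "finding_b"], ["lab_result_c"]],
}


def prove(goal, facts, rules, seen=frozenset()):
    """Backward chaining: a goal holds if it is a known fact, or if all
    premises of at least one rule concluding it can themselves be proved."""
    if goal in facts:
        return True
    if goal in seen:  # guard against circular rule definitions
        return False
    for premises in rules.get(goal, []):
        if all(prove(p, facts, rules, seen | {goal}) for p in premises):
            return True
    return False


observed = {"finding_a", "finding_b", "no_allergy_x"}
print(prove("recommend_treatment_x", observed, RULES))
```

Keeping the rules in a plain data structure, separate from the inference procedure, reflects the central design idea of expert systems: domain experts can extend the knowledge base without touching the reasoning code.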

These early expert systems laid the foundation for AI’s future applications in numerous specialized fields, including law, finance, and engineering. They demonstrated that AI could capture and utilize human expertise to solve complex problems, offering a glimpse into a future where AI systems could complement and enhance human capabilities across various domains, thereby revolutionizing industries and improving our quality of life.

Backpropagation Algorithm (1986)

The backpropagation algorithm, popularized in 1986 by David Rumelhart, Geoffrey Hinton, and Ronald Williams, marked a pivotal moment in the history of artificial intelligence and, more specifically, the realm of neural networks. It revolutionized the training process of artificial neural networks, opening doors to significant advancements in deep learning.

Before backpropagation, training neural networks was a formidable challenge. These networks comprise layers of interconnected artificial neurons, and adjusting the connections between them to enable accurate predictions or classifications was a complex and time-consuming task. Backpropagation introduced an elegant solution by enabling networks to learn from their mistakes.

This algorithm essentially works by iteratively adjusting the network’s internal parameters in response to the difference between its predictions and the actual outcomes (i.e., the error). By propagating this error backward through the layers of the network, it was possible to efficiently update the connection weights. As a result, neural networks could learn complex patterns and representations, becoming more capable of handling real-world data.
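The backward error propagation described above can be sketched for a tiny network with one hidden layer. Everything here is an illustrative assumption rather than the formulation of the 1986 work: the network size, the sigmoid activation, the squared-error loss, the learning rate, and the XOR toy data.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Small random weights for one hidden layer and a single output unit."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    """Return the hidden activations and the network output for one input."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hi for w, hi in zip(p["W2"], h)) + p["b2"])
    return h, y


def loss(p, data):
    """Mean of the per-sample squared error 0.5 * (y - t)^2."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)


def backprop(p, data):
    """Gradients of the loss, found by passing the output error backward."""
    n = len(data)
    g = {"W1": [[0.0] * len(row) for row in p["W1"]],
         "b1": [0.0] * len(p["b1"]),
         "W2": [0.0] * len(p["W2"]),
         "b2": 0.0}
    for x, t in data:
        h, y = forward(p, x)
        delta_out = (y - t) * y * (1 - y)  # error signal at the output unit
        g["b2"] += delta_out / n
        for j, hj in enumerate(h):
            g["W2"][j] += delta_out * hj / n
            # Error signal passed back through W2 to hidden unit j.
            delta_h = delta_out * p["W2"][j] * hj * (1 - hj)
            g["b1"][j] += delta_h / n
            for i, xi in enumerate(x):
                g["W1"][j][i] += delta_h * xi / n
    return g


def train(p, data, lr=0.5, steps=2000):
    """Plain gradient descent: move every parameter against its gradient."""
    for _ in range(steps):
        g = backprop(p, data)
        p["W1"] = [[w - lr * gw for w, gw in zip(row, grow)]
                   for row, grow in zip(p["W1"], g["W1"])]
        p["b1"] = [b - lr * gb for b, gb in zip(p["b1"], g["b1"])]
        p["W2"] = [w - lr * gw for w, gw in zip(p["W2"], g["W2"])]
        p["b2"] -= lr * g["b2"]
    return p


# Illustrative toy dataset (an assumption, not from the original text).
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

params = init_params(n_in=2, n_hidden=3)
print("loss before training:", loss(params, XOR_DATA))
train(params, XOR_DATA)
print("loss after training:", loss(params, XOR_DATA))
```

The key step is `delta_h`: the output error is multiplied by the connecting weight and by the slope of the hidden unit's activation, which assigns each hidden unit its share of the blame. Deeper networks repeat this step once per layer.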

The impact of backpropagation was profound. It enabled the development of deeper and more complex neural network architectures, leading to significant breakthroughs in various AI applications, such as computer vision, natural language processing, and speech recognition. The advent of deep learning owes much of its success to this algorithm, making it a cornerstone in modern AI research and technology. Today, deep learning models, empowered by backpropagation, continue to drive innovations across industries and shape the future of AI.

IBM’s Deep Blue (1997)

IBM’s Deep Blue’s historic victory over world chess champion Garry Kasparov in 1997 was a watershed moment in the history of artificial intelligence, serving as compelling evidence of AI’s capacity to excel in complex strategic games. The six-game match, which Deep Blue won 3.5 to 2.5, captivated the world, pitting human intellect against machine precision. Kasparov, considered one of the greatest chess players of all time, lost a match to a computer, fundamentally altering perceptions about the potential of AI.

Deep Blue’s triumph was a result of meticulous programming and an astonishing computational capacity that allowed it to evaluate 200 million positions per second, a feat beyond human capability. The computer’s ability to search enormous numbers of candidate move sequences and select strong moves in a matter of seconds highlighted the strategic prowess AI could bring to the table.
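The idea of choosing a move by evaluating many future positions can be sketched with minimax search and alpha-beta pruning, a family of techniques that chess programs of that era relied on. This is not Deep Blue's actual code, which ran on custom hardware with a hand-tuned evaluation function. The toy game is an assumption chosen for brevity: players alternately take one to three stones, and whoever takes the last stone wins.

```python
def alphabeta(stones, maximizing, alpha=-1, beta=1):
    """Return +1 if the maximizing player wins with best play, else -1."""
    if stones == 0:
        # The player who just moved took the last stone and won.
        return -1 if maximizing else 1
    if maximizing:
        value = -1
        for take in (1, 2, 3):
            if take <= stones:
                value = max(value, alphabeta(stones - take, False, alpha, beta))
                alpha = max(alpha, value)
                if alpha >= beta:
                    break  # remaining moves cannot change the result
        return value
    value = 1
    for take in (1, 2, 3):
        if take <= stones:
            value = min(value, alphabeta(stones - take, True, alpha, beta))
            beta = min(beta, value)
            if alpha >= beta:
                break  # remaining moves cannot change the result
    return value


def best_move(stones):
    """Pick the number of stones to take that leads to the best outcome."""
    moves = [take for take in (1, 2, 3) if take <= stones]
    return max(moves, key=lambda take: alphabeta(stones - take, False))


print(best_move(10))
```

In this toy game the search can reach the end of every line of play. A chess program cannot, so it stops at a fixed depth and scores the position with a heuristic evaluation function instead, and this is where the sheer number of positions evaluated per second starts to matter.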

This momentous event demonstrated that AI, when equipped with the right algorithms and computational resources, could tackle problems that were once deemed the exclusive domain of human intelligence. Deep Blue’s victory marked a critical turning point, inspiring further exploration of AI’s potential in diverse fields beyond chess, including finance, healthcare, and autonomous vehicles, and solidifying its place in the annals of technological history.

AlphaGo (2016)

AlphaGo, developed by DeepMind, a subsidiary of Google, defeated Lee Sedol, one of the world’s strongest Go players, by four games to one in 2016, a groundbreaking achievement in the evolution of AI. The significance of this event is hard to overstate, as it showcased AI’s ability to conquer one of the most complex and deeply strategic board games ever devised.

Go, with a number of legal board positions estimated at around 10^170, far more than chess, presents a formidable challenge even for top human professionals. Its reliance on intuition and pattern recognition made it a particularly daunting test for AI. AlphaGo, however, employed a combination of advanced techniques, including deep neural networks, reinforcement learning, and Monte Carlo tree search, to master the game.

The victory of AlphaGo over Lee Sedol was not merely a demonstration of AI’s computational prowess but also a testament to its adaptability and capacity to learn from human expertise. It forced a reevaluation of AI’s capabilities and spurred new interest in the field, leading to further advancements in machine learning, neural networks, and reinforcement learning.

This milestone marked a turning point, not only in board games but also in practical applications of AI in fields such as healthcare, finance, and autonomous vehicles. AlphaGo’s triumph underscored the potential for AI to tackle complex, real-world problems that were once considered insurmountable, heralding a future where AI-driven solutions could reshape various aspects of our lives.

These milestones, although significant, only scratch the surface of AI’s intricate and ever-expanding history. The journey of artificial intelligence has been a continuous stream of innovation, marked by breakthroughs that have reshaped our understanding of computation, learning, and problem-solving. As computing power surged and algorithms became more sophisticated, AI evolved from early symbolic logic and expert systems to the neural networks and deep learning algorithms we see today.

The integration of AI into diverse domains such as healthcare, finance, transportation, and beyond has had a profound impact, revolutionizing processes and decision-making. AI-driven technologies like natural language processing, computer vision, and reinforcement learning have unlocked unprecedented opportunities and solutions for complex challenges. The pace of change is relentless, and the future promises further astonishing advancements, making the study and application of AI an exciting and pivotal endeavor in the ongoing evolution of technology and human progress.