Neural networks have emerged as a transformative force in artificial intelligence (AI). These systems of interconnected nodes, loosely inspired by the web of neurons in the human brain, underpin many of the field's most significant recent advances. Among the various types of neural networks, Recurrent Neural Networks (RNNs) stand out for their ability to process sequential data, which makes them well suited to tasks like natural language processing and time series prediction.

In recent years, organizations such as OpenAI have been at the forefront of AI innovation. OpenAI, founded with the mission of ensuring that artificial general intelligence (AGI) benefits all of humanity, has published influential research papers and developed state-of-the-art language models such as GPT-3, making it a prominent contributor to progress in AI and neural networks.

This introduction sets the stage for an exploration of the dynamic interplay between AI, neural networks, and RNNs, with a particular focus on the contributions and impact of OpenAI. As we delve deeper into these domains, we will uncover the ways in which neural networks, with their remarkable capacity to learn from data and adapt to diverse tasks, have ushered in a new era of AI capabilities.

From self-driving cars that use neural networks for image recognition to virtual assistants that understand and respond to natural language, the influence of AI and neural networks in our daily lives is palpable. Understanding the mechanisms that underpin these technologies, such as RNNs, sheds light on the inner workings of AI systems and their potential for transformational change across industries.

In this exploration, we will embark on a journey through the history, principles, and practical applications of neural networks and RNNs, guided by the visionary work of organizations like OpenAI. Together, we will unravel the intricate tapestry of AI and neural networks and discover how they are reshaping the way we interact with technology and perceive the future.


Who introduced neural network in AI?

Warren McCulloch and Walter Pitts introduced the concept of neural networks in their groundbreaking 1943 paper, “A Logical Calculus of the Ideas Immanent in Nervous Activity,” more than a decade before “artificial intelligence” was named as a field. McCulloch, a neuroscientist, and Pitts, a logician, collaborated to develop a formal model of a simplified artificial neuron.

In their work, McCulloch and Pitts sought to describe the basic functioning of biological neurons in mathematical and computational terms. They proposed a simple model of a neuron that takes binary inputs, compares their combined activity against a fixed threshold, and produces a binary output, so that networks of such units can compute logical operations. This model laid the foundation for what we now call an artificial neuron and inspired the later perceptron.

Although rudimentary by today’s standards, the McCulloch-Pitts neuron model was a crucial step in the development of neural networks. It demonstrated how a network of interconnected artificial neurons could perform logical and computational tasks. While their initial work was limited in scope, it inspired future researchers to expand upon these concepts and develop more sophisticated neural network models.

Over the decades, neural networks have evolved significantly, with contributions from many researchers, including Frank Rosenblatt’s perceptron in the late 1950s, the popularization of backpropagation in the 1980s, and the rise of deep learning in the 2000s and 2010s. These advancements have led to the neural networks we use today for a wide range of AI applications.

What is the history of artificial neurons?

The history of artificial neurons dates back to the mid-20th century, when scientists began conceptualizing and modeling artificial neurons to understand and simulate the functioning of biological neurons. A pivotal early contribution was made by Warren McCulloch and Walter Pitts in their 1943 paper, “A Logical Calculus of the Ideas Immanent in Nervous Activity.” In this paper, they introduced a simplified mathematical model of a neuron, now known as the McCulloch-Pitts neuron.

The McCulloch-Pitts neuron was a binary model, meaning it could only produce two output states: firing (1) or not firing (0), based on the weighted sum of its inputs compared to a threshold. This simple model laid the foundation for later developments in artificial neural networks.
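
To make the threshold idea concrete, here is a minimal Python sketch of a McCulloch-Pitts-style unit. It assumes the weighted-sum-and-threshold simplification described above (the 1943 paper itself treated excitatory and inhibitory inputs somewhat differently), and the function name `mcculloch_pitts` is purely illustrative. With both weights set to 1, a threshold of 2 makes the unit behave like logical AND, while a threshold of 1 makes it behave like logical OR.

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Hypothetical helper: fire (1) if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Unit weights with threshold 2 behave like AND; threshold 1 behaves like OR.
for a in (0, 1):
    for b in (0, 1):
        and_out = mcculloch_pitts([a, b], [1, 1], threshold=2)
        or_out = mcculloch_pitts([a, b], [1, 1], threshold=1)
        print(f"{a} {b} -> AND={and_out} OR={or_out}")
```

Note that the weights and threshold are fixed by hand here: the unit computes, but it does not learn.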

The true breakthrough in artificial neuron history came in the late 1950s, when Frank Rosenblatt developed the Perceptron. The Perceptron was an artificial neuron that could learn from data by adjusting its weights from labeled examples, and it played a critical role in early machine learning and pattern recognition research. It is considered one of the earliest neural network models.

While the Perceptron and the McCulloch-Pitts neuron were simplistic compared to today’s neural networks, they were the conceptual ancestors of modern artificial neural networks. Over the decades, the development of more complex neural network architectures and learning algorithms has allowed artificial neurons to become a fundamental building block of deep learning, revolutionizing fields like computer vision, natural language processing, and reinforcement learning. This historical progression has paved the way for the AI advancements we see today.

History of AI and Neural Networks

The history of artificial intelligence (AI) and neural networks is a fascinating journey that spans several decades, with significant advancements and setbacks along the way. Here’s a brief overview of the key milestones in the development of AI and neural networks:

Early Foundations (1940s-1950s)

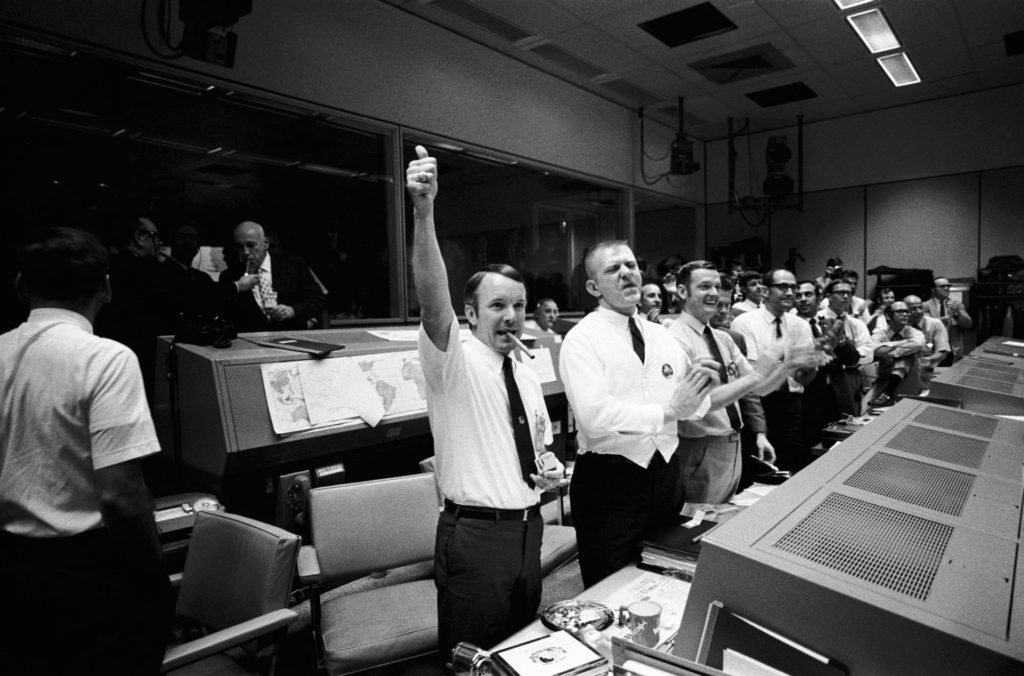

The 1940s marked a pivotal period in the history of artificial intelligence (AI) as the world witnessed the development of electronic computers. It was during this era that the theoretical foundations for AI were established by visionaries like Alan Turing and John von Neumann.

Alan Turing, a British mathematician and computer scientist, played a particularly influential role. His groundbreaking work, including the Turing machine concept he described in 1936, laid the groundwork for the theoretical principles that underpin modern computers and AI. In his 1950 paper “Computing Machinery and Intelligence,” Turing proposed what became known as the “Turing Test” (he called it the imitation game). The test asks whether a machine can exhibit intelligent behavior indistinguishable from that of a human. This seminal idea not only sparked discussions about machine intelligence but also set a benchmark for AI researchers to aspire to.

The 1940s and early 1950s thus laid the conceptual foundations of AI, driven by the ambitions of early pioneers like Turing and von Neumann, who foresaw the potential of computers to emulate human thought processes and behaviors. These foundational ideas continue to shape AI research and development to this day.

Birth of Neural Networks (1940s-1950s)

Warren McCulloch and Walter Pitts made a significant contribution to the history of artificial neurons with their groundbreaking 1943 paper, “A Logical Calculus of the Ideas Immanent in Nervous Activity.” In this paper, they introduced the concept of artificial neurons as a simplified mathematical model to mimic the behavior of biological neurons in the human brain. They described how these artificial neurons could process binary inputs and produce binary outputs, depending on whether the weighted sum of inputs exceeded a certain threshold. This foundational concept marked the inception of artificial neurons and set the stage for the development of neural networks.

Building upon the work of McCulloch and Pitts, Frank Rosenblatt took a crucial step forward in the late 1950s with the creation of the Perceptron. The Perceptron was a tangible embodiment of the artificial neuron concept, and it added the ability to learn from data through a supervised learning process. While the Perceptron had limitations (a single-layer perceptron can only learn to separate classes that are linearly separable, so it cannot, for example, learn the XOR function), it represented a fundamental advancement in the field of neural networks, offering the promise of machine learning and pattern recognition capabilities. These early milestones paved the way for the subsequent evolution of artificial neural networks, ultimately leading to the complex and powerful neural network models we have today.
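
The learning step that set the Perceptron apart can be sketched in a few lines of Python. This is a minimal version of the classic perceptron learning rule, assuming 0/1 labels, a step activation and a learning rate of 1; the helper names `train_perceptron` and `predict` are illustrative, not Rosenblatt's own notation. Whenever the unit misclassifies an example, its weights and bias are nudged toward the correct answer. On AND, which is linearly separable, training settles on a working set of weights; on XOR, which is not, no choice of weights can classify all four cases.

```python
def predict(w, b, x):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, epochs=10, lr=1.0):
    """Perceptron learning rule: adjust weights only on misclassified examples."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(w, b, x)  # -1, 0 or +1
            if error != 0:
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
    return w, b


AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]

XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
w_xor, b_xor = train_perceptron(XOR)
print(all(predict(w_xor, b_xor, x) == t for x, t in XOR))  # False: XOR is not linearly separable
```

The XOR failure is not a matter of training longer: a single threshold unit draws one straight line through the input space, and no single line separates XOR's positive cases from its negative ones.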

Early AI Research (1950s-1960s)

The Dartmouth Workshop in 1956 marked a significant milestone in the history of artificial intelligence (AI). This historic event, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, is often regarded as the birth of AI as a distinct field of research. At this workshop, a group of visionary researchers came together with the ambitious goal of exploring the feasibility of creating intelligent machines.

John McCarthy, in particular, played a pivotal role: he coined the term “artificial intelligence” in the 1955 proposal for the workshop and helped outline the principles that would guide AI research. The workshop discussions laid the foundation for future AI endeavors and set the stage for the development of AI as a scientific discipline.

Around the time of the Dartmouth Workshop, early AI systems began to take shape. Allen Newell and Herbert A. Simon, working with Cliff Shaw, created the Logic Theorist, a program capable of proving mathematical theorems, and later the General Problem Solver, which aimed to tackle a wide range of problems by applying problem-solving rules. These pioneering AI systems demonstrated the potential of computational approaches to mimic human problem-solving abilities, setting the stage for further exploration and innovation in the field of AI.

The AI Winter (1970s-1980s)

The period known as the “AI Winter” during the 1970s and 1980s was marked by a significant slowdown in artificial intelligence research and development. This slowdown was primarily attributed to a combination of factors, including overhyped expectations and a gap between the lofty goals set for AI and the actual capabilities of the technology at the time.

One major contributing factor was the overly optimistic public perception of AI’s potential. Earlier AI pioneers had made grand promises about the rapid development of intelligent machines that could perform tasks at a human level. When these expectations weren’t met, there was a loss of confidence in the field, leading to decreased funding and support.

Additionally, AI research faced technical challenges related to limitations in computing power and data availability. Many AI systems of the time struggled to handle real-world complexity, and the algorithms used were often too simplistic to achieve the desired results.

The AI winter served as a sobering period when researchers reevaluated their approaches and expectations. It also laid the groundwork for later AI resurgence by highlighting the need for more realistic goals, improved algorithms, and advancements in computing technology. This historical perspective underscores the importance of managing expectations in the development of emerging technologies.

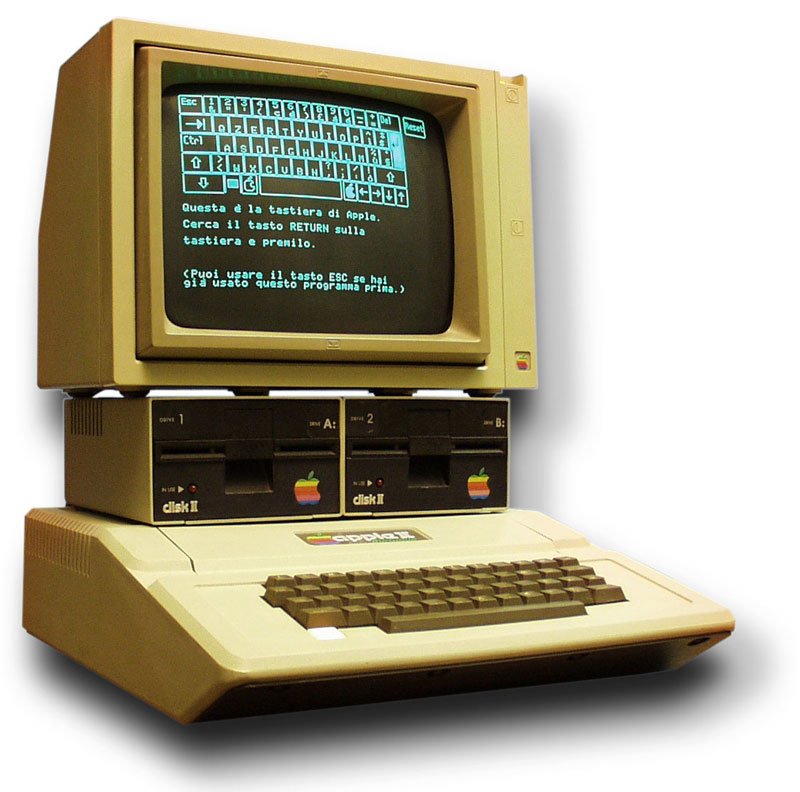

Connectionism and Backpropagation (1980s)

In the 1980s, a resurgence of interest in neural networks occurred, giving rise to a paradigm known as connectionism. Connectionism emphasized the use of neural networks as a means to model and understand cognitive processes and to solve complex problems in artificial intelligence (AI). This marked a departure from earlier AI approaches that were more rule-based and symbolic in nature.

One of the key developments during this period was the refinement and popularization of the backpropagation algorithm. Geoffrey Hinton, along with David Rumelhart and Ronald Williams, played a pivotal role in advancing this technique, most notably in an influential 1986 paper. Backpropagation, short for “backward propagation of errors,” became a crucial technique for training neural networks. Starting from the error (the difference between the predicted and actual outputs), it uses the chain rule to propagate that error backward from the output layer and compute how much each weight, including those in hidden layers, contributed to it. The weights can then be adjusted by gradient descent, enabling networks to learn from data and improve their performance over time.
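
The mechanics of backpropagation are easier to see in code. The following is a minimal, dependency-free Python sketch, assuming a tiny network with one hidden layer of sigmoid units, a single sigmoid output and squared-error loss; the function names (`init_params`, `forward`, `backprop`, `sgd_step`) are hypothetical and not taken from any particular paper or library. The backward pass applies the chain rule: the output error becomes a gradient for the output weights, then flows back through those weights to give gradients for the hidden layer. The demo trains on XOR, the classic problem a single-layer perceptron cannot solve.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Small random weights (an illustrative initialization scheme), zero biases."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    return (W1, b1, W2, 0.0)


def forward(params, x):
    """Hidden layer of sigmoid units followed by one sigmoid output unit."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y


def backprop(params, x, target):
    """Return the loss 0.5 * (y - target)**2 and its gradient for every parameter."""
    _, _, W2, _ = params
    h, y = forward(params, x)
    loss = 0.5 * (y - target) ** 2
    # Output unit: dL/dz_out = (y - target) * sigmoid'(z_out), where sigmoid' = y * (1 - y).
    delta_out = (y - target) * y * (1.0 - y)
    grad_W2 = [delta_out * hj for hj in h]
    grad_b2 = delta_out
    # Hidden units: send the output error backward through W2, then through each sigmoid.
    delta_h = [delta_out * w * hj * (1.0 - hj) for w, hj in zip(W2, h)]
    grad_W1 = [[d * xi for xi in x] for d in delta_h]
    grad_b1 = list(delta_h)
    return loss, (grad_W1, grad_b1, grad_W2, grad_b2)


def sgd_step(params, grads, lr):
    """Gradient descent: move every parameter a small step against its gradient."""
    W1, b1, W2, b2 = params
    gW1, gb1, gW2, gb2 = grads
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    W2 = [w - lr * g for w, g in zip(W2, gW2)]
    return (W1, b1, W2, b2 - lr * gb2)


if __name__ == "__main__":
    XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
    params = init_params(n_in=2, n_hidden=4)
    for epoch in range(5001):
        total = 0.0
        for x, t in XOR:
            loss, grads = backprop(params, x, t)
            params = sgd_step(params, grads, lr=0.5)
            total += loss
        if epoch % 1000 == 0:
            print(f"epoch {epoch}: total loss {total:.4f}")
    print([round(forward(params, x)[1], 2) for x, _ in XOR])
```

How well this fits XOR depends on the random initialization and the learning rate, so the printed loss is the thing to watch. A common sanity check for hand-written backpropagation is to compare each analytic gradient against a finite-difference estimate of the loss.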

The combination of connectionism and backpropagation laid the groundwork for the neural network renaissance that would follow in the late 20th century and continue into the 21st century. These advancements ultimately paved the way for the development of deep learning, which has become a cornerstone of contemporary AI, powering applications ranging from image recognition and natural language understanding to autonomous vehicles and recommendation systems.

Neural Network Renaissance (late 1980s-early 1990s)

In the late 20th century, neural network research saw a resurgence of interest and innovation. One of the critical milestones during this period was the development of more sophisticated neural network architectures, notably Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs).

RNNs introduced recurrent connections, which feed the network's hidden state from one time step back in at the next. This lets an RNN process sequences of arbitrary length while retaining information about earlier inputs, making RNNs well suited to tasks like natural language processing, speech recognition, and time series analysis. Meanwhile, CNNs were designed to excel in computer vision tasks, thanks to their ability to automatically learn hierarchical features from image data.
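
The recurrent connection is the key difference from a feed-forward network: the hidden state computed at one step is fed back in at the next. Below is a minimal Python sketch of an Elman-style RNN forward pass, assuming a tanh activation and small hand-picked toy weights (the function name `rnn_forward` and all weight values are illustrative). Because the same weights are reused at every step, the function accepts sequences of any length, and because the state carries over, the same inputs fed in a different order yield a different final state.

```python
import math


def rnn_forward(inputs, w_xh, w_hh, b_h, h0=None):
    """Run a minimal Elman-style RNN over a sequence of input vectors.

    The same weights are reused at every step:
        h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
    Returns the list of hidden states, one per time step.
    """
    hidden_size = len(b_h)
    h = list(h0) if h0 is not None else [0.0] * hidden_size
    states = []
    for x in inputs:
        new_h = []
        for j in range(hidden_size):
            z = b_h[j]
            z += sum(w * xi for w, xi in zip(w_xh[j], x))  # input -> hidden
            z += sum(w * hk for w, hk in zip(w_hh[j], h))  # previous hidden -> hidden (recurrent)
            new_h.append(math.tanh(z))
        h = new_h
        states.append(h)
    return states


# Hypothetical toy weights: 1-dimensional input, 2 hidden units.
w_xh = [[0.5], [-0.3]]
w_hh = [[0.8, 0.1], [0.2, 0.7]]
b_h = [0.0, 0.1]

early = rnn_forward([[1.0], [0.0], [0.0]], w_xh, w_hh, b_h)  # signal at the start
late = rnn_forward([[0.0], [0.0], [1.0]], w_xh, w_hh, b_h)   # same signal at the end
print(early[-1])
print(late[-1])
```

In practice the weights would be learned (for example with backpropagation through time) rather than chosen by hand.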

Despite these advancements, progress in neural network research was hampered by two significant challenges. First, computing power was limited, preventing researchers from training large, deep networks effectively. Second, labeled datasets large enough for supervised learning were scarce.

These limitations, compounded by the broader downturn often called the second “AI winter” in the late 1980s and early 1990s, dampened enthusiasm and funding for neural network research. However, this period of relative stagnation laid the groundwork for future breakthroughs, as researchers continued to refine neural network algorithms while the computational resources and data needed for the next wave of AI advancements gradually became available.

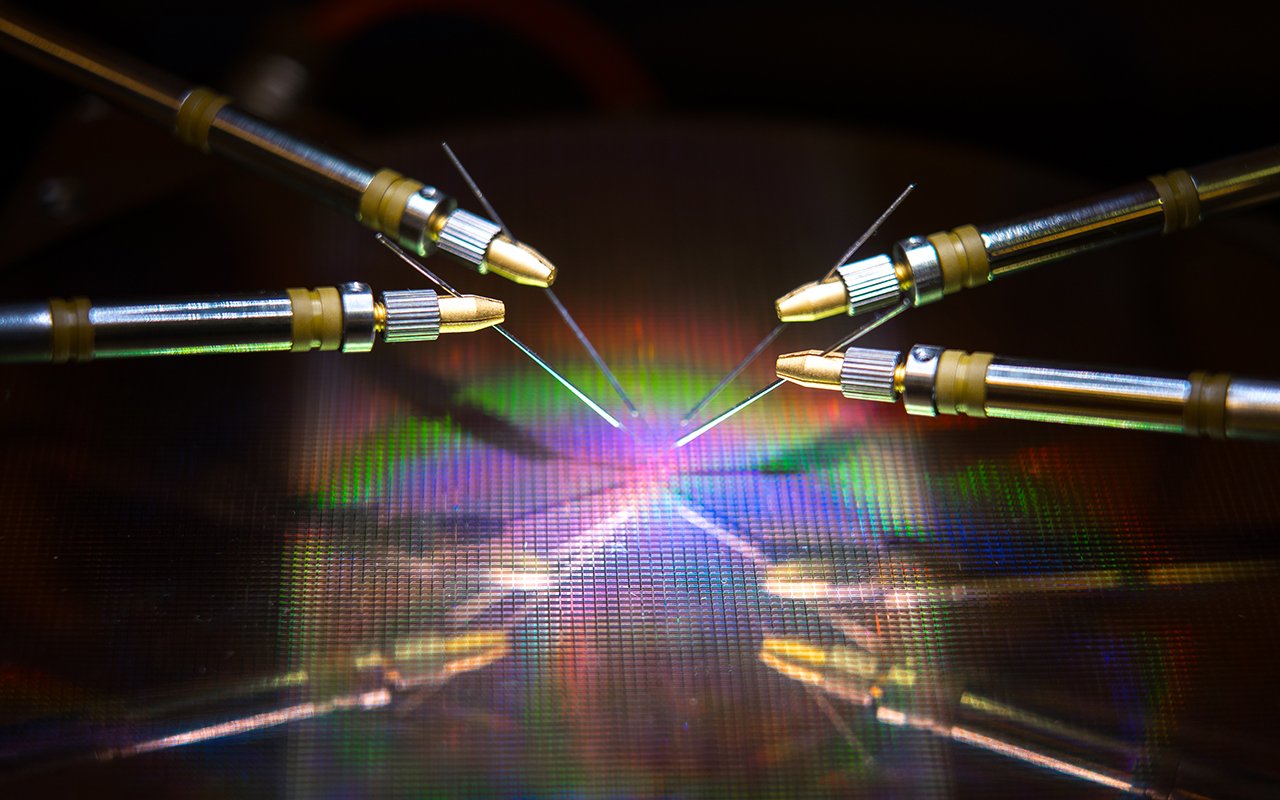

AI Resurgence (2010s-present)

The resurgence of AI in the 2010s marked a transformative period in the field, primarily driven by the remarkable advancements in deep learning. Deep learning techniques involve the training of deep neural networks, which are composed of multiple layers of interconnected artificial neurons. These neural networks, when provided with vast amounts of data, have exhibited unprecedented capabilities and have catalyzed breakthroughs in various AI domains.

One of the most prominent areas where deep learning has made a profound impact is computer vision. Deep neural networks, particularly Convolutional Neural Networks (CNNs), have revolutionized image recognition, enabling machines to identify objects and patterns with accuracy that rivals human performance on some benchmarks. This has found applications in autonomous vehicles, medical image analysis, and more.

Similarly, in natural language processing, deep learning models like Transformers have set new benchmarks in tasks such as language translation, sentiment analysis, and question-answering systems. These advancements have paved the way for the development of virtual assistants and chatbots that can engage in more natural and context-aware conversations.

Technology companies and research labs such as Google, Facebook (now Meta), and OpenAI have been instrumental in driving these breakthroughs. Their substantial investments in research and development, coupled with access to vast amounts of data, computational resources, and talent, have accelerated the progress of AI and deep learning, pushing the boundaries of what is possible in artificial intelligence. The collaborative efforts of academia and industry have collectively shaped the AI landscape, making the 2010s a pivotal decade in the history of artificial intelligence.

Contemporary Applications (2010s-present)

AI and neural networks have become integrated into numerous facets of our daily lives, ushering in a new era of technological convenience and innovation. Virtual assistants, such as Siri and Alexa, exemplify how AI-powered natural language processing and voice recognition have changed the way we interact with our devices. These digital companions not only answer questions but also control smart home devices, set reminders, and provide personalized recommendations, making them a familiar presence in many homes.

Self-driving cars represent another monumental application of AI and neural networks. These vehicles use neural networks for tasks like object detection, path planning, and decision-making, with the aim of improving safety and convenience on our roadways. If they become more prevalent, they could fundamentally transform our transportation systems.

In healthcare, AI-driven diagnostics use neural networks to analyze medical images, detect anomalies, and aid in disease diagnosis. This can speed up the diagnostic process and, in some applications, improve accuracy, potentially saving lives.

From finance to entertainment, education to manufacturing, AI and neural networks continue to permeate various sectors, enhancing efficiency, productivity, and user experiences. With their ever-expanding capabilities, the influence of these technologies in our daily lives is poised to grow, shaping the future in ways we can only begin to imagine.

The history of AI and neural networks indeed reflects a rollercoaster of enthusiasm and setbacks. Early promises of AI in the 1950s were followed by periods of disillusionment, commonly known as “AI winters,” due to limited progress and high expectations. However, recent years have witnessed a remarkable resurgence in AI, fueled by deep learning and neural networks. Breakthroughs in computer vision, natural language understanding, and other domains have reignited optimism. Researchers and engineers are pushing boundaries, embracing the potential of AI to revolutionize healthcare, finance, transportation, and more. As we enter this new era, the journey of AI and neural networks continues to evolve, offering promising solutions to complex challenges.