The journey from Alan Turing’s visionary contributions to the modern world of the Internet of Things (IoT) is a remarkable story of technological evolution. Turing, a pioneering mathematician and computer scientist, laid the theoretical groundwork for the digital age with concepts such as the Turing machine, which he introduced in 1936. Little did he know that his ideas would help set in motion a cascade of innovations leading to the connected, data-driven landscape we now know as the Internet of Things.

Turing’s work during World War II, notably his role in breaking the Enigma cipher, and his postwar design for the Automatic Computing Engine (ACE), one of the first detailed designs for a stored-program computer, made him a central figure in the development of early computers. His concept of the Turing machine, an abstract device capable in principle of carrying out any algorithmic computation, became a theoretical cornerstone of modern computing. This marked the first step in the journey towards the IoT.

Fast-forward to the present day, and we find ourselves immersed in a digital realm where billions of everyday objects, from smart refrigerators to industrial sensors, are interconnected through the Internet, forming what we now call the Internet of Things. The evolution of IoT is nothing short of astonishing, bridging the gap between the physical and digital worlds.

What are the 4 types of IoT?

The Internet of Things (IoT) encompasses a diverse range of applications and use cases. One common way to categorize them is into four primary types based on their functionality and scope:

Consumer IoT (CIoT)

This category includes IoT devices and applications designed for individual consumers. CIoT devices are commonly found in smart homes and include items like smart thermostats, connected appliances, wearable fitness trackers, and voice-activated assistants like Amazon Alexa or Google Home. These devices are geared toward enhancing convenience, energy efficiency, and lifestyle.

Commercial IoT

Commercial IoT focuses on applications within business and commercial settings. It includes solutions like asset tracking, inventory management, and industrial automation. For example, in agriculture, sensors can monitor soil conditions and crop health, while in retail, IoT devices can manage inventory levels and enhance customer experiences through personalized marketing.

Industrial IoT (IIoT)

IIoT is a subset of IoT specifically tailored for industrial and manufacturing environments. It involves the use of sensors, data analytics, and automation to improve operational efficiency, reduce downtime, and enhance safety. IIoT applications can be found in factories, power plants, logistics, and supply chain management.

Enterprise IoT (EIoT)

EIoT refers to IoT solutions that are deployed within large organizations or enterprises to improve processes and workflows. It includes applications such as facility management, energy monitoring, and smart building systems. EIoT can help organizations optimize resource utilization and reduce operational costs.

These four types of IoT represent the diverse range of applications and industries that benefit from the connectivity and data-driven capabilities of IoT technology. As IoT continues to evolve, it is expected that these categories will expand, overlap, and lead to even more innovative use cases across various sectors, driving greater efficiency, productivity, and convenience in our increasingly interconnected world.

What are IoT examples?

The Internet of Things (IoT) is a vast and rapidly expanding network of interconnected devices that can communicate and share data over the internet. IoT has found applications in various domains, transforming the way we live and work. Here are some notable IoT examples:

Smart Home Devices

IoT has revolutionized the way we manage our homes. Smart thermostats like Nest allow remote temperature control, while smart lights like Philips Hue can be controlled via smartphones. Smart speakers such as Amazon Echo and Google Home host voice assistants (Amazon Alexa and Google Assistant) that automate tasks and answer questions.

Wearable Health Devices

IoT is enhancing healthcare with devices like Fitbit and Apple Watch, which monitor vital signs, track physical activity, and even detect health anomalies. These wearables can transmit data to healthcare providers for remote monitoring and analysis.

Smart Cities

Municipalities are using IoT to improve urban life. Smart traffic lights can optimize traffic flow, reducing congestion and emissions. Smart waste management systems monitor trash levels in bins, optimizing collection routes and schedules.

Industrial IoT (IIoT)

In manufacturing and industry, IoT sensors collect data on machinery performance and product quality in real time. This allows predictive maintenance, reducing downtime and improving efficiency.
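As a rough illustration of the idea, the sketch below flags a machine for inspection when the moving average of its recent vibration readings crosses a limit. It is a deliberately simple stand-in: real predictive-maintenance systems typically use statistical or machine-learning models. The function name `maintenance_alerts`, the window size, the mm/s unit, and the 7.0 threshold are all hypothetical values chosen for illustration.

```python
from collections import deque
from typing import Deque, Iterable, List


def maintenance_alerts(readings: Iterable[float], window: int = 5,
                       threshold: float = 7.0) -> List[int]:
    """Return the indices at which the moving average of the last `window`
    readings exceeds `threshold`.

    Hypothetical example: readings are vibration levels in mm/s and the
    threshold is an illustrative limit, not a vendor or standards value.
    """
    recent: Deque[float] = deque(maxlen=window)  # oldest reading drops out automatically
    alerts: List[int] = []
    for i, value in enumerate(readings):
        recent.append(value)
        # Only evaluate once the window is full, so the average is never
        # computed over fewer than `window` samples.
        if len(recent) == window and sum(recent) / window > threshold:
            alerts.append(i)
    return alerts


# A bearing whose vibration climbs steadily over ten readings (made-up data).
vibration = [2.0, 2.1, 1.9, 2.0, 2.2, 6.0, 8.0, 9.0, 9.5, 10.0]
print(maintenance_alerts(vibration))  # [9]
```

Averaging over a window trades a little reaction time for fewer false alarms caused by one-off spikes in a single reading.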

Logistics and Supply Chain

IoT-enabled tracking devices provide real-time visibility into the movement and condition of goods during transit. This helps streamline logistics operations and enhance security.

Connected Vehicles

IoT plays a key role in connected and autonomous vehicles. These cars use sensors and data connectivity to navigate, communicate with other vehicles and roadside infrastructure, and make driving safer.

Environmental Monitoring

IoT sensors collect data on air quality, pollution levels, and climate conditions. This information aids in environmental protection and disaster prediction.

Retail and Inventory Management

IoT is used for inventory tracking, helping retailers keep products in stock. Retailers also use IoT to enhance customer experiences with smart shelves and personalized shopping recommendations.

Energy Management

Smart grids and IoT-enabled devices help optimize energy consumption. Consumers can monitor and control their energy usage, promoting sustainability.

These IoT examples represent just a fraction of the possibilities in this rapidly expanding field. As technology continues to evolve, we can expect IoT to continue reshaping industries and improving various aspects of our daily lives.

Why is IoT used?

The Internet of Things (IoT) is used for a multitude of reasons, primarily driven by its ability to enhance efficiency, convenience, and decision-making across various sectors. Here are some key reasons why IoT is widely adopted:

Efficiency and Automation

IoT enables the automation of tasks and processes. Devices and sensors can collect data in real time, allowing for better resource management, reduced energy consumption, and streamlined operations. For example, smart thermostats can adjust temperatures based on occupancy to save energy.
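The occupancy example can be sketched as a simple control rule. This is a minimal illustration, not how any particular product works: the setpoints, the deadband (a small tolerance band that stops the heater from switching on and off constantly), and the names `ThermostatConfig` and `heating_command` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThermostatConfig:
    # Illustrative values only; real products let users choose these.
    comfort_setpoint_c: float = 21.0  # target temperature when the room is occupied
    setback_setpoint_c: float = 17.0  # lower, energy-saving target when it is empty
    deadband_c: float = 0.5           # tolerance around the setpoint to avoid rapid cycling


def heating_command(current_temp_c: float, occupied: bool, heating_on: bool,
                    cfg: ThermostatConfig = ThermostatConfig()) -> bool:
    """Decide whether the heater should run, based on the latest sensor readings."""
    setpoint = cfg.comfort_setpoint_c if occupied else cfg.setback_setpoint_c
    if current_temp_c < setpoint - cfg.deadband_c:
        return True   # too cold: turn (or keep) the heater on
    if current_temp_c > setpoint + cfg.deadband_c:
        return False  # warm enough: turn (or keep) the heater off
    return heating_on  # inside the deadband: leave the heater as it is


print(heating_command(19.0, occupied=True, heating_on=False))  # True
print(heating_command(19.0, occupied=False, heating_on=True))  # False
```

When the occupancy sensor reports an empty room, the same 19 °C reading no longer triggers heating, which is where the energy saving comes from.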

Data Collection and Analysis

IoT devices generate vast amounts of data that can be analyzed to gain insights and make informed decisions. In agriculture, soil sensors can provide data on moisture levels, helping farmers optimize irrigation and crop yields.

Improved Safety and Security

IoT enhances safety and security through applications like smart home security systems, wearable health devices, and industrial sensors that monitor equipment for potential hazards.

Cost Reduction

IoT can lead to cost savings by reducing maintenance costs (predictive maintenance), optimizing logistics and supply chains, and minimizing waste in manufacturing processes.

Enhanced User Experience

In the consumer space, IoT devices enhance the user experience. Smart speakers, for instance, offer voice-activated convenience, while wearable fitness trackers help individuals monitor their health and fitness goals.

Environmental Impact

IoT can contribute to sustainability efforts by monitoring and reducing energy consumption, optimizing transportation routes, and reducing waste.

Healthcare and Telemedicine

IoT plays a crucial role in healthcare, enabling remote patient monitoring, early disease detection, and the delivery of telemedicine services, which are particularly valuable in remote or underserved areas.

Industrial and Manufacturing Advancements

In the industrial sector, IoT technologies are central to Industry 4.0, enabling the creation of “smart factories” with interconnected machines, predictive maintenance, and real-time quality control.

Urban Planning

IoT aids in creating smarter cities by monitoring traffic, air quality, and infrastructure. This data can be used to improve city planning and resource allocation.

Supply Chain Management

IoT enhances supply chain visibility by tracking goods and materials in real time, reducing delays and inventory costs.

In essence, IoT is used to harness the power of data and connectivity to improve efficiency, productivity, safety, and sustainability across various domains, revolutionizing the way we live, work, and interact with the world around us.

The Evolution of IoT

The journey from Alan Turing’s pioneering work in the mid-20th century to the Internet of Things (IoT) in the 21st century represents a significant evolution in computing, technology, and connectivity. Let’s delve into this transition in detail:

Alan Turing and the Birth of Modern Computing

Alan Turing, a British mathematician and computer scientist, is an iconic figure in the history of computing. His contributions during World War II and his invention of the Turing machine profoundly shaped the course of modern computing, and he is often called “the father of computer science.”

During World War II, Turing played a pivotal role in the Allied effort to break the Enigma cipher used by the German military. At Bletchley Park he led Hut 8, the section responsible for German naval Enigma, and designed the electromechanical Bombe, building on earlier work by Polish cryptanalysts. The intelligence gained from deciphering Enigma traffic significantly influenced the course of the war.

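However, Turing’s enduring legacy extends beyond cryptography. In his 1936 paper “On Computable Numbers,” he introduced the Turing machine, a theoretical construct that became a foundation of computer science. A Turing machine is a simple yet powerful model of computation: an unbounded tape divided into cells, a read/write head, a finite set of states, and a table of rules that says, for each state and symbol read, what to write, which way to move, and which state to enter next. Turing also showed that a single universal Turing machine can simulate any other Turing machine, which is the theoretical idea behind general-purpose, programmable computers.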
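To make the tape, head, states, and rule table concrete, here is a small simulator in Python. It is a sketch, not a reference implementation: the rule format `(state, symbol) -> (write, move, next_state)`, the blank symbol `_`, and the example machine (which adds 1 to a binary number) are choices made for illustration. This is an ordinary single-purpose machine; a universal machine would instead read another machine’s rule table from its tape.

```python
from typing import Dict, Tuple

BLANK = "_"
# (symbol to write, head move: -1 = left, 0 = stay, +1 = right, next state)
Action = Tuple[str, int, str]
Rules = Dict[Tuple[str, str], Action]


def run_turing_machine(rules: Rules, tape_input: str, state: str, head: int,
                       halt_state: str = "halt", max_steps: int = 10_000) -> str:
    """Run a one-tape Turing machine and return the non-blank part of the tape."""
    # A sparse tape: any cell never written reads as BLANK, so the tape is
    # effectively unbounded in both directions.
    tape = {i: ch for i, ch in enumerate(tape_input)}
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the machine may never halt")
        # Look up the rule for the current state and the symbol under the head.
        write, move, state = rules[(state, tape.get(head, BLANK))]
        tape[head] = write
        head += move
        steps += 1
    used = [i for i, ch in tape.items() if ch != BLANK]
    if not used:
        return ""
    return "".join(tape.get(i, BLANK) for i in range(min(used), max(used) + 1))


# Binary increment: start on the rightmost digit and propagate the carry leftwards.
increment: Rules = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry continues left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),  # ran off the left end: new leading 1
}

print(run_turing_machine(increment, "1011", "carry", head=3))  # 1100
print(run_turing_machine(increment, "111", "carry", head=2))   # 1000
```

The step limit is a practical guard: Turing showed in 1936 that no general procedure can decide in advance whether an arbitrary machine will eventually halt.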

The principles of the Turing machine, in which a computation proceeds step by step by following a fixed set of rules, laid the conceptual groundwork for digital computers. Today’s computers, from personal laptops to supercomputers, can compute exactly what a Turing machine can, given enough memory, so Turing’s model still describes the limits of what they can compute. His visionary ideas continue to influence and inspire advances in computer science, artificial intelligence, and technology, shaping the digital world in which we live. Alan Turing’s legacy endures as a testament to the transformative power of mathematical and computational thinking.

The Emergence of Early Computers

After Alan Turing’s groundbreaking theoretical work on computation and the development of the Turing machine, the world entered a transformative era where electronic computers transitioned from theoretical constructs to tangible realities. This shift laid the foundation for the modern computing landscape we now take for granted.

One of the earliest and most iconic electronic computers to emerge from this transition was the ENIAC (Electronic Numerical Integrator and Computer), completed in 1945 and publicly unveiled in 1946. ENIAC was a colossal machine that filled a large room and contained roughly 18,000 vacuum tubes and miles of wiring. It was designed to compute artillery firing tables for the U.S. Army, and one of its first tasks, carried out after the war had ended, was a set of calculations for the hydrogen bomb program at Los Alamos. Despite its immense size and limited capabilities by today’s standards, ENIAC represented a significant leap forward in computing technology.

Shortly after ENIAC, in 1951, the UNIVAC (Universal Automatic Computer) was introduced. UNIVAC was one of the first commercially produced computers and marked a pivotal moment in computing history. It was more versatile and capable than its predecessors, making it suitable for a broader range of applications beyond scientific and military purposes. UNIVAC’s commercial availability helped pave the way for the widespread adoption of computers in various industries, from business and finance to scientific research.

In essence, the transition from Turing’s theoretical concepts to real-world electronic computers, epitomized by machines like ENIAC and UNIVAC, marked the birth of modern computing. These early computers, though large and expensive, demonstrated the immense potential of computational technology, foreshadowing the computing revolution that would follow in the decades to come.

The Evolution of Computing

Over the decades, the evolution of computing technology has been nothing short of remarkable. Beginning with the pioneering work of Alan Turing and his theoretical framework for computation, the transition to practical, real-world computing saw several pivotal advancements.

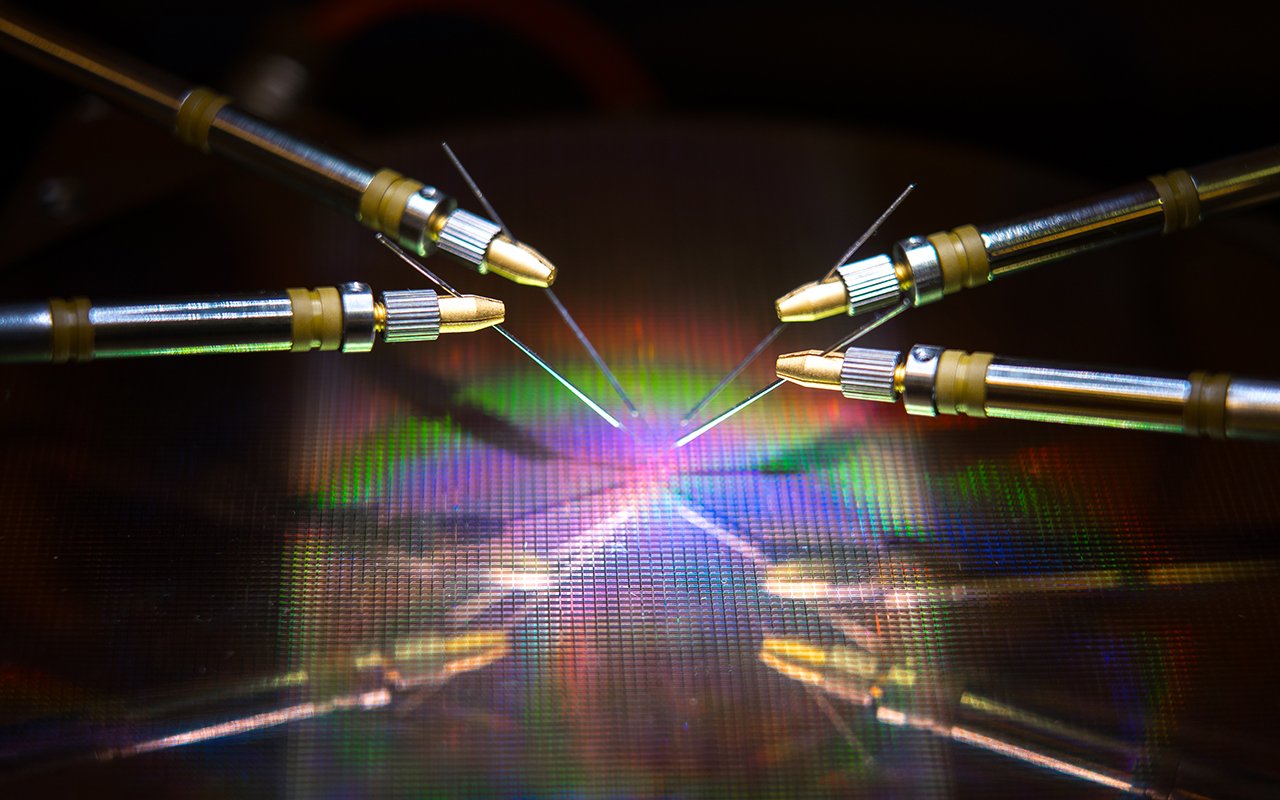

One significant leap was the replacement of vacuum tubes with transistors in the mid-20th century. Transistors, which acted as electronic switches, revolutionized computing by making machines significantly smaller, more reliable, and energy-efficient. This shift paved the way for the development of smaller and more powerful computers that could be used beyond specialized scientific or military applications.

However, the true game-changer came in the late 1950s with the invention of the integrated circuit, often referred to as the microchip. Jack Kilby and Robert Noyce independently conceived this revolutionary technology, which allowed for the integration of multiple electronic components onto a single semiconductor substrate. This breakthrough not only reduced the size of computers but also dramatically increased their processing power and reliability.

As the years progressed, advancements in semiconductor manufacturing led to the miniaturization of components, making computers more affordable and accessible. The 1970s and 1980s witnessed the birth of personal computers, like the iconic Apple II and IBM PC, which brought computing into homes and offices around the world. These personal computers laid the foundation for the digital revolution, empowering individuals and businesses with newfound computational capabilities and setting the stage for the information age.

The transition from vacuum tubes to transistors and the subsequent invention of the integrated circuit marked pivotal moments in the history of computing. These developments fueled the miniaturization and accessibility of computers, democratizing access to computing power and setting the stage for the transformative impact of personal computers on society and the global economy.

The Rise of the Internet

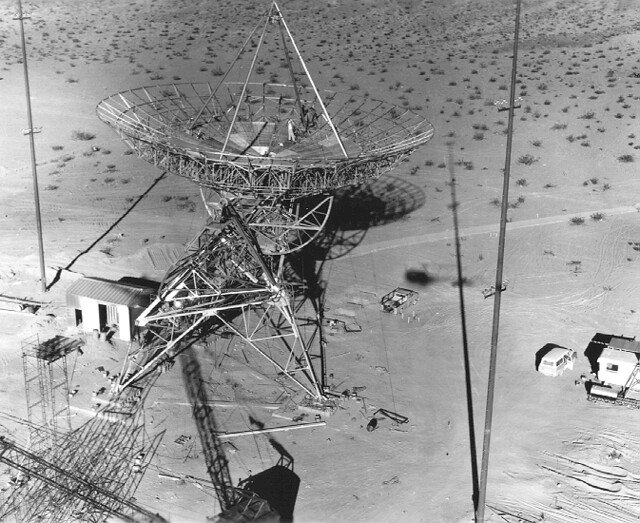

The development of the Internet, a transformative force in the modern world, can be traced back to key milestones in the late 20th century. Two pivotal moments in this journey were the creation of ARPANET and the World Wide Web.

In the late 1960s, the U.S. Department of Defense’s Advanced Research Projects Agency (ARPA) began developing ARPANET, laying the groundwork for the modern internet. ARPANET was built mainly to let researchers at different institutions share scarce computing resources; the popular claim that it was designed to survive a nuclear attack reflects earlier research on survivable networks, such as Paul Baran’s work at RAND, rather than ARPANET’s own goals. ARPANET was one of the first networks to put packet switching into practice: data is broken into small packets that are routed independently and reassembled at the destination, instead of being sent over a dedicated circuit. Its success paved the way for the Transmission Control Protocol and Internet Protocol (TCP/IP) suite, which ARPANET adopted as its standard in 1983 and which became the common language of the emerging internet.
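The core idea of packet switching, splitting data into independently routed packets and putting them back in order at the destination, can be sketched in a few lines. The `Packet` fields and function names below are hypothetical and far simpler than any real protocol (there are no checksums, retransmissions, or routing tables); shuffling the packet list stands in for packets taking different routes and arriving out of order.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this packet within the message
    total: int      # number of packets that make up the message
    payload: bytes  # a slice of the original data


def packetize(data: bytes, size: int = 8) -> List[Packet]:
    """Split `data` into numbered packets of at most `size` bytes."""
    chunks = [data[i:i + size] for i in range(0, len(data), size)] or [b""]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets: List[Packet]) -> bytes:
    """Rebuild the message from packets that may have arrived in any order."""
    if not packets:
        raise ValueError("no packets received")
    total = packets[0].total
    by_seq = {p.seq: p.payload for p in packets}
    missing = [i for i in range(total) if i not in by_seq]
    if missing:
        # A real protocol would ask the sender to retransmit these.
        raise ValueError(f"missing packets {missing}")
    return b"".join(by_seq[i] for i in range(total))


message = b"Packets can take different routes through the network."
packets = packetize(message)
random.shuffle(packets)  # simulate out-of-order arrival over different paths
assert reassemble(packets) == message
print(f"{len(packets)} packets reassembled correctly")
```

Because each packet carries its own sequence number, the receiver does not care which path a packet took, which is what lets a packet-switched network route around busy or failed links.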

In 1989, Tim Berners-Lee, a British computer scientist working at CERN, proposed the World Wide Web (WWW). Berners-Lee’s creation made the internet user-friendly and accessible to the masses. He developed HTTP (Hypertext Transfer Protocol) and HTML (Hypertext Markup Language) to enable the creation of web pages connected by hyperlinks. This breakthrough allowed users to easily navigate and share documents and resources across the internet.

Together, ARPANET’s infrastructure and the World Wide Web’s user-friendly interface formed the foundation for the global, interconnected web we know today. This dynamic combination unleashed a wave of innovation, communication, and collaboration, transforming industries, education, commerce, and virtually every aspect of modern life. The internet has become an essential part of our daily routines, connecting people and information across the globe, revolutionizing communication, and shaping the digital age.

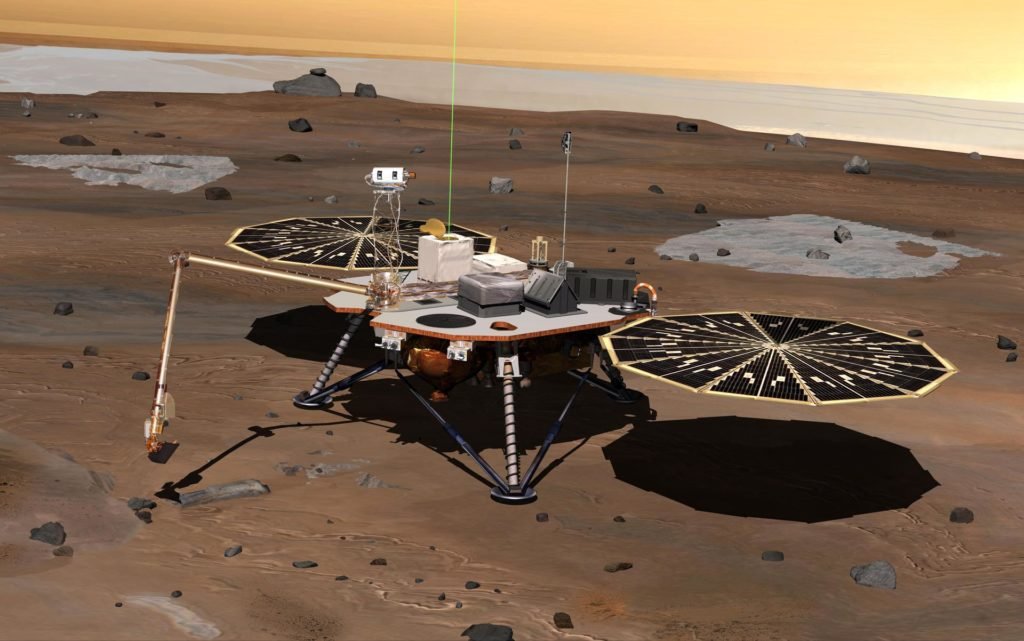

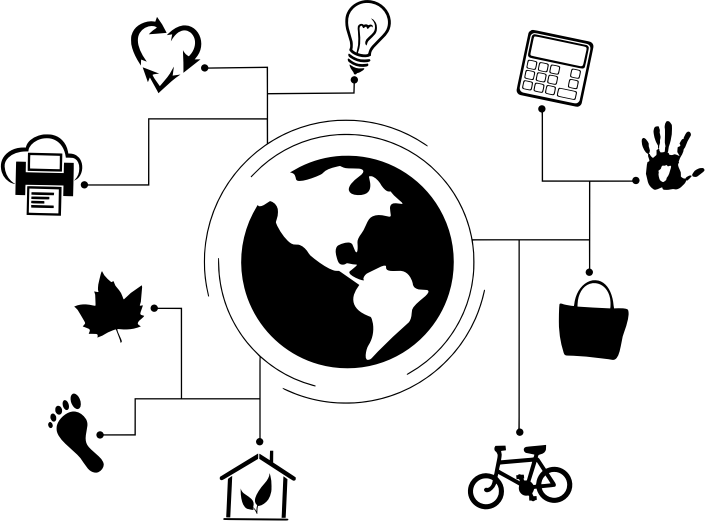

IoT: The Next Frontier

The Internet of Things (IoT) stands as a transformative leap in the realm of computing and connectivity. It encompasses a vast network of physical objects, or “things,” equipped with sensors, software, and network connectivity that let them exchange data over the internet. This fusion of the physical and digital worlds enables a plethora of applications that were once the stuff of science fiction.

IoT devices, the linchpin of this technological revolution, come in a staggering variety. They range from the familiar, such as smart thermostats that adjust room temperatures for energy efficiency and comfort, to wearable fitness trackers that monitor our health and activity levels. Beyond the consumer realm, IoT extends into industries like manufacturing, agriculture, healthcare, and transportation. Industrial sensors oversee machinery, ensuring timely maintenance and optimizing production processes, while autonomous vehicles employ IoT technologies to navigate, communicate with infrastructure, and enhance road safety.

At its core, IoT is about gathering data from the physical world, analyzing it intelligently, and leveraging the insights derived to enhance efficiency, productivity, and convenience across diverse domains. This paradigm shift in connectivity and data utilization is reshaping how we live, work, and interact with the world, promising a future marked by unprecedented innovation and efficiency.

Key Characteristics of IoT

The Internet of Things (IoT) is a technological paradigm characterized by its multifaceted components and capabilities, each serving a specific purpose in the broader ecosystem.

At the heart of IoT are sensors and data. IoT devices are embedded with an array of sensors, including temperature, humidity, motion, and more. These sensors act as the eyes and ears of the IoT, constantly collecting real-time data from the physical world. This data forms the foundation for informed decision-making, enabling organizations and individuals to monitor and control various aspects of their environments.

Connectivity is another fundamental pillar of IoT. Devices leverage diverse communication protocols such as Wi-Fi, Bluetooth, cellular networks, and low-power options like LoRaWAN to establish connections. This connectivity extends IoT’s reach, allowing devices to transmit data to centralized servers or to communicate directly with each other in intricate networks.

Interoperability is vital for the seamless functioning of IoT ecosystems. Devices from different manufacturers and platforms must work together harmoniously. Open standards and protocols play a critical role in ensuring that IoT devices can exchange data and collaborate effectively.

The copious amounts of data generated by IoT devices necessitate advanced analytics tools. Big data and analytics enable the extraction of meaningful insights from the massive datasets produced by IoT. This insight-driven decision-making is integral to optimizing processes, reducing costs, and enhancing efficiency.

Moreover, IoT is closely tied to automation. It facilitates automation across various domains, from smart homes, where devices can respond to user preferences and environmental conditions autonomously, to industrial processes, where IoT enables predictive maintenance and real-time control. Automation enhances efficiency and convenience, freeing up human resources for more complex tasks.

In short, IoT’s core components (sensors, connectivity, interoperability, big data analytics, and automation) coalesce into a transformative force that revolutionizes industries, improves quality of life, and shapes the future of technology and connectivity.

Challenges and Concerns

IoT’s widespread adoption and integration into various aspects of our lives bring immense benefits, but they also introduce critical challenges that must be addressed. Two of the most pressing pairs of concerns are security and privacy, and scalability and interoperability.

- Security and Privacy Concerns

IoT devices often collect and transmit sensitive data, ranging from personal information in smart homes to critical data in industrial applications. Ensuring the security of these devices and the data they handle is paramount. Weaknesses in device security can lead to unauthorized access, data breaches, and potentially even physical harm in cases like connected vehicles or medical devices. Therefore, robust security protocols, encryption, and regular updates are essential to safeguard IoT ecosystems.

Additionally, privacy concerns arise from the vast amount of data generated by IoT devices. Users may be unaware of the extent to which their data is collected and used. Striking a balance between data utility and privacy is a complex challenge that necessitates clear regulations and user consent mechanisms.

- Scalability and Interoperability

As the IoT ecosystem continues to expand rapidly, ensuring scalability and interoperability becomes increasingly critical. Scalability refers to the ability of IoT infrastructure to handle a growing number of devices and a growing volume of data. It requires robust network architecture and data management solutions capable of accommodating the IoT’s continued growth.

Interoperability concerns revolve around the ability of different IoT devices and platforms to work seamlessly together. As the IoT landscape comprises diverse devices and protocols, achieving compatibility and integration among them is essential to maximize the system’s efficiency and utility. Industry standards and open protocols are crucial in addressing this challenge.

Addressing the security, privacy, scalability, and interoperability challenges posed by IoT is fundamental to unlocking its full potential while maintaining trust and reliability in IoT applications. Collaboration among industry stakeholders, government bodies, and cybersecurity experts is essential to develop and implement effective solutions.

The evolution from Alan Turing’s pioneering work to the Internet of Things (IoT) era signifies an astounding journey through the annals of computing and connectivity. Turing’s theoretical concepts, such as the Turing machine, laid the foundation for modern computing, paving the way for the development of electronic computers and, eventually, the IoT.

Over time, technology underwent a profound transformation. Early electronic computers like ENIAC gave way to increasingly powerful and miniaturized computers, culminating in the advent of personal computing. Simultaneously, the birth of the internet, catalyzed by ARPANET and the World Wide Web, revolutionized global connectivity, enabling information sharing on an unprecedented scale.

The IoT represents the latest chapter in this narrative. It involves a web of interconnected smart devices, equipped with sensors and capable of real-time data exchange. This interconnectedness has permeated diverse domains, from smart homes to healthcare and manufacturing, fundamentally reshaping how we interact with the world.

The journey from Turing’s theoretical constructs to the IoT epitomizes the astonishing progress in technology, shaping our lives and industries in ways once deemed unimaginable. It underscores the relentless march of innovation, pushing the boundaries of what is possible in the digital age.