The evolution of computing has been intricately woven into the fabric of human progress, with a pivotal chapter unfolding in the form of the microprocessor revolution. This transformative journey, beginning in the early 1970s, marked a paradigm shift from cumbersome computing machines to the compact powerhouses that drive our modern digital age. At the forefront of this revolution was the Intel 4004, a modest 4-bit microprocessor that laid the foundation for a technological landscape yet to be explored.

Before the advent of microprocessors, computers were massive, resource-intensive entities, confined to specialized environments and largely inaccessible to the broader population. The breakthrough came with the Intel 4004 in 1971, a pioneering chip that consolidated the functions of a computer’s central processing unit onto a single integrated circuit. This not only revolutionized the scale and efficiency of computing but also set in motion a cascade of advancements that would redefine the possibilities of technology.

As Intel continued to push the boundaries with subsequent releases like the 8008 and 8080, other players such as Motorola and Zilog joined the race, contributing to the rapid evolution of microprocessor technology. The introduction of the 8086 marked the inception of the x86 architecture, which became the cornerstone for numerous Intel processors, forming the backbone of modern computing systems.

The impact of microprocessors reached far beyond traditional computing realms. From the emergence of personal computers to the proliferation of compact electronic devices, these technological marvels became integral to daily life. The constant pursuit of smaller transistors and increased computational power, as encapsulated by Moore’s Law, fueled an exponential growth curve that has shaped our digital landscape.

In this exploration of the microprocessor’s history and evolution, we delve into the intricate details of technological milestones, from the early days of the Intel 4004 to the cutting-edge processors that drive innovation today. Join us on a journey through time, unraveling the story of how a small chip sparked a revolution, transforming the way we live, work, and connect in the digital era.

Which was the first microprocessor?

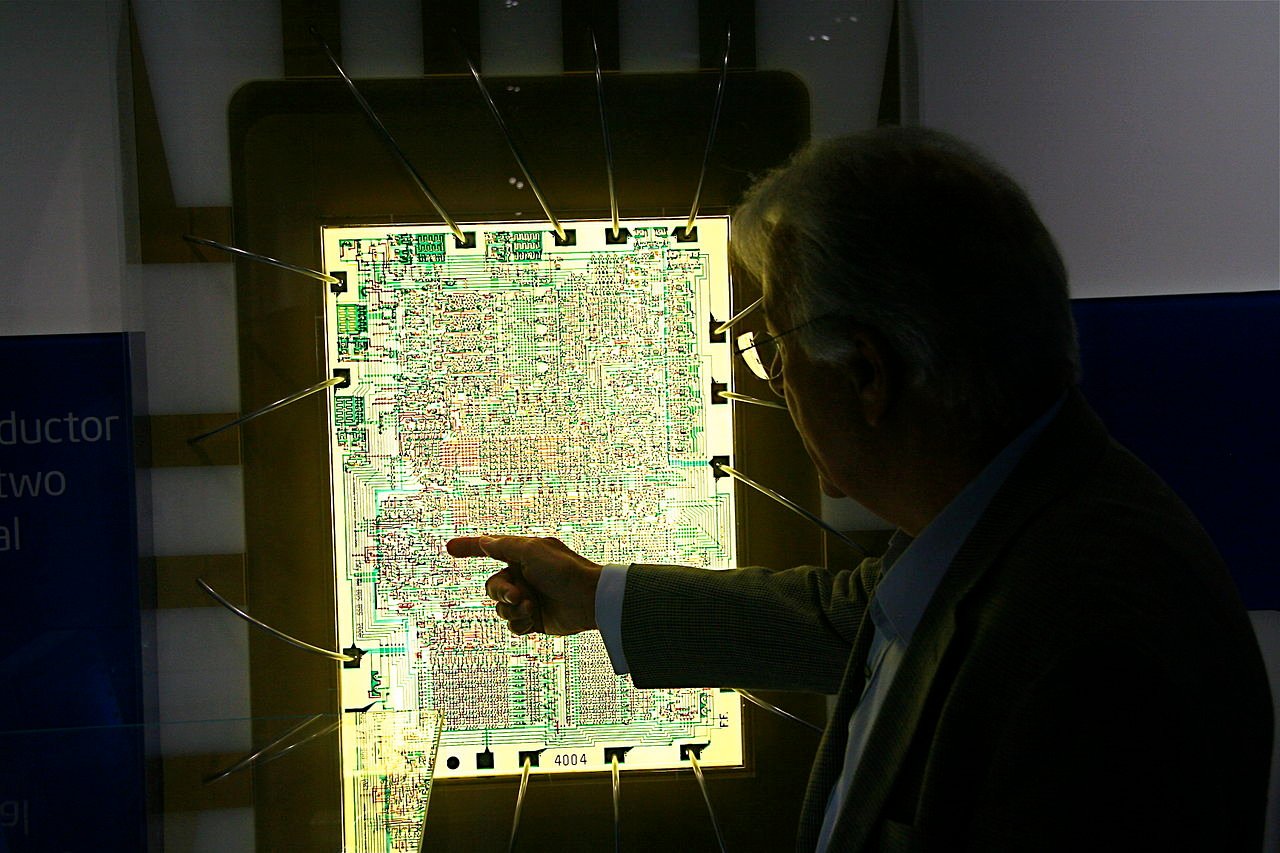

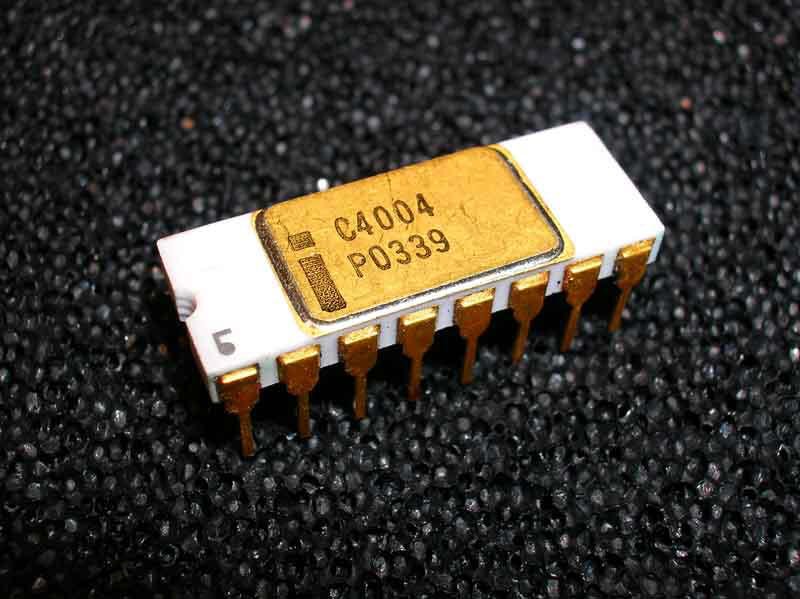

The Intel 4004 holds the distinction of being the world’s first microprocessor. Introduced in 1971 by Intel Corporation, the 4004 was a groundbreaking achievement in the realm of computing. Designed by Federico Faggin, Ted Hoff, and Stanley Mazor, the 4004 was a 4-bit processor initially intended for use in calculators. What set it apart was its integration of central processing unit functions onto a single integrated circuit, making it a compact yet powerful solution. With a clock speed of 740 kHz and the ability to execute around 60,000 operations per second, the Intel 4004 laid the foundation for subsequent advancements in microprocessor technology, ultimately shaping the trajectory of modern computing.

What company invented the microprocessor?

Intel Corporation is credited with inventing the microprocessor, marking a pivotal moment in the history of computing. In 1971, a team of Intel engineers, including Federico Faggin, Ted Hoff, and Stanley Mazor, developed the world’s first microprocessor, the Intel 4004. This revolutionary 4-bit processor integrated the essential components of a computer’s central processing unit onto a single small piece of silicon.

The Intel 4004 was initially designed for use in calculators but quickly demonstrated its potential for broader applications. Its compact size, affordability, and efficiency paved the way for a new era in computing technology. Following the success of the 4004, Intel continued to innovate, releasing subsequent microprocessors like the 8008 and 8080, each offering increased capabilities and versatility.

Intel’s groundbreaking contribution to microprocessor development extended beyond individual products. The company played a crucial role in shaping the x86 architecture, which became the foundation for a wide range of processors, establishing Intel as a leader in the semiconductor industry. The invention of the microprocessor by Intel laid the groundwork for the evolution of personal computers and the proliferation of digital technology, influencing various aspects of modern life.

What is the story of Intel 4004?

The Intel 4004, introduced in 1971, is a seminal chapter in the history of microprocessors. Conceived by Intel engineers Federico Faggin, Ted Hoff, and Stanley Mazor, this groundbreaking 4-bit processor represented a paradigm shift in computing technology. Its creation stemmed from a need for a versatile and compact processor for Busicom, a Japanese calculator manufacturer.

The Intel 4004, with a clock speed of 740 kHz, integrated 2,300 transistors onto a single chip, a revolutionary concept at the time. Designed for use in calculators, it consolidated the functions of a central processing unit into a small, cost-effective package. This innovation not only reduced the size and cost of computing devices but also paved the way for the development of microprocessors that would become the backbone of modern computing.

The 4004’s architecture included sixteen 4-bit registers and could address 4,096 bytes of program memory. Although initially met with skepticism, its success laid the groundwork for further advancements in microprocessor technology. Intel’s subsequent releases, such as the 8008 and 8080, built upon the 4004’s achievements, propelling the microprocessor revolution forward and transforming computing from a realm of large, specialized machines to one of compact and accessible devices. The Intel 4004 stands as a pioneer, marking the dawn of a new era in computing.
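The register and addressing figures above can be checked with a little arithmetic. The sketch below is illustrative only: the 12-bit address width is an assumption added here (it is the width that yields 4,096 distinct addresses), and the constant and function names are hypothetical.

```python
# Back-of-the-envelope arithmetic for the 4004 figures quoted above.
REGISTER_COUNT = 16       # from the text
REGISTER_WIDTH_BITS = 4   # from the text
ADDRESS_WIDTH_BITS = 12   # assumption: the width needed to reach 4,096 addresses


def distinct_values(width_bits):
    """Number of distinct values an unsigned field of this width can hold."""
    return 2 ** width_bits


register_storage_bits = REGISTER_COUNT * REGISTER_WIDTH_BITS
values_per_register = distinct_values(REGISTER_WIDTH_BITS)
addressable_locations = distinct_values(ADDRESS_WIDTH_BITS)

print(register_storage_bits)   # 64
print(values_per_register)     # 16
print(addressable_locations)   # 4096
```

In other words, each register holds one value from 0 to 15, and all sixteen registers together hold only 64 bits of working storage.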

Dawn of the Microprocessor: Pioneering the Revolution in Computing

The “Dawn of the Microprocessor” refers to a crucial period in the history of computing when the first microprocessors were introduced. A microprocessor is an integrated circuit (IC) that contains the functions of a computer’s central processing unit (CPU) on a single chip. This innovation revolutionized the world of computing by making it possible to create smaller, more powerful, and more affordable computers.

The key events leading to the dawn of the microprocessor include:

Early Computing Background

Before the advent of the microprocessor era, computers existed as colossal and financially burdensome machines primarily harnessed by governments, research institutions, and large corporations. These early computing behemoths, prevalent in the mid-20th century, operated on the foundation of discrete components like vacuum tubes and transistors.

Constructed with these rudimentary elements, computers demanded substantial physical space and consumed significant power resources. The intricate network of vacuum tubes and transistors comprised the essential building blocks of these machines, facilitating data processing and computation. However, this architecture came with inherent limitations, including high maintenance requirements, limited processing speed, and an imposing physical footprint.

The extensive use of vacuum tubes not only posed challenges in terms of overheating but also necessitated frequent replacements, making these systems both operationally complex and financially burdensome. The reliance on such bulky, resource-intensive technologies restricted widespread access to computing power, confining its utility to entities with substantial financial resources.

The advent of the microprocessor marked a revolutionary departure from this era of cumbersome computing. By consolidating essential functions onto a single integrated circuit, microprocessors transformed computing into a more accessible, efficient, and cost-effective realm, laying the foundation for the democratization of technology and the modern digital age.

Intel 4004 – The First Microprocessor (1971)

In 1971, the landscape of computing underwent a seismic shift with the introduction of the Intel 4004, hailed as the world’s inaugural microprocessor. Conceived by the ingenious trio of Intel engineers Federico Faggin, Ted Hoff, and Stanley Mazor, the 4004 was initially envisioned to power calculators.

The significance of the Intel 4004 lay in its design: a complete 4-bit central processing unit on one chip. Earlier processors were built from many separate components, so consolidating those functions into a single integrated circuit was what made this microprocessor truly groundbreaking. This integration marked a transformative moment in technology, drastically reducing the physical size of computing devices and making them more compact and accessible.
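To make "4-bit" concrete, here is a minimal sketch of how a processor that handles only four bits at a time can still add larger numbers: it works through them one 4-bit chunk at a time and carries the overflow forward. This is an illustration of the general idea, not the 4004's actual instruction set, and the function names are hypothetical.

```python
NIBBLE_MASK = 0xF  # a 4-bit value ranges from 0 to 15


def add_nibbles(a, b, carry_in=0):
    """Add two 4-bit values; return (4-bit result, carry-out)."""
    total = (a & NIBBLE_MASK) + (b & NIBBLE_MASK) + carry_in
    return total & NIBBLE_MASK, total >> 4


def add_wide(a, b, nibbles=4):
    """Add two wider numbers 4 bits at a time, propagating the carry."""
    result, carry = 0, 0
    for i in range(nibbles):
        shift = 4 * i
        digit, carry = add_nibbles(a >> shift, b >> shift, carry)
        result |= digit << shift
    return result  # a carry out of the top nibble is dropped


print(add_nibbles(9, 8))      # (1, 1): 17 does not fit in 4 bits, so 1 is carried
print(add_wide(1234, 4321))   # 5555
```

The trade-off is speed: a wider processor can do in one step what a 4-bit design needs several steps to complete.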

The 4004’s impact was threefold, as it not only ushered in a new era of miniaturized yet powerful computing but also significantly lowered production costs and reduced power consumption. This pioneering microprocessor laid the foundation for subsequent advancements, influencing the trajectory of technology and setting the stage for the microprocessor revolution that would redefine the realms of computation and electronics.

Microprocessor Evolution

Following the groundbreaking introduction of the Intel 4004, Intel continued to push the boundaries of microprocessor technology with subsequent releases. The Intel 8008, launched as the world’s first 8-bit microprocessor in 1972, marked a significant advancement. An enhanced architecture provided increased computational power, making it more versatile for a broader range of applications.

This evolution paved the way for further innovations, laying the foundation for the 8080 microprocessor, released in 1974. The Intel 8080, a successor to the 8008, demonstrated even greater capabilities with an expanded 8-bit architecture, higher clock speeds, and improved overall performance. These successive releases not only reflected Intel’s commitment to pushing the limits of processing power but also played a pivotal role in expanding the scope of microprocessor applications.

Simultaneously, the microprocessor landscape saw the entry of other key players. Companies like Motorola and Zilog entered the market, contributing to the rapid development and diversification of microprocessor technology. This competitive environment fostered innovation, leading to a proliferation of processors with varied features and capabilities. The collective efforts of these companies propelled the microprocessor revolution forward, shaping a landscape where computing power became increasingly accessible, versatile, and integral to various industries and everyday life.

8086 and x86 Architecture

In the pivotal year of 1978, Intel unveiled the 8086 microprocessor, a momentous development that would reverberate through the realms of computing. Signifying the commencement of the x86 architecture, the 8086 laid the cornerstone for a lineage of processors that would come to define the landscape of modern computing. This architecture, characterized by its instruction set and memory-addressing capabilities, emerged as a unifying force, providing a standardized platform for software developers and hardware manufacturers.

The x86 architecture’s impact was far-reaching, shaping the trajectory of Intel’s processor designs for decades to come. The 8086 itself boasted a 16-bit architecture, a significant leap from its predecessors, enabling more extensive memory addressing and enhanced computational capabilities. This architectural foundation became the bedrock for subsequent iterations, including the 80286, 80386, and the Pentium series, solidifying Intel’s dominance in the microprocessor market.

Decades after its inception, the x86 architecture continues to be ubiquitous, underpinning a vast array of computing devices. From personal computers to servers and beyond, the legacy of the 8086 resonates in contemporary technology. The x86 architecture’s adaptability and scalability have ensured its enduring relevance, making it a linchpin in the evolution of processors and a testament to the enduring impact of Intel’s pioneering strides in 1978.

Microprocessor Impact

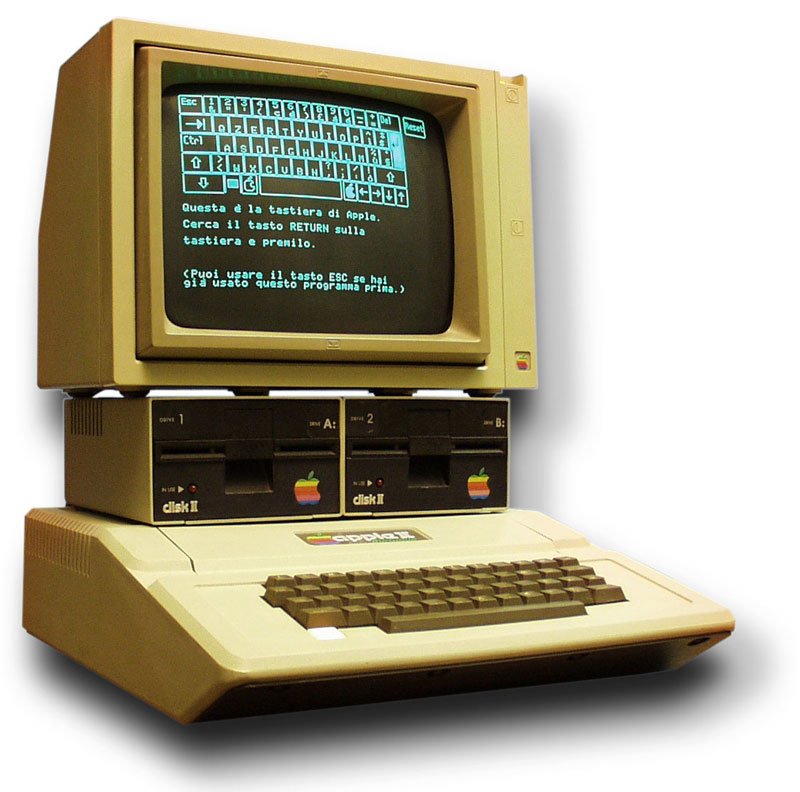

The advent of microprocessors marked a profound turning point in the trajectory of computing technology. This transformative innovation ushered in an era that saw the emergence of personal computers (PCs), fundamentally altering the landscape of digital accessibility. As microprocessors evolved, they became the heart of PCs, making computing power accessible to individuals and smaller businesses. The democratization of computing capabilities catalyzed a revolution, enabling a broader demographic to harness the benefits of information technology.

Beyond the realm of traditional computing, microprocessors played a pivotal role in the diversification of electronic devices. From the inception of the Intel 4004 and its successors, these tiny yet powerful chips enabled the creation of more compact and affordable electronic gadgets. The ripple effect was felt across industries, ranging from the ubiquity of calculators and digital watches to the development of increasingly sophisticated systems.

The integration of microprocessors into diverse applications not only enhanced functionality but also facilitated the production of consumer-friendly, cost-effective devices. In essence, the introduction of microprocessors transcended the boundaries of computing, catalyzing an era where technology became not just a tool for specialists but an integral part of everyday life for people around the globe.

Technological Advancements

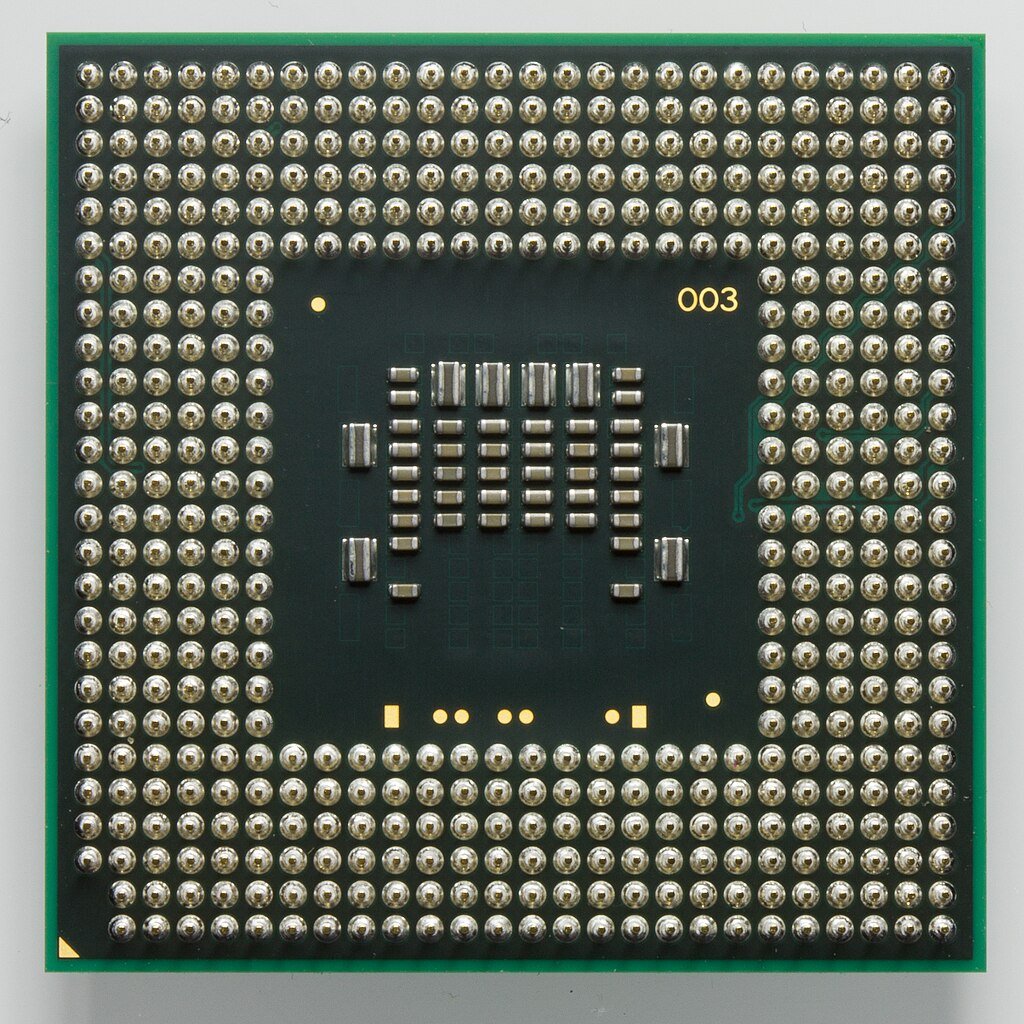

As technology marched forward, the evolution of microprocessors took center stage, ushering in subsequent generations that consistently pushed the boundaries of computational capabilities. These advancements were marked by the integration of ever more transistors and features onto increasingly compact chips.

A pivotal concept driving this relentless progress was encapsulated in Moore’s Law, a visionary proposition by Intel co-founder Gordon Moore. First stated in 1965, when Moore observed that the number of components on a chip was doubling roughly every year, the prediction was revised in 1975 to a doubling approximately every two years. That steady doubling of transistor density implied exponential growth in computational power.
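A doubling every two years compounds quickly. As a rough sketch, the snippet below projects transistor counts forward from the 4004’s 2,300 transistors in 1971. It is an idealized curve rather than real chip data, actual processors deviate from it, and the function name and parameters are hypothetical.

```python
def projected_transistors(year, base_year=1971, base_count=2300, doubling_years=2.0):
    """Idealized Moore's Law projection: the count doubles every `doubling_years`."""
    return base_count * 2 ** ((year - base_year) / doubling_years)


for year in (1971, 1973, 1981, 1991):
    print(year, round(projected_transistors(year)))
# 1971 2300
# 1973 4600
# 1981 73600
# 1991 2355200
```

Ten doublings in twenty years multiply the starting count by 1,024, which is why the curve is described as exponential.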

This forecast proved to be a guiding principle for the semiconductor industry, catalyzing relentless innovation and fostering an environment of continuous improvement. The adherence to Moore’s Law became a driving force behind the shrinking size of transistors and the simultaneous increase in their numbers, resulting in microprocessors that not only became more powerful but also more energy-efficient. This phenomenon became a cornerstone of the digital revolution, shaping the landscape of computing and enabling a myriad of technological marvels that define our contemporary era.

The dawn of the microprocessor stands as a pivotal milestone in computing history, propelling it from a niche realm to an indispensable facet of daily existence. This transformative technology, exemplified by innovations like the Intel 4004, revolutionized the computing landscape by condensing immense processing power into a single chip. Subsequently, the relentless evolution of microprocessor technology has left an indelible imprint on the modern digital sphere. Its influence extends beyond traditional computing, permeating realms like communication, where smartphones and networks thrive on advanced microprocessors.

Entertainment experiences, from gaming to multimedia streaming, are enriched by these powerful chips. Moreover, industries benefit from enhanced efficiency and productivity as microprocessors drive automation and sophisticated data processing. In essence, the journey from the microprocessor’s dawn to its current zenith has intricately woven computing into the fabric of everyday life, shaping how we communicate, entertain ourselves, and conduct business in the contemporary world.