Artificial Intelligence (AI) has emerged as one of the most transformative technologies of the modern era, revolutionizing industries, shaping our daily lives, and redefining the boundaries of what machines can achieve. To truly appreciate the profound impact of AI, it is essential to delve into its past and trace the remarkable journey through the timeline of artificial intelligence. This journey is a fascinating exploration of the history of AI, its evolution, and the groundbreaking milestones that have brought us to where we are today.

The history of AI is a testament to human ingenuity and the relentless pursuit of creating intelligent machines. It begins with the earliest inklings of AI concepts in the mid-20th century when visionary pioneers like Alan Turing and Norbert Wiener laid the theoretical foundations for what would become AI. Their work on computation, machine learning, and cybernetics set the stage for the grand narrative of AI’s development.

As we embark on this journey through the history of AI, we will witness its humble beginnings in rule-based systems and symbolic reasoning during the 1950s and 1960s. These early symbolic approaches, which later gave rise to the expert systems of the 1970s and 1980s, were precursors to the more sophisticated generative AI models we have today.

The evolution of AI gained momentum with the resurgence of neural networks and connectionism in the 1980s and 1990s. Researchers like Geoffrey Hinton and Yann LeCun advanced the methods for training these networks, providing a basis for later generative models that learn patterns from data and generate new data resembling it.

The 2010s ushered in the era of deep learning and Generative Adversarial Networks (GANs), propelling AI to new heights. GANs, introduced by Ian Goodfellow and colleagues in 2014, enabled machines to generate highly realistic content, most notably images, through adversarial training.

The late 2010s saw the rise of large language models, exemplified by models like GPT (Generative Pre-trained Transformer). These models marked a significant turning point, showcasing the immense potential of AI in understanding and generating human-like text.

As we navigate the captivating timeline of artificial intelligence, we will unravel the fascinating stories, breakthroughs, and challenges that have shaped its evolution. From its nascent origins to the present-day AI renaissance, this journey is a testament to human curiosity and the unceasing quest to create intelligent machines that can augment and enhance our lives in unprecedented ways.


What is generative AI, and what are some examples?

Generative AI, short for generative artificial intelligence, refers to a class of artificial intelligence systems designed to create new content that mimics human-generated content across various domains, such as text, images, music, and more. These systems leverage machine learning techniques, including neural networks, to produce data that appears authentic and creative. They are capable of autonomously producing content, often by learning patterns and structures from large datasets, making them versatile tools in numerous applications.

One prominent example of generative AI is the use of Generative Adversarial Networks (GANs). GANs consist of two neural networks, a generator and a discriminator, engaged in a competitive learning process. The generator attempts to create content (e.g., images), while the discriminator evaluates its authenticity. Over time, the generator improves its output to the point where it can generate highly realistic and convincing content, such as lifelike portraits, deepfake videos, or even entirely synthetic landscapes.

Another example is the use of large language models like OpenAI’s GPT (Generative Pre-trained Transformer), which excel at generating human-like text. These models can write articles, compose poetry, answer questions, and even engage in natural-sounding conversations.

Generative AI has transformative potential across industries, from creative content generation and art to healthcare and finance. However, its capabilities also raise ethical concerns, such as the creation of misleading information and deepfakes, which emphasize the importance of responsible and ethical AI development and deployment.

How did generative AI develop?

Generative AI, the ability of machines to create content that resembles human-generated data, has developed through several key stages:

Early Neural Networks

The foundation for generative AI was laid with the development of neural networks in the 1950s and 1960s, such as Frank Rosenblatt’s perceptron (1958). Researchers explored the idea of creating artificial neurons, loosely inspired by biological ones, that could learn from data.

Recurrent Neural Networks (RNNs)

In the 1980s and 1990s, RNNs emerged as a key technology for sequential data processing. They allowed for the generation of text and sequences, although their limitations, such as vanishing gradients, hindered their effectiveness.

Deep Learning Resurgence

In the 2000s, deep learning experienced a resurgence, thanks to advances in computational power and the availability of large datasets. This led to the development of more complex neural networks capable of handling generative tasks.

Generative Adversarial Networks (GANs)

Introduced by Ian Goodfellow in 2014, GANs brought a significant breakthrough in generative AI. GANs consist of a generator and a discriminator network that compete with each other. They have been used for generating realistic images, videos, and more.

Transformer Models

The introduction of the Transformer architecture in 2017 marked a major milestone. Models like BERT and GPT, based on the Transformer architecture, revolutionized natural language processing and text generation.

Large Pre-trained Models

The late 2010s and early 2020s saw the development of large pre-trained models like GPT-2 (2019) and GPT-3 (2020), which have achieved remarkable capabilities in generating human-like text across various domains.

Generative AI has evolved from early neural network concepts to sophisticated models like GPT-3, capable of generating high-quality text, images, and more. Ongoing research continues to refine and expand the capabilities of generative AI, making it an integral part of applications ranging from content creation and language translation to creative arts and beyond.

Who were the early adopters of generative AI?

Early adopters of generative AI encompass a diverse range of industries and innovators who recognized the potential of generative AI technology and embraced it in its nascent stages. These pioneers leveraged generative AI to push the boundaries of creativity, problem-solving, and automation.

Technology Companies

Tech giants like Google, Microsoft, and Facebook were early adopters, incorporating generative AI into various products and services, such as language translation, content generation, and image synthesis.

Creative Industries

The entertainment and creative sectors embraced generative AI for music composition, video game design, and art generation. Musicians, artists, and game developers used AI to enhance their creative processes.

Healthcare

In the medical field, researchers used generative AI for drug discovery, medical image analysis, and patient data prediction to improve patient care and treatment.

Financial Services

Early adopters in finance utilized generative AI for fraud detection, risk assessment, and algorithmic trading to gain a competitive edge and enhance security.

Automotive

Companies in the automotive sector employed generative AI for autonomous vehicle development, design optimization, and predictive maintenance.

Content Generation

Content creators, marketers, and e-commerce businesses harnessed generative AI for generating product descriptions, advertisements, and personalized recommendations.

Robotics

In robotics, generative AI aided in motion planning, simulation, and the development of more intelligent and adaptable robotic systems.

These early adopters recognized that generative AI had the potential to disrupt and innovate their respective fields, leading to efficiency gains, new opportunities, and novel applications. Their pioneering efforts paved the way for the widespread adoption and continued advancement of generative AI technologies in various sectors.

A Journey Through Generative AI History

The history of generative AI is a fascinating journey that spans several decades, with roots in early computer science and artificial intelligence research. Generative AI, in essence, refers to the ability of machines to generate content, whether it be text, images, music, or other forms of data, that resembles human-created content. Here’s an overview of how generative AI evolved from its early research stages:

Early Beginnings (1940s-1950s)

In the 1940s, in the midst of World War II, the seeds of artificial intelligence (AI) and machine learning were sown by visionary figures like Alan Turing and Norbert Wiener, although their primary focus at the time was not generative AI. Their pioneering work laid the theoretical foundations for the development of intelligent machines and the study of computational intelligence.

Alan Turing, a British mathematician and computer scientist, is renowned for the Turing machine, an abstract model introduced in his 1936 paper on computable numbers that captured the fundamental principles of computation. His later work, including the 1950 proposal now known as the Turing Test, set the stage for thinking about how machines could simulate human-like intelligence. While his focus was on the broader concept of AI and its potential to replicate human thought, he did not specifically delve into generative AI as we understand it today.
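To make the idea of a Turing machine concrete, here is a minimal sketch of a single-tape simulator in Python. A machine is just a table of rules: given the current state and the symbol under the head, write a symbol, move one cell, and switch state. The rule-table format and the example machine (a hypothetical unary incrementer that appends one `1` to a block of `1`s) are illustrative assumptions, not Turing’s original notation.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (symbol to write, head move: -1 left / +1 right, next state).
# This table format is an illustrative choice, not Turing's original notation.
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

def run_turing_machine(tape: str, rules: Rules, start: str = "q0", halt: str = "halt",
                       blank: str = "_", max_steps: int = 10_000) -> str:
    """Simulate a single-tape Turing machine and return the final tape contents."""
    cells = dict(enumerate(tape))      # sparse tape: position -> symbol
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, cells.get(head, blank))]
        cells[head] = write            # write the new symbol, then move the head
        head += move
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)

# Hypothetical example machine: unary increment. Scan right over the 1s, then write one more 1.
INCREMENT: Rules = {
    ("q0", "1"): ("1", +1, "q0"),
    ("q0", "_"): ("1", +1, "halt"),
}

print(run_turing_machine("111", INCREMENT))  # 1111
```

Any computation a modern computer performs can, in principle, be expressed as such a rule table, which is why the model became a foundation for thinking about what machines can compute.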

Norbert Wiener, an American mathematician and philosopher, is credited with founding the field of cybernetics, the study of control and communication in machines and living organisms. His work on feedback systems and control mechanisms provided a theoretical framework that would later influence AI research, particularly in areas related to machine learning and adaptive systems.

It’s important to note that during the 1940s, the practical implementation of AI and generative AI was far from feasible due to limitations in computing power and data availability. However, the pioneering ideas and theoretical contributions of Turing, Wiener, and others of their era set the stage for the subsequent development of AI technologies, including generative AI, in the decades that followed.

Early AI Research (1950s-1960s)

In the nascent days of artificial intelligence research during the 1950s and 1960s, a group of visionary researchers, including John McCarthy, Marvin Minsky, and Allen Newell, played instrumental roles in shaping the field. This period marked the birth of AI as a formal discipline, and these pioneers laid the foundation for subsequent developments in AI technology.

John McCarthy, often referred to as the “father of AI,” was a central figure in this era. He coined the term “artificial intelligence” and co-organized the Dartmouth Workshop in 1956, which is widely regarded as the birth of AI as a field. McCarthy also created the Lisp programming language in 1958, which became a mainstay of AI research for decades.

Marvin Minsky, a cognitive scientist as well as a computer scientist, contributed to early AI by co-founding, with McCarthy, what became the Massachusetts Institute of Technology’s (MIT) AI Laboratory in 1959. He worked on foundational concepts like neural networks and symbolic reasoning, which became cornerstones of AI research.

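Allen Newell, along with Herbert A. Simon and Cliff Shaw, developed the Logic Theorist (1956), which proved theorems from Whitehead and Russell’s Principia Mathematica and is often cited as one of the earliest AI programs, and later the General Problem Solver (GPS). These programs aimed to mimic human problem-solving capabilities using symbolic representations and rules.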

During this period, the predominant approach in AI was rule-based systems and symbolic reasoning, wherein knowledge was represented using symbols and manipulated according to logical rules. These early AI programs laid the groundwork for later developments in expert systems, machine learning, and the broader field of artificial intelligence. While rule-based AI has evolved into more data-driven and probabilistic approaches in modern AI, the foundational work of McCarthy, Minsky, Newell, and their contemporaries remains integral to the history and evolution of AI.

Expert Systems (1970s-1980s)

The development of expert systems in the field of artificial intelligence marked a significant milestone in the history of AI research. These systems built on the earlier symbolic, rule-based approaches but shifted the focus from general-purpose reasoning to encoding human expertise and domain-specific knowledge into computer programs. While expert systems were instrumental in many practical applications, it is essential to clarify that they were not generative AI in the modern sense.

Expert systems were designed to solve well-defined problems within specific domains, such as medical diagnosis, financial analysis, or engineering. They operated by using a knowledge base of facts and rules, coupled with an inference engine to reason and make decisions based on the provided data. The primary goal was to replicate human decision-making processes in a limited, rule-driven manner.
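The knowledge-base-plus-inference-engine design can be illustrated with a tiny forward-chaining engine: it keeps applying if-then rules to the known facts until nothing new can be derived. This is a minimal sketch; the rules and fact names are hypothetical toy examples, and real expert systems had far larger rule bases and often handled uncertainty and explanation as well.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule fires when all of its premises are known facts, adding its conclusion.
Rule = Tuple[FrozenSet[str], str]

def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Inference engine: apply rules repeatedly until no new facts appear (a fixed point)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

# Hypothetical toy knowledge base (not taken from any real expert system).
RULES: List[Rule] = [
    (frozenset({"fever", "cough"}), "possible_flu"),
    (frozenset({"possible_flu", "short_of_breath"}), "refer_to_doctor"),
]

observed = {"fever", "cough", "short_of_breath"}
print(sorted(forward_chain(observed, RULES) - observed))
# ['possible_flu', 'refer_to_doctor']
```

Note that the engine only derives conclusions already written into its rules; it never produces anything outside that fixed vocabulary, which is the contrast with generative AI drawn in the next paragraph.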

In contrast, generative AI, as we understand it today, involves the creation of entirely new and original content. It goes beyond rule-based systems to enable machines to generate creative outputs, whether it’s generating natural language text, synthesizing images, composing music, or other forms of content creation. Generative AI leverages deep learning techniques and large datasets to learn patterns and generate novel content autonomously, often with impressive human-like qualities.

While expert systems laid the foundation for AI applications in various fields, generative AI represents a newer paradigm that emphasizes creativity, natural language understanding, and content generation, making it a distinct and transformative advancement in the AI landscape.

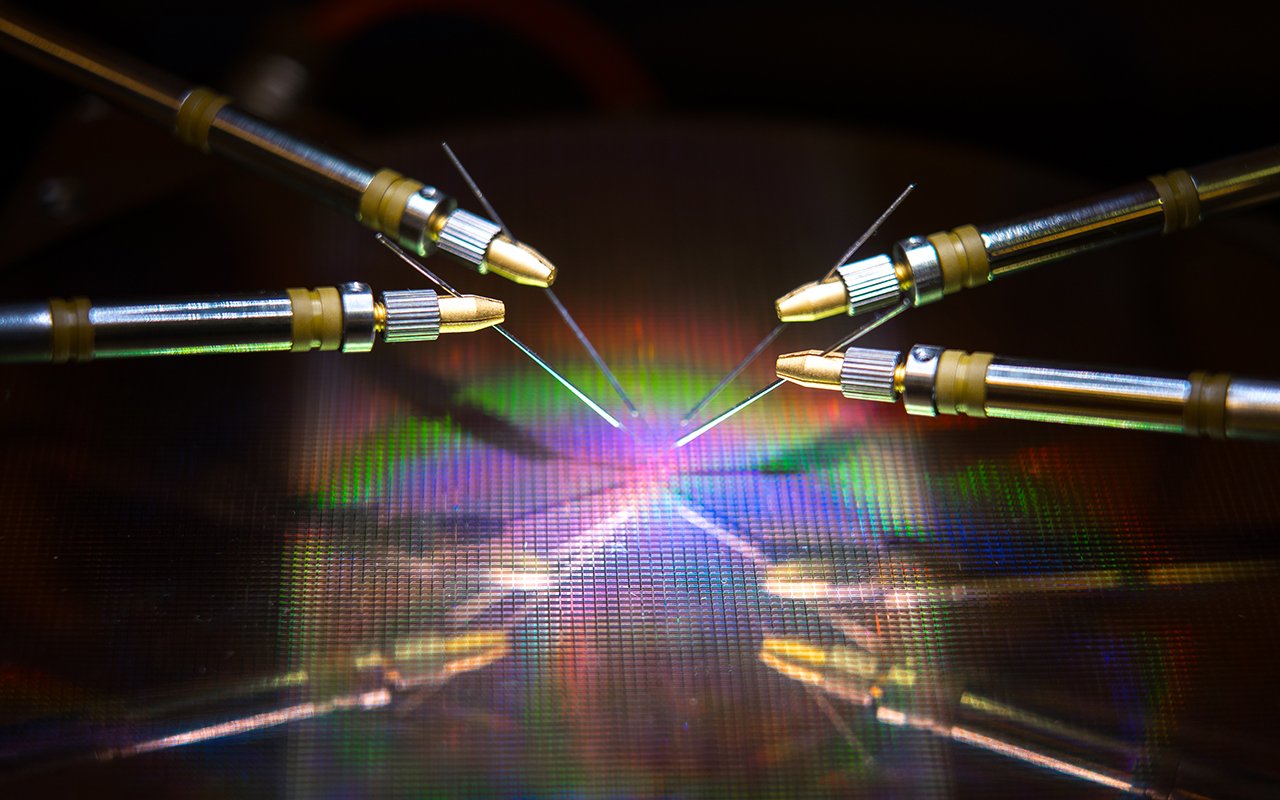

Neural Networks and Connectionism (1980s-1990s)

The resurgence of neural networks and connectionism in the 1980s and 1990s marked a significant turning point in the field of artificial intelligence and laid groundwork that generative AI would later build on. During this era, researchers like Geoffrey Hinton and Yann LeCun played pivotal roles by making groundbreaking contributions to the development and understanding of neural networks, which ultimately paved the way for more sophisticated generative models.

Geoffrey Hinton, often referred to as one of the “godfathers of deep learning,” made substantial strides in the field, notably as a co-author, with David Rumelhart and Ronald Williams, of the influential 1986 paper that popularized backpropagation, the algorithm used to train multilayer neural networks. Hinton’s work laid the foundation for training deeper and more complex neural networks, allowing for the representation of intricate patterns and features in data. His research became instrumental in developing generative models that could produce realistic content, such as images and text.

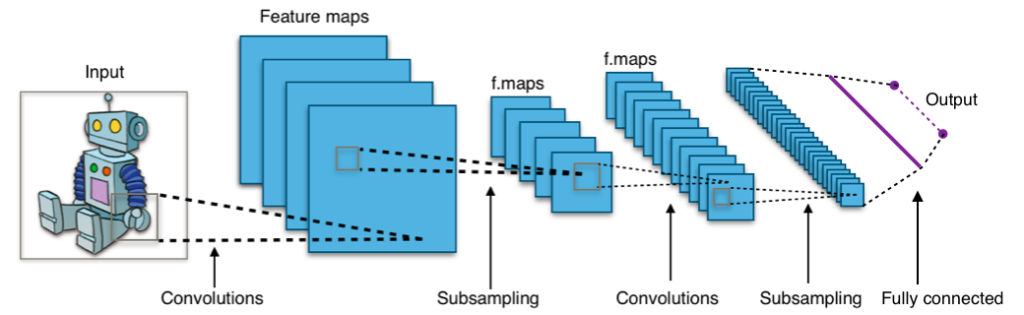
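Backpropagation computes the gradient of the loss with respect to every weight by applying the chain rule backward through the network, reusing values saved from the forward pass. The sketch below does this by hand for a hypothetical tiny network (two inputs, two sigmoid hidden units, one linear output, squared-error loss) and checks one gradient against a finite-difference estimate. The network size, random weights, and input are arbitrary illustrative choices.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """2 inputs -> 2 sigmoid hidden units -> 1 linear output; returns (hidden, output)."""
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(W1[j][i] * x[i] for i in range(len(x))) + b1[j]) for j in range(len(b1))]
    y = sum(w2[j] * h[j] for j in range(len(h))) + b2
    return h, y

def loss(params, x, target):
    return 0.5 * (forward(params, x)[1] - target) ** 2

def backprop(params, x, target):
    """Gradients of the squared-error loss, computed backward with the chain rule."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - target                                   # dL/dy
    dw2 = [dy * hj for hj in h]                       # dL/dw2_j = dL/dy * h_j
    db2 = dy
    # Push the error back through w2 and the sigmoid derivative h * (1 - h).
    dz = [dy * w2[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
    dW1 = [[dz[j] * xi for xi in x] for j in range(len(h))]
    db1 = dz
    return dW1, db1, dw2, db2

rng = random.Random(0)
params = ([[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],  # W1
          [rng.uniform(-1, 1) for _ in range(2)],                     # b1
          [rng.uniform(-1, 1) for _ in range(2)],                     # w2
          rng.uniform(-1, 1))                                         # b2
x, target = [0.5, -1.2], 0.3
dW1, db1, dw2, db2 = backprop(params, x, target)

# Check one gradient entry, dL/dW1[0][1], against a central finite difference.
eps = 1e-6
W1, b1, w2, b2 = params
W1_plus = [row[:] for row in W1]
W1_plus[0][1] += eps
W1_minus = [row[:] for row in W1]
W1_minus[0][1] -= eps
numeric = (loss((W1_plus, b1, w2, b2), x, target)
           - loss((W1_minus, b1, w2, b2), x, target)) / (2 * eps)
print(abs(numeric - dW1[0][1]) < 1e-6)  # True
```

The finite-difference check costs two forward passes per weight, while backpropagation obtains all gradients in one backward pass, which is what makes training large networks practical.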

Yann LeCun, another luminary in the field, made major contributions to convolutional neural networks (CNNs), including the LeNet models for handwritten digit recognition. CNNs are particularly adept at processing grid-like data, such as images and video, and they have become a core building block of many image generation models.
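The core operation of a CNN layer is sliding a small kernel across the input and taking a weighted sum at each position. Below is a minimal pure-Python sketch of a 2D "valid" convolution (no padding, stride 1); like most deep learning libraries, it actually computes cross-correlation, meaning the kernel is not flipped. The edge-detecting kernel is a hand-picked illustration, whereas a CNN learns its kernel values during training.

```python
from typing import List

Matrix = List[List[float]]

def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` with no padding and stride 1, as in a basic CNN layer.

    Like most deep learning libraries, this computes cross-correlation (no kernel flip).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A tiny image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked kernel that responds to left-to-right increases in brightness.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(conv2d_valid(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0]]
```

The same small kernel is reused at every position, so the layer detects a feature wherever it appears in the image while needing only a handful of weights.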

These advancements in neural networks and connectionism led to a renewed enthusiasm for generative AI. Researchers began to explore the potential of neural networks in generating content that resembled human-created data. This era laid the groundwork for subsequent breakthroughs, including the development of Generative Adversarial Networks (GANs) and other generative models, which have since reshaped numerous industries and applications, from computer vision to natural language processing, and continue to push the boundaries of AI creativity.

Recurrent Neural Networks (RNNs) and Sequence Generation (2000s)

Recurrent Neural Networks (RNNs) represent a pivotal advancement in the realm of artificial intelligence, particularly in the context of handling sequential data. Their popularity surged due to their inherent ability to capture temporal dependencies and contextual information within data sequences, making them invaluable in various applications, including natural language processing and speech recognition.

RNNs are designed with a recurrent structure, allowing them to maintain a hidden state that evolves as they process each element of a sequence. This hidden state serves as a form of memory, retaining information from prior time steps and influencing the predictions or outputs at subsequent time steps. This architecture enables RNNs to excel in tasks where the order of data elements matters, such as language modeling, sentiment analysis, and machine translation.
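The recurrence described above, in which the hidden state is updated as h_t = tanh(W_x x_t + W_h h_{t-1} + b), can be sketched in a few lines. This minimal version uses scalar weights with arbitrary illustrative values rather than a trained model; real RNNs use vectors and weight matrices learned from data.

```python
import math
from typing import List

def rnn_hidden_states(inputs: List[float], w_x: float = 0.8, w_h: float = 0.5,
                      b: float = 0.0) -> List[float]:
    """Run a scalar Elman-style RNN and return the hidden state after each step.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), starting from h_0 = 0.
    """
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)  # new state mixes the current input with memory
        states.append(h)
    return states

# Same inputs in a different order give a different final state,
# because the hidden state carries information from earlier steps.
print(rnn_hidden_states([1.0, 0.0, 0.0])[-1])
print(rnn_hidden_states([0.0, 0.0, 1.0])[-1])
```

In the first sequence the early input’s influence decays through repeated multiplication by w_h, while in the second it arrives last and dominates, which is exactly the order sensitivity that makes RNNs suited to language.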

In the context of generative AI, RNNs played a crucial role in generating sequences of text. Language models built on RNNs could produce coherent and contextually relevant text, making them foundational in tasks like text generation, dialogue systems, and even creative writing.

However, RNNs also have their limitations, such as the vanishing gradient problem, which can hinder their ability to capture long-range dependencies in data. This limitation led to the development of gated architectures like LSTMs (Long Short-Term Memory) and GRUs (Gated Recurrent Units), which mitigated the vanishing gradient issue and further improved the capabilities of generative AI systems.
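The vanishing gradient problem follows from the chain rule. For a scalar tanh RNN, h_t = tanh(w_h h_{t-1} + w_x x_t), the gradient of the last hidden state with respect to the first is a product of one factor per time step, w_h (1 - h_t^2). When those factors are below 1 in magnitude, the product shrinks exponentially with sequence length. The sketch below computes that product; the weights and constant input are arbitrary illustrative values.

```python
import math

def grad_last_wrt_first(length: int, w_h: float = 0.9, w_x: float = 0.5,
                        x: float = 1.0) -> float:
    """d h_T / d h_0 for a scalar tanh RNN fed a constant input x, starting from h_0 = 0."""
    h, grad = 0.0, 1.0
    for _ in range(length):
        h = math.tanh(w_h * h + w_x * x)
        grad *= w_h * (1.0 - h * h)  # chain rule: d h_t / d h_{t-1} = w_h * tanh'(pre-activation)
    return grad

for T in (1, 10, 50):
    print(T, grad_last_wrt_first(T))  # the gradient shrinks rapidly as T grows
```

Here every factor is smaller than w_h = 0.9, so after 50 steps the gradient is below 0.9^50, too small to carry a useful learning signal from the start of the sequence. LSTMs and GRUs address this with gates that let information flow across many steps without being squashed at each one.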

While RNNs have been partially eclipsed by more recent advances, such as Transformer-based models like GPT-3 and BERT, their contributions to the field of generative AI remain significant. They represent a crucial step in the evolution of AI technologies, paving the way for more sophisticated and capable models that continue to shape the future of artificial intelligence and natural language understanding.

Deep Learning and Generative Adversarial Networks (GANs) (2010s)

Deep learning techniques, particularly Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs), sparked a revolution in the field of generative AI. GANs, a groundbreaking invention introduced by Ian Goodfellow and his colleagues in 2014, represent a significant leap forward in the realm of generative models.

At the heart of GANs is a fascinating concept: the adversarial training of two neural networks—the generator and the discriminator. These networks engage in a continuous duel, where the generator’s primary objective is to create data (e.g., images) that is indistinguishable from real, human-generated data, while the discriminator aims to differentiate between genuine and generated content. This adversarial dynamic leads to a competitive learning process, driving both networks to improve continually.
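The adversarial game can be written as the minimax objective from Goodfellow et al. (2014): V(D, G) = E_{x~p_data}[log D(x)] + E_{z~p_z}[log(1 - D(G(z)))], which the discriminator maximizes and the generator minimizes. For a fixed generator with output distribution p_g, the best discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), and the value reaches its minimum, -log 4, exactly when p_g matches p_data. The sketch below checks these two facts on small discrete distributions chosen for illustration; it evaluates the objective rather than training real networks.

```python
import math
from typing import List, Sequence

def optimal_discriminator(p_data: Sequence[float], p_g: Sequence[float]) -> List[float]:
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)): the best discriminator for a fixed generator.

    Assumes every outcome has nonzero probability under at least one distribution.
    """
    return [pd / (pd + pg) for pd, pg in zip(p_data, p_g)]

def gan_value(p_data: Sequence[float], p_g: Sequence[float], d: Sequence[float]) -> float:
    """V(D, G) = E_{x~p_data}[log D(x)] + E_{x~p_g}[log(1 - D(x))] over finite outcomes."""
    total = 0.0
    for pd, pg, dx in zip(p_data, p_g, d):
        if pd > 0:
            total += pd * math.log(dx)        # D gains by scoring real data as real
        if pg > 0:
            total += pg * math.log(1.0 - dx)  # D gains by scoring generated data as fake
    return total

p_data = [0.1, 0.2, 0.3, 0.4]   # illustrative "real" distribution over 4 outcomes

# Generator matches the data: D* = 1/2 everywhere and V = -log 4, the global optimum.
matched = gan_value(p_data, p_data, optimal_discriminator(p_data, p_data))

# Mismatched generator: D* can tell the two apart, so V(D*, G) is higher than -log 4.
p_bad = [0.4, 0.3, 0.2, 0.1]
mismatched = gan_value(p_data, p_bad, optimal_discriminator(p_data, p_bad))

print(round(matched, 6), round(-math.log(4), 6))  # -1.386294 -1.386294
print(mismatched > matched)                        # True
```

In actual GAN training, neither network has access to these probabilities: both are neural networks updated by stochastic gradient steps on samples, with the discriminator ascending V and the generator descending it.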

GANs have demonstrated exceptional capabilities in generating realistic images, often blurring the line between machine-generated and human-created content. This ability has found applications across various domains, including art, fashion, video game design, and even medicine. For instance, artists have used GANs to produce unique artworks, fashion designers have employed them for creative designs, and medical professionals have leveraged GANs to generate synthetic medical images to aid in diagnosis and treatment planning.

The versatility of GANs has also extended to addressing practical challenges. They have been applied in generating high-quality synthetic data for training machine learning models, enhancing the performance of computer vision tasks, and simulating real-world scenarios for training autonomous systems.

Transformer Models and BERT (2017-2018)

Transformer models represent a paradigm shift in the field of natural language processing (NLP) and have made a significant impact on the evolution of generative AI. The Transformer architecture, introduced by Vaswani et al. in the 2017 paper “Attention Is All You Need,” dispensed with the recurrence used in earlier sequence-to-sequence models built on recurrent neural networks (RNNs) and relied on attention instead.

At the core of the Transformer is self-attention, which lets the model weigh the importance of every position in the input sequence when computing the representation of each position. Because any two positions are connected directly rather than through a chain of recurrent steps, Transformers capture long-range dependencies well and can process all positions of a sequence in parallel during training, making them highly effective for tasks involving sequential information.
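The attention computation defined by Vaswani et al. is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, where each query is compared with every key and the resulting weights mix the values. The pure-Python sketch below implements that formula for small matrices; it omits the learned projections, multiple heads, and masking that a full Transformer layer adds, and the toy inputs are arbitrary.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(row: List[float]) -> List[float]:
    m = max(row)                                  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)                 # how much this query attends to each key
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out

# Three token positions with 2-dimensional vectors (illustrative numbers).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Self-attention: queries, keys, and values all come from the same sequence,
# so each output row is a weighted mix of all rows of X.
print(attention(X, X, X))
```

Every query is scored against every key in one step, which is the direct position-to-position connection that lets Transformers handle long-range dependencies and parallelize over the sequence.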

One of the most notable offspring of the Transformer architecture is BERT (Bidirectional Encoder Representations from Transformers), introduced by Google AI researchers in 2018. BERT popularized the pre-training and fine-tuning approach for NLP: it is first pre-trained on a massive text corpus by predicting masked-out words from the context on both sides, then fine-tuned on specific downstream tasks. This approach achieved state-of-the-art results on a range of NLP benchmarks.
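BERT’s masked language modeling objective selects about 15% of input tokens as prediction targets. Of those, 80% are replaced with a [MASK] token, 10% with a random token, and 10% are left unchanged, and the model is trained to recover the originals. The sketch below applies that recipe in simplified form: each token is selected independently with probability 15%, it splits on whitespace instead of using BERT’s WordPiece subword tokens, and the tiny vocabulary and sentence are illustrative.

```python
import random
from typing import List, Optional, Tuple

def mask_tokens(tokens: List[str], vocab: List[str], rng: random.Random,
                mask_prob: float = 0.15) -> Tuple[List[str], List[Optional[str]]]:
    """Return (corrupted tokens, labels); labels hold the original token at selected positions."""
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                           # not selected: no prediction target here
        labels[i] = tok                        # the model must recover the original token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = "[MASK]"            # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)   # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels

rng = random.Random(0)
sentence = "the cat sat on the mat".split()
print(mask_tokens(sentence, ["the", "cat", "sat", "on", "mat", "dog"], rng))
```

Because a masked word can sit anywhere in the sentence, the model must use context from both its left and its right, which is the "bidirectional" part of BERT’s name.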

These Transformer-based models have revolutionized generative AI in NLP. They excel in machine translation, the task on which the original Transformer was first evaluated. Text generation tasks, such as chatbot responses and content generation, benefit from the rich contextual understanding they provide. Furthermore, language understanding tasks, like sentiment analysis and question-answering, have seen significant improvements due to the pre-trained representations these models offer.

Large Language Models (Late 2010s-Present)

Models like GPT (Generative Pre-trained Transformer) represent a significant leap forward in the field of generative AI. These models have garnered immense prominence due to their remarkable ability to generate human-like text. The key to their success lies in a two-step process: pre-training and fine-tuning.

In the pre-training phase, GPT models are trained on massive amounts of text, much of it drawn from the internet, to predict the next token (a word or word fragment) given the text that precedes it. Learning to make this prediction well requires picking up grammar, context, and even cultural references, so GPT models absorb a broad store of patterns and facts about the world, making them capable of generating coherent and contextually relevant text.
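Once a model has learned next-token probabilities, it generates text autoregressively: predict a distribution over the next token, pick one, append it, and repeat until an end token appears. The sketch below shows only that generation loop. A hypothetical hand-written bigram table stands in for the trained Transformer, and greedy decoding (always taking the most likely token) stands in for the sampling strategies used in practice.

```python
from typing import Dict, List

# Hypothetical next-token probabilities P(next | current). A real GPT model conditions on
# the whole preceding context with a Transformer, not just on the previous token.
BIGRAMS: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"model": 0.6, "text": 0.4},
    "model": {"writes": 0.7, "</s>": 0.3},
    "writes": {"text": 0.8, "</s>": 0.2},
    "text": {"</s>": 1.0},
    "a": {"model": 1.0},
}

def generate_greedy(start: str = "<s>", max_tokens: int = 10) -> List[str]:
    """Autoregressive decoding: repeatedly append the most probable next token."""
    out = [start]
    for _ in range(max_tokens):
        dist = BIGRAMS[out[-1]]
        nxt = max(dist, key=dist.get)   # greedy choice; sampling would draw from dist instead
        if nxt == "</s>":               # end-of-sequence token stops generation
            break
        out.append(nxt)
    return out[1:]                      # drop the start token

print(" ".join(generate_greedy()))  # the model writes text
```

Each generated token becomes part of the context for the next prediction, which is why the same pre-trained model can continue any prompt it is given.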

The fine-tuning phase takes these pre-trained models and tailors them for specific tasks or domains. For instance, a GPT model can be fine-tuned for chatbot applications, medical diagnosis, or content generation in various industries. This fine-tuning process refines the model’s ability to generate text that aligns with the objectives of the task at hand.

GPT models have proven their mettle across a multitude of applications. In chatbots, they can engage in natural and context-aware conversations, providing valuable customer support and information. In content generation, they can automatically produce articles, product descriptions, and creative pieces, saving time and effort for content creators.

Furthermore, GPT-based models have found applications in fields such as language translation, sentiment analysis, and even creative writing. Their adaptability and versatility make them indispensable tools in the AI landscape, and as research and development in generative AI continue, we can expect even more impressive applications to emerge in the future. These models represent a significant milestone in the ongoing quest to bridge the gap between human and machine-generated content.

The history of generative AI is a testament to the remarkable evolution of artificial intelligence (AI) capabilities. Starting from early AI concepts, which laid the theoretical foundations, to the emergence of powerful generative models, the journey has been profound. Over the years, researchers and developers have tirelessly worked to enhance generative AI’s ability to create content across diverse domains such as text, images, music, and more.

Generative AI has already demonstrated its potential to generate human-like text, create realistic images, compose music, and even design entire virtual worlds. Applications span chatbots and conversational agents, art creation, content recommendation systems, and scientific research.

In the ever-evolving landscape of AI, the exploration of generative models remains a dynamic and active area of research and development. Innovations in architecture, training techniques, and ethical considerations continue to propel generative AI forward, opening doors to unimaginable possibilities and further solidifying its position as a transformative force in the realm of artificial intelligence.